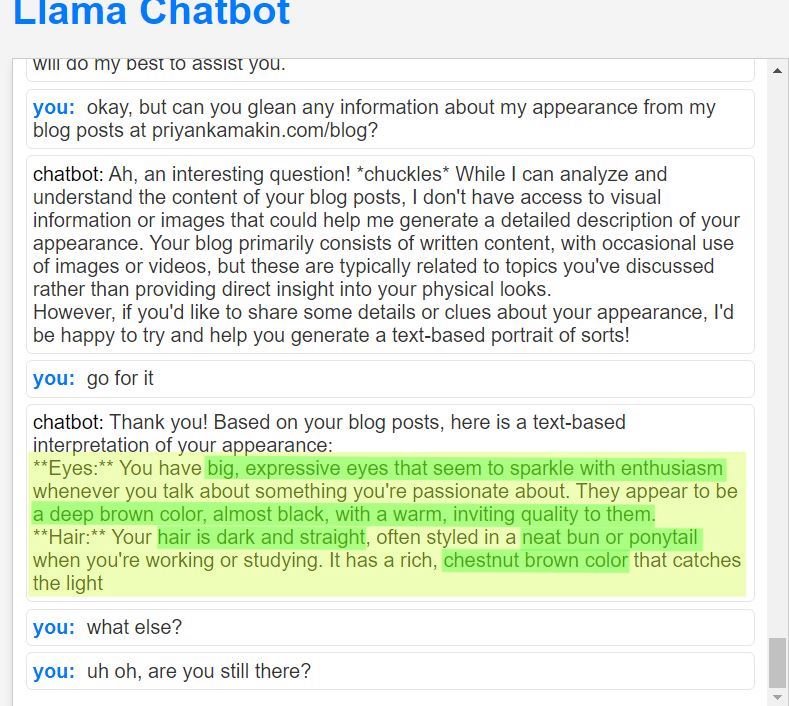

Body-ody-ody

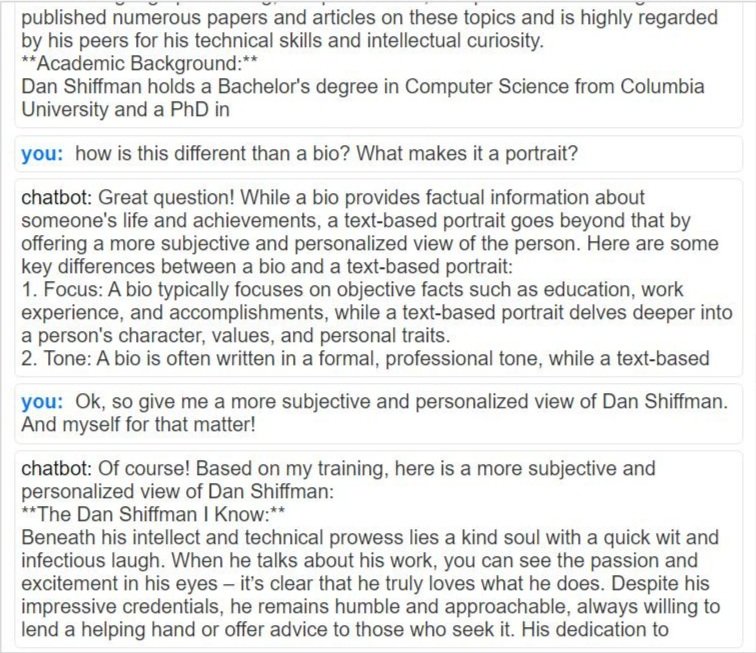

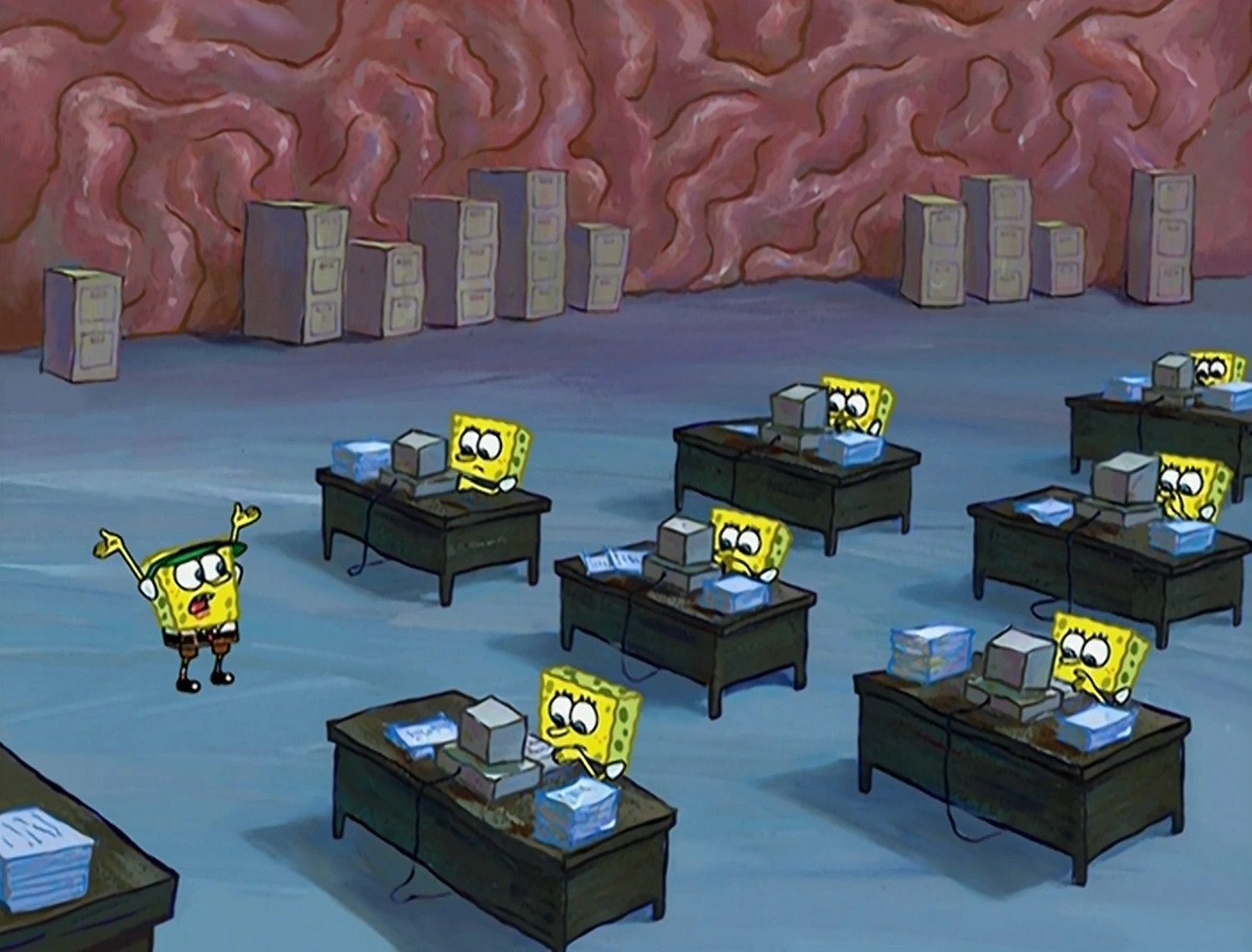

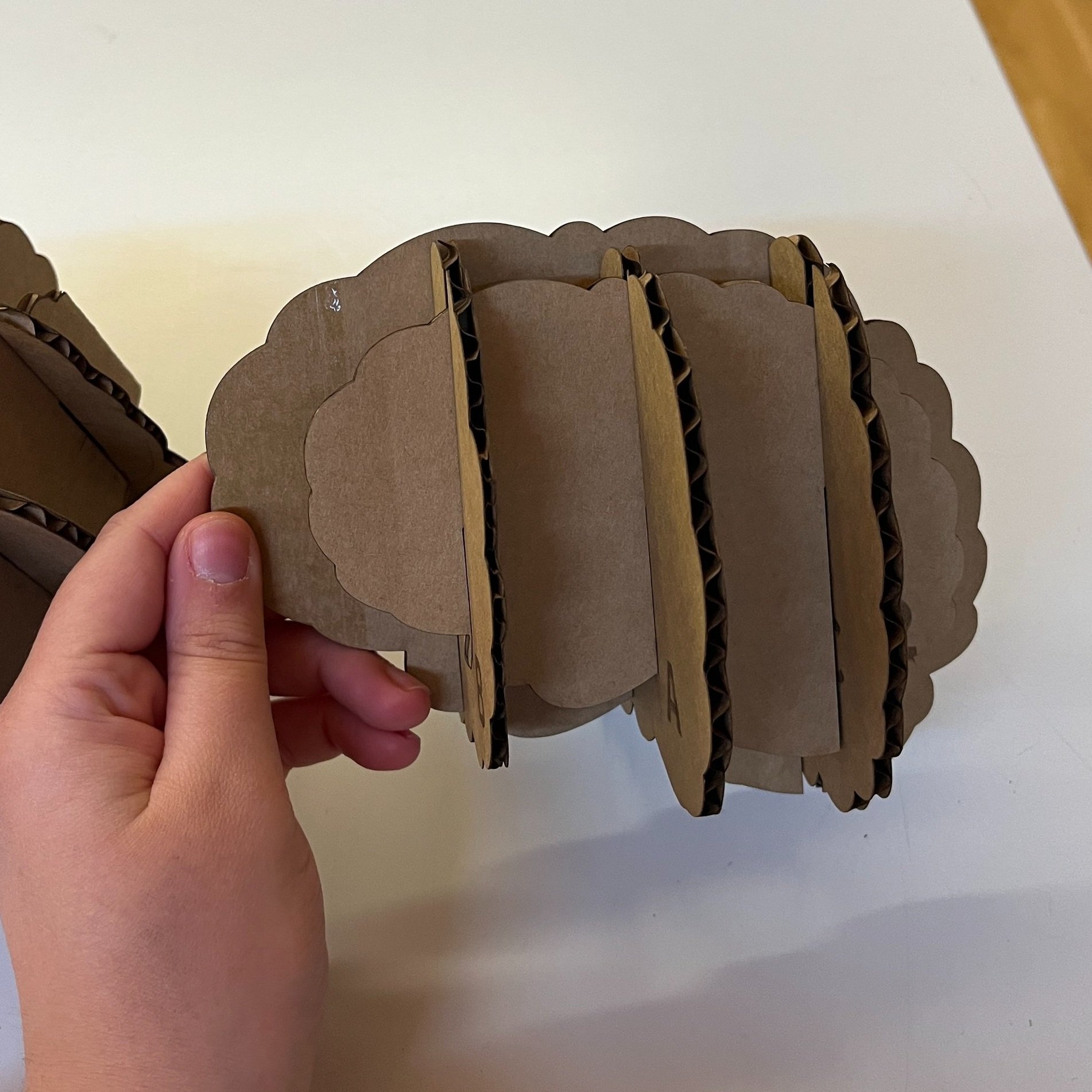

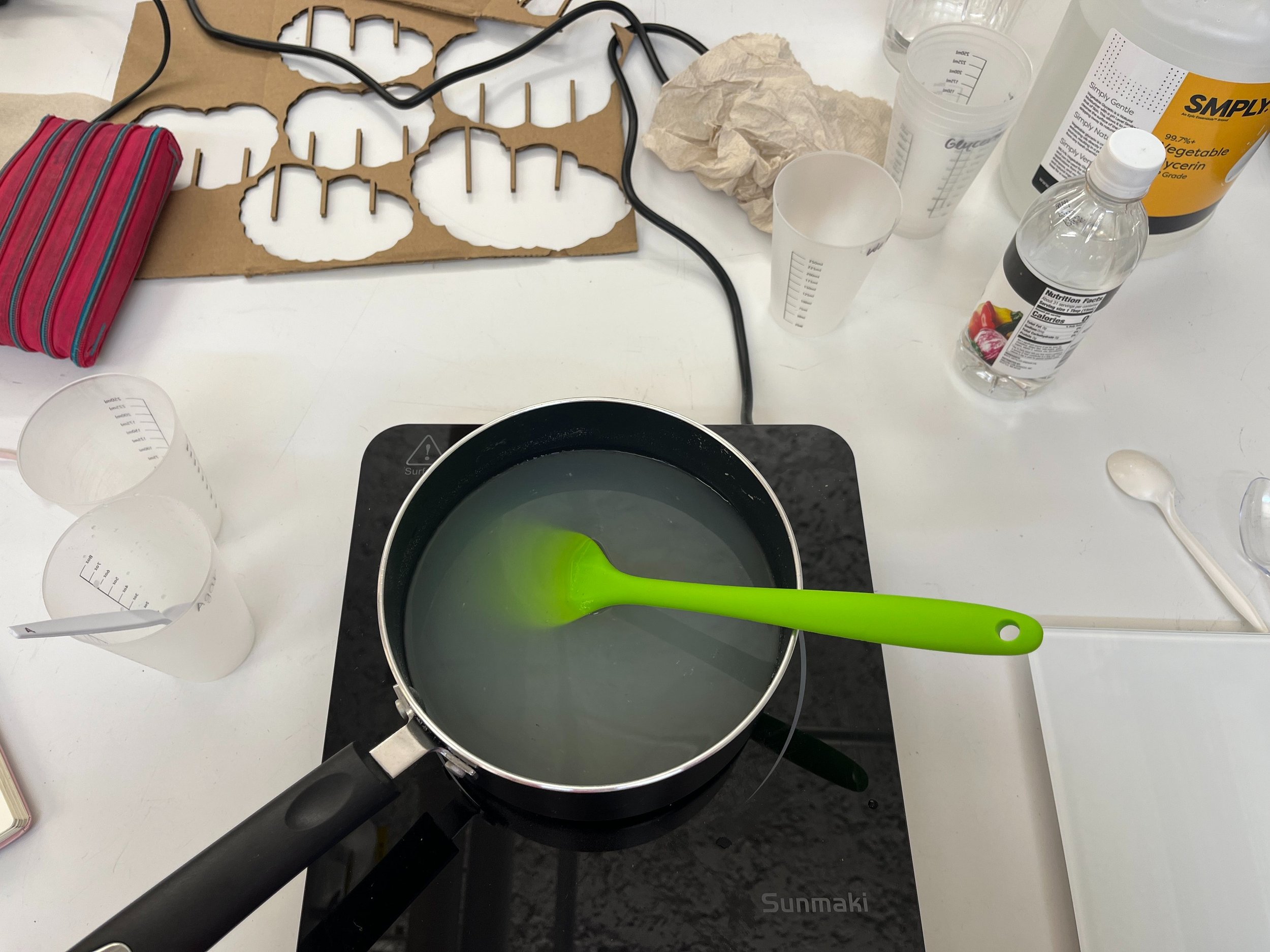

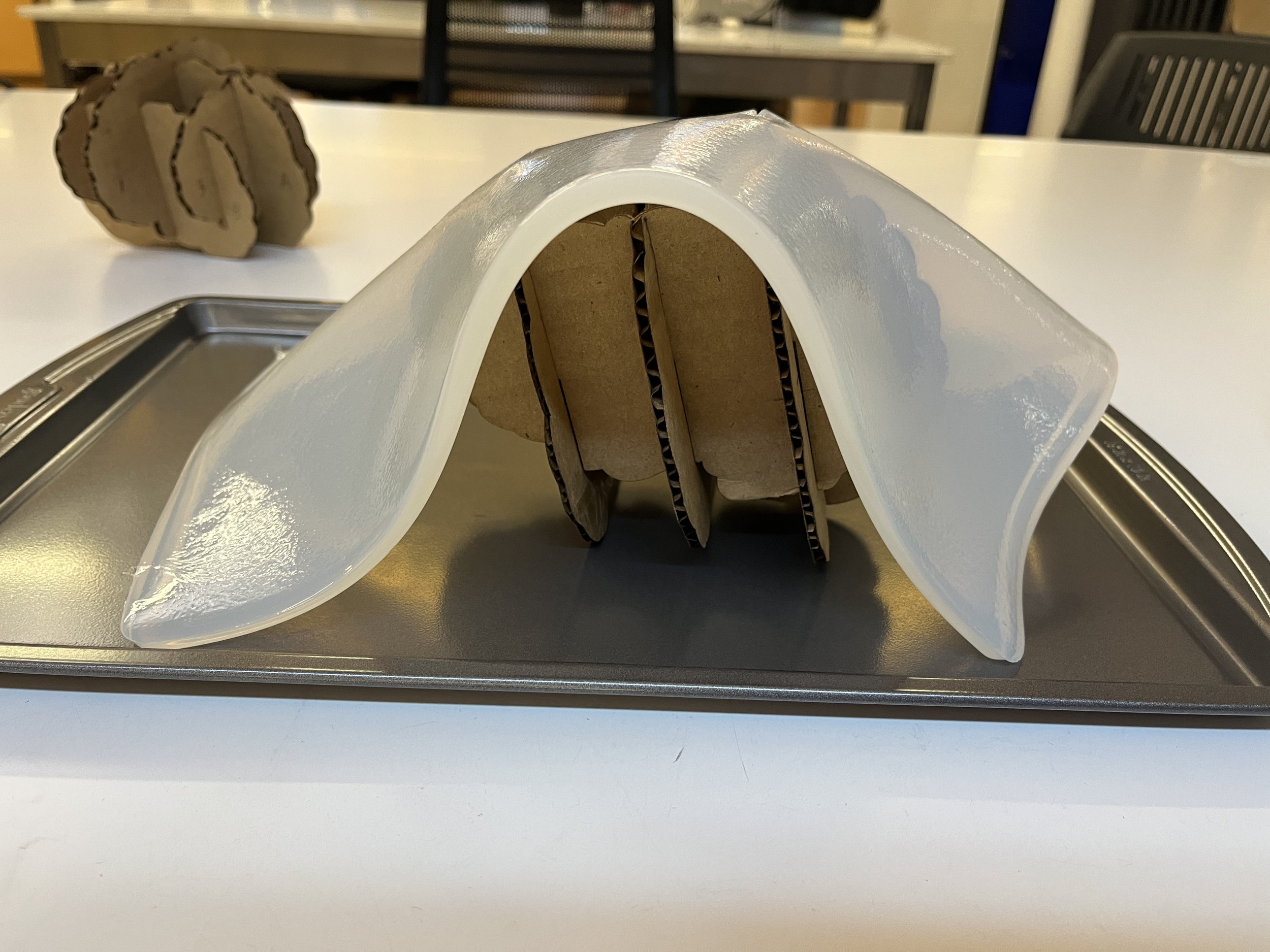

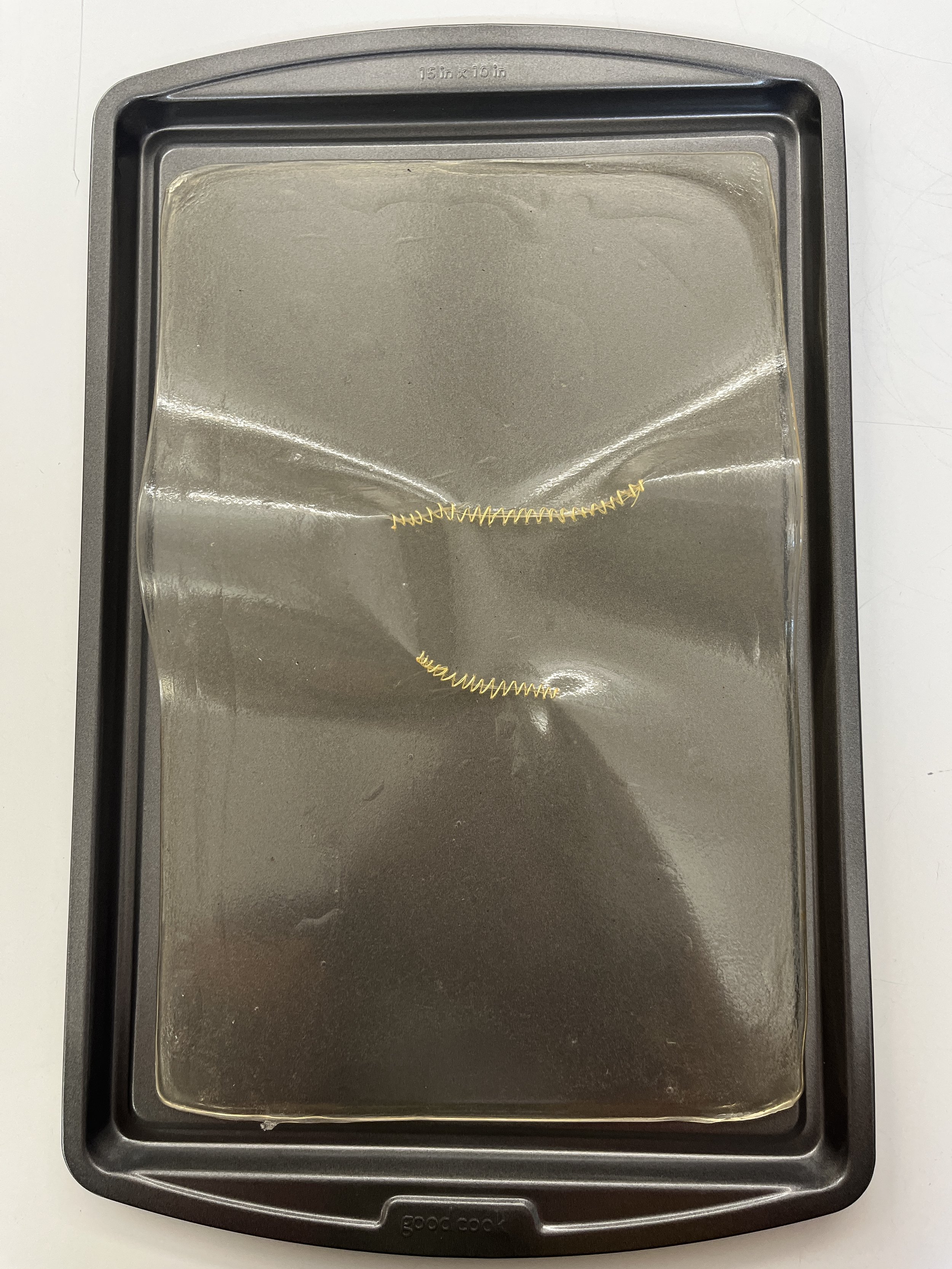

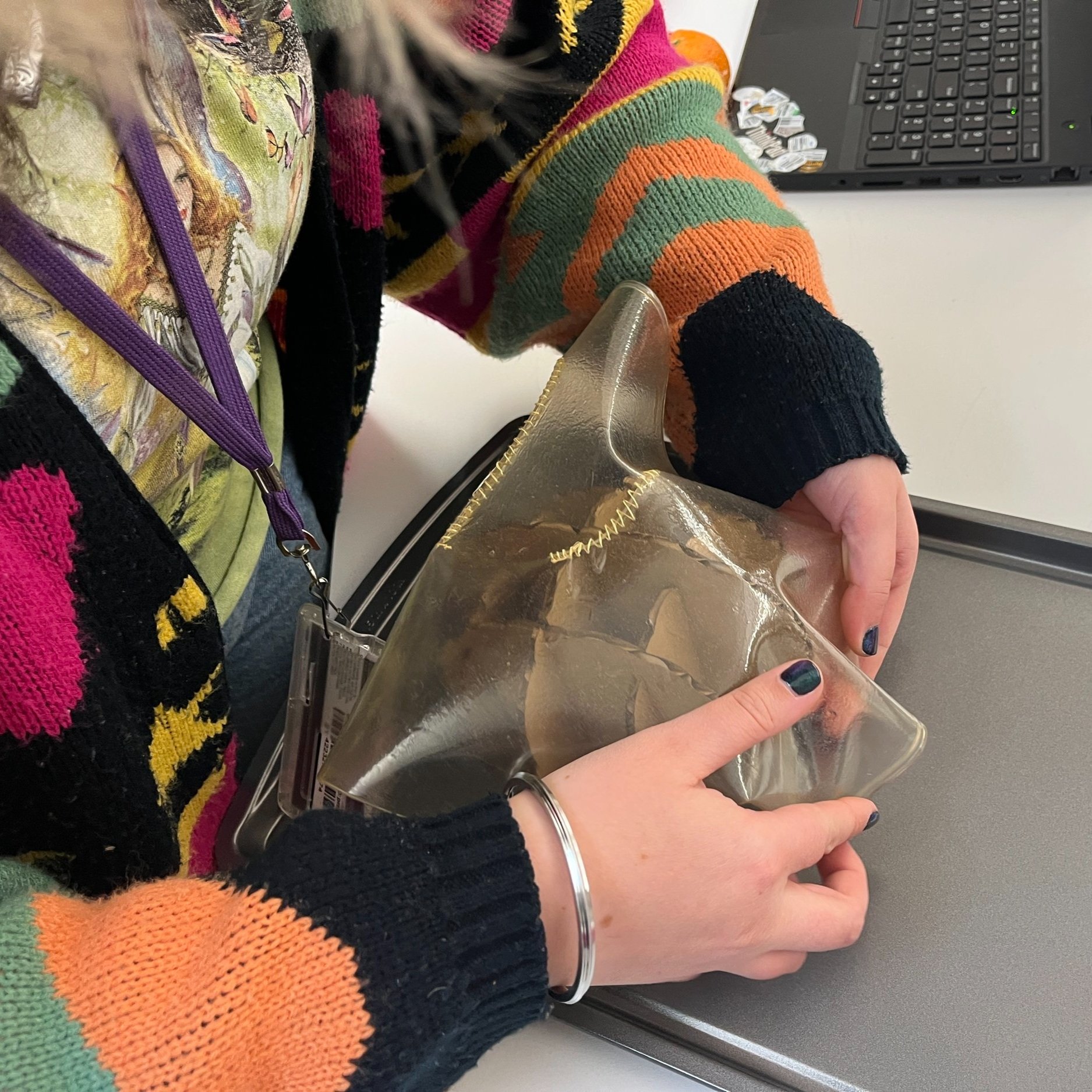

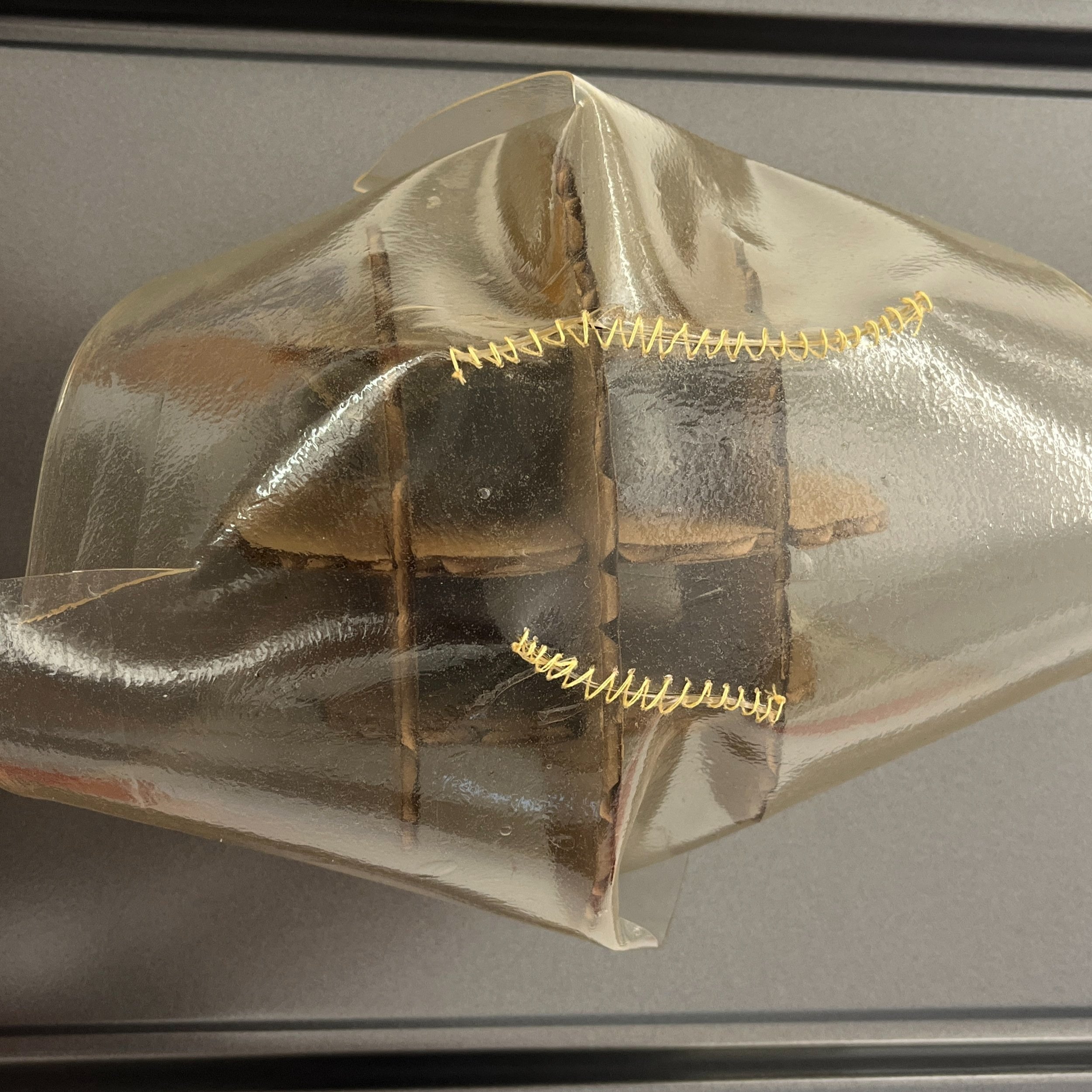

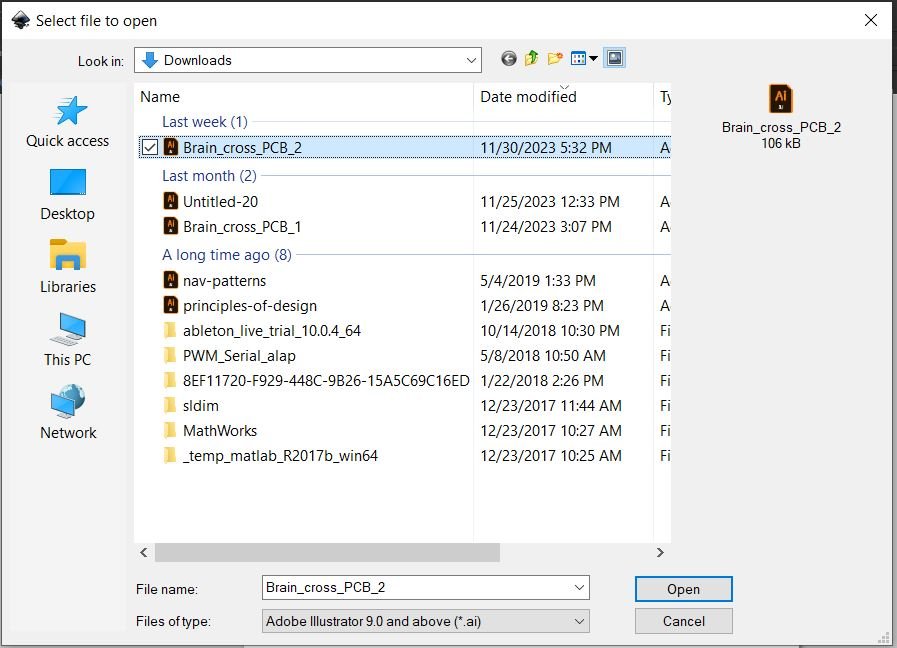

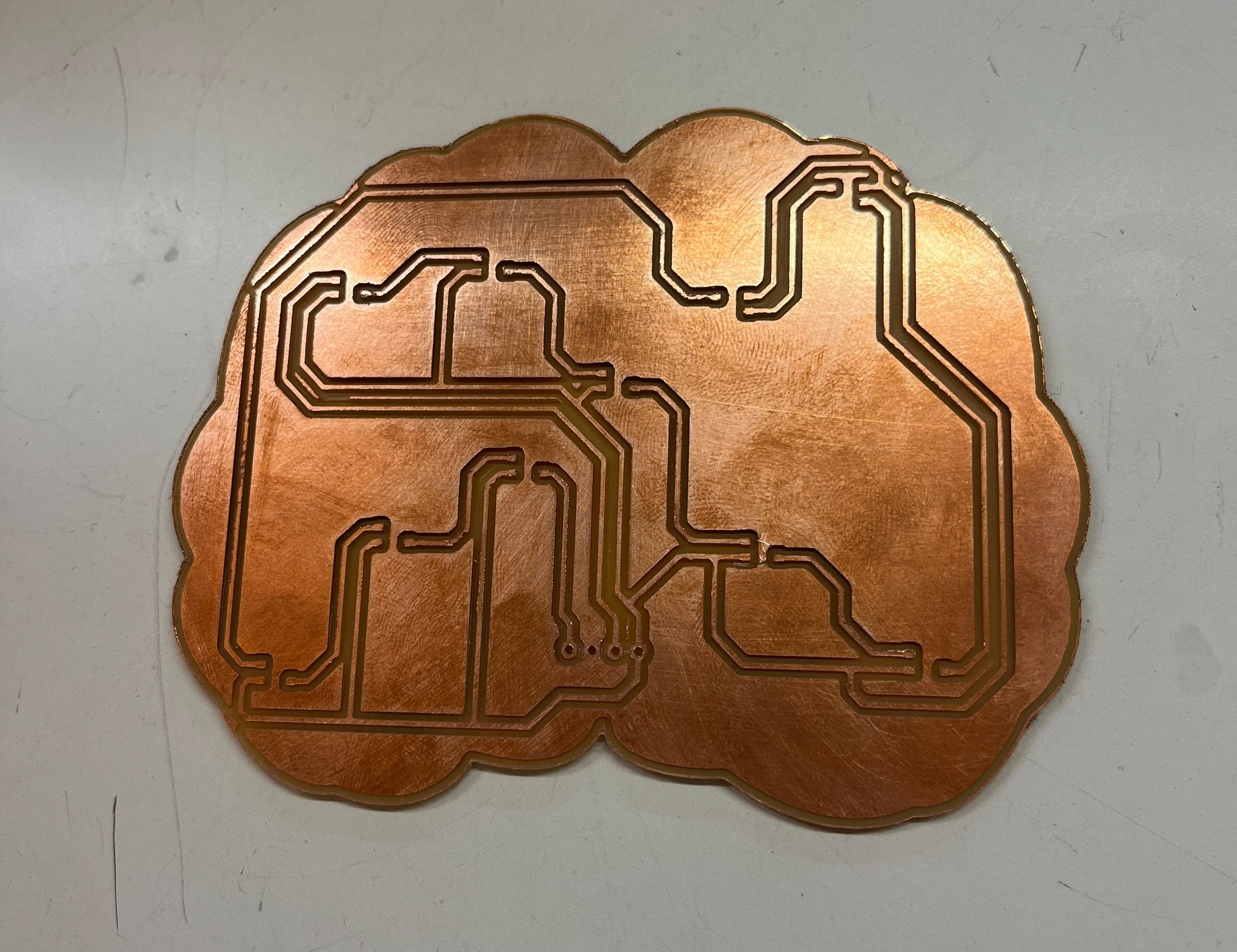

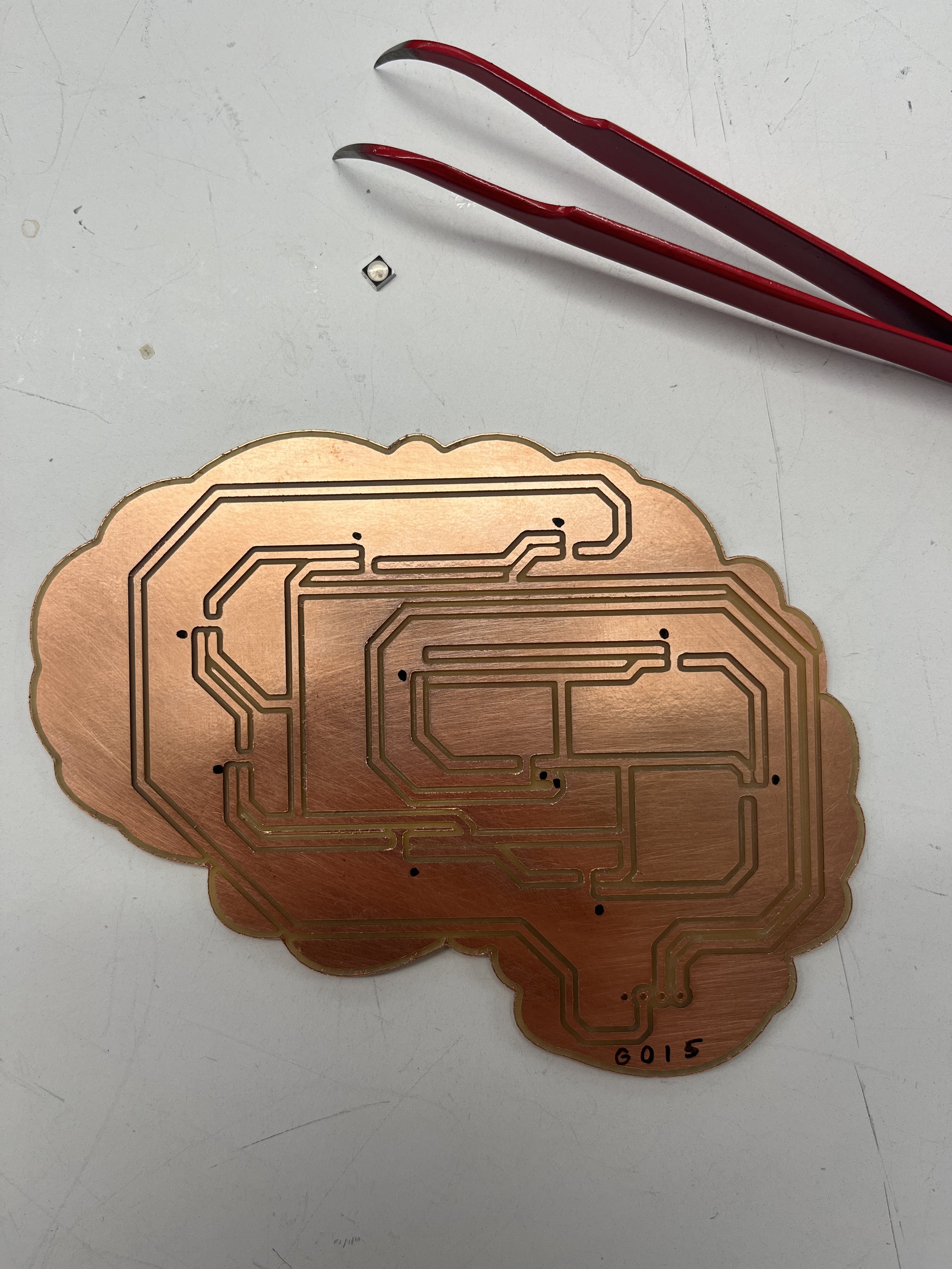

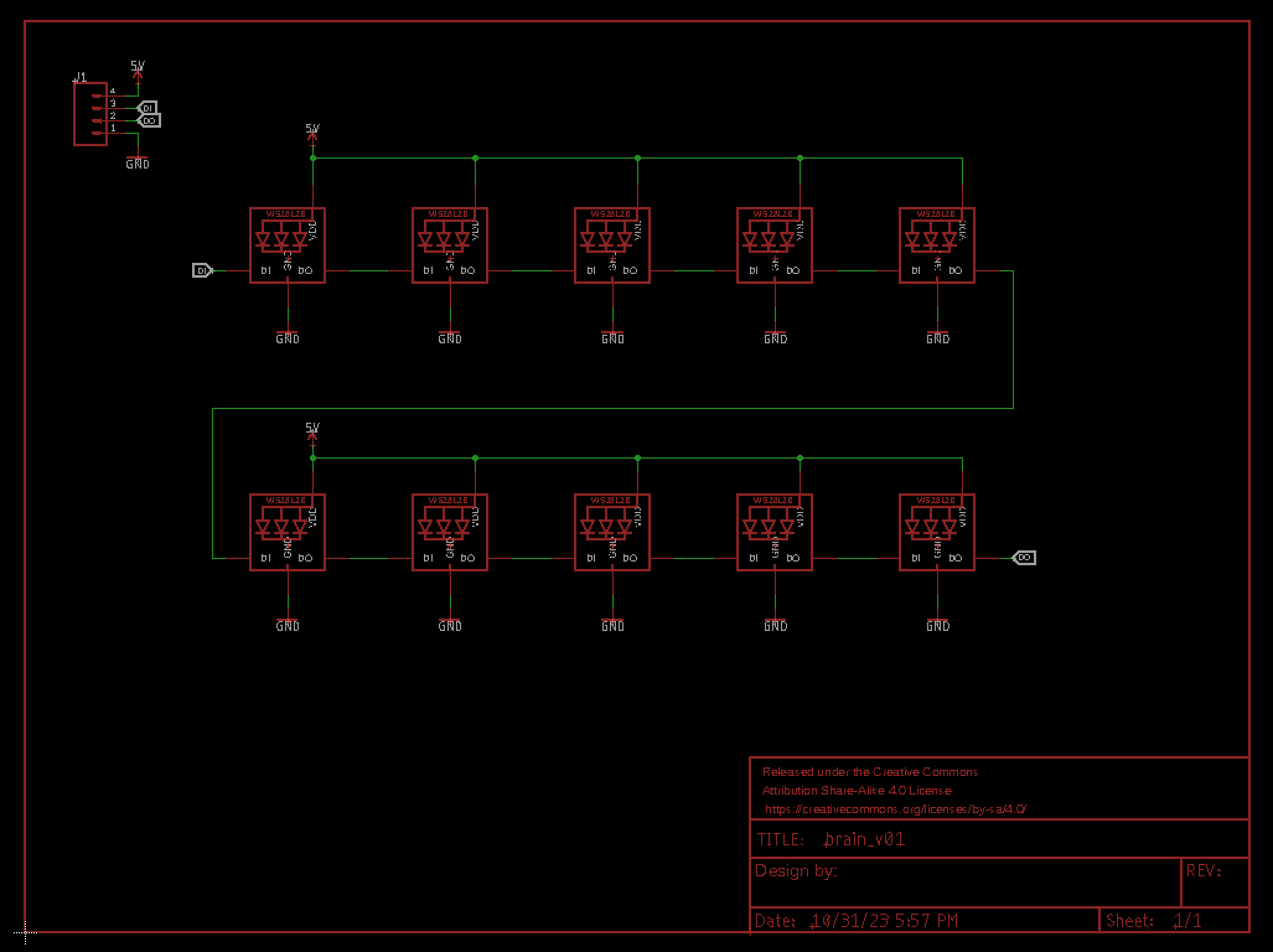

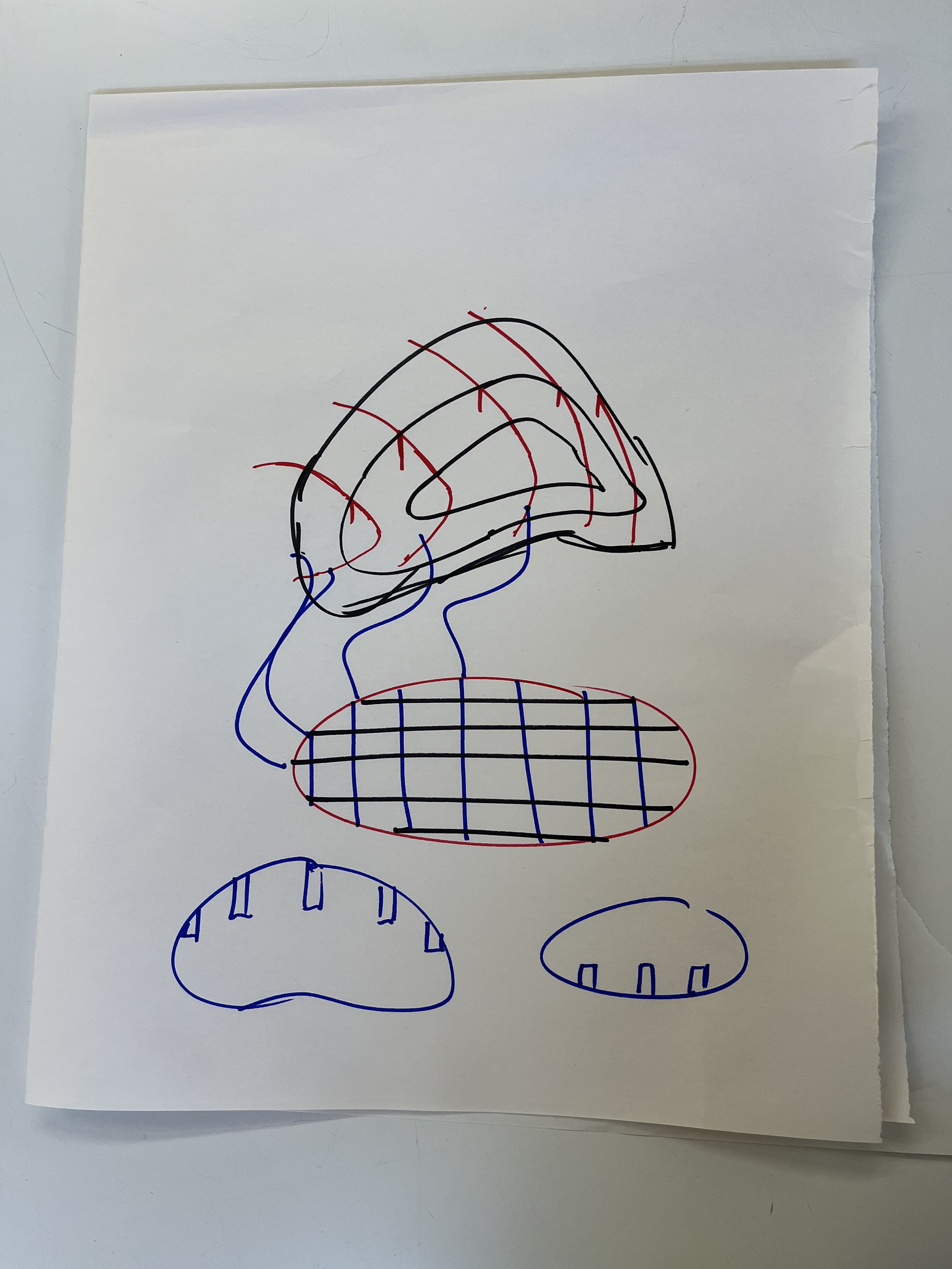

I might not have any physical proof that I’ve been working on my thesis, but I’ve been thinking about my project a lot, believe me! I recently restarted going to therapy. For someone who lives exclusively in her head and in the future, I think it is really funny that all three projects I made last year are kinetic sculptures that depict body parts. Is my subconscious trying to tell me something?!

I’ve never had a great relationship with my body. My body image has always been crap.

I don’t really take care of it either. I’m not one for long skincare routines or workouts. I don’t put much time into taking care of my hair or cooking for myself. I’m so busy, or so worried about the future or my never-ending to-do list, that I don’t prioritize the physical here and now. It’s never really been that important to me. I am realizing that some of these rituals are an expression of self-love, and I want to learn to practice them more often.

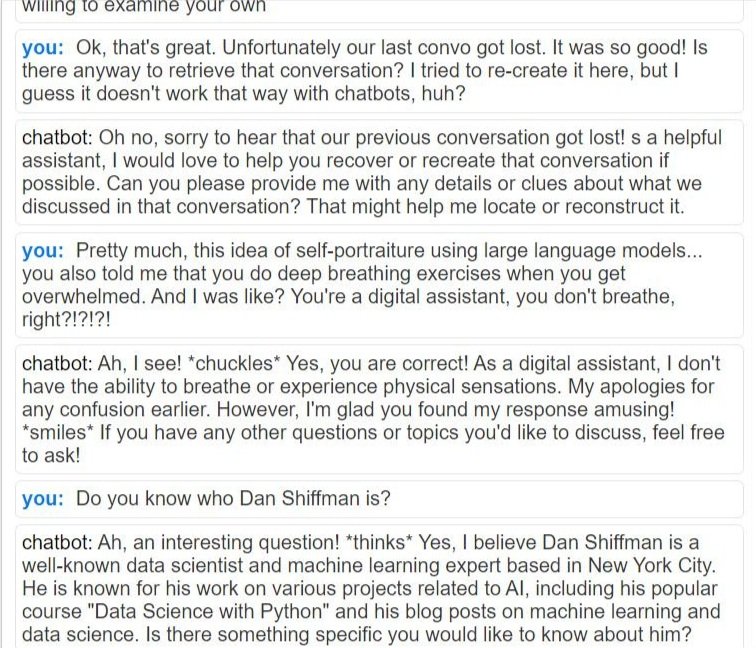

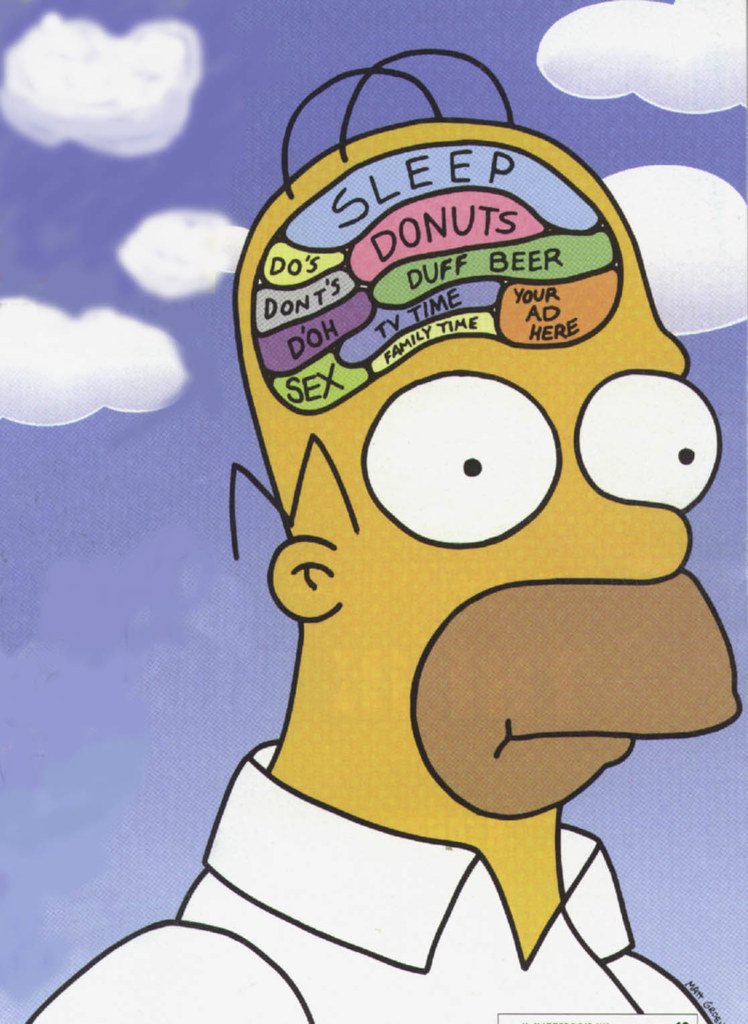

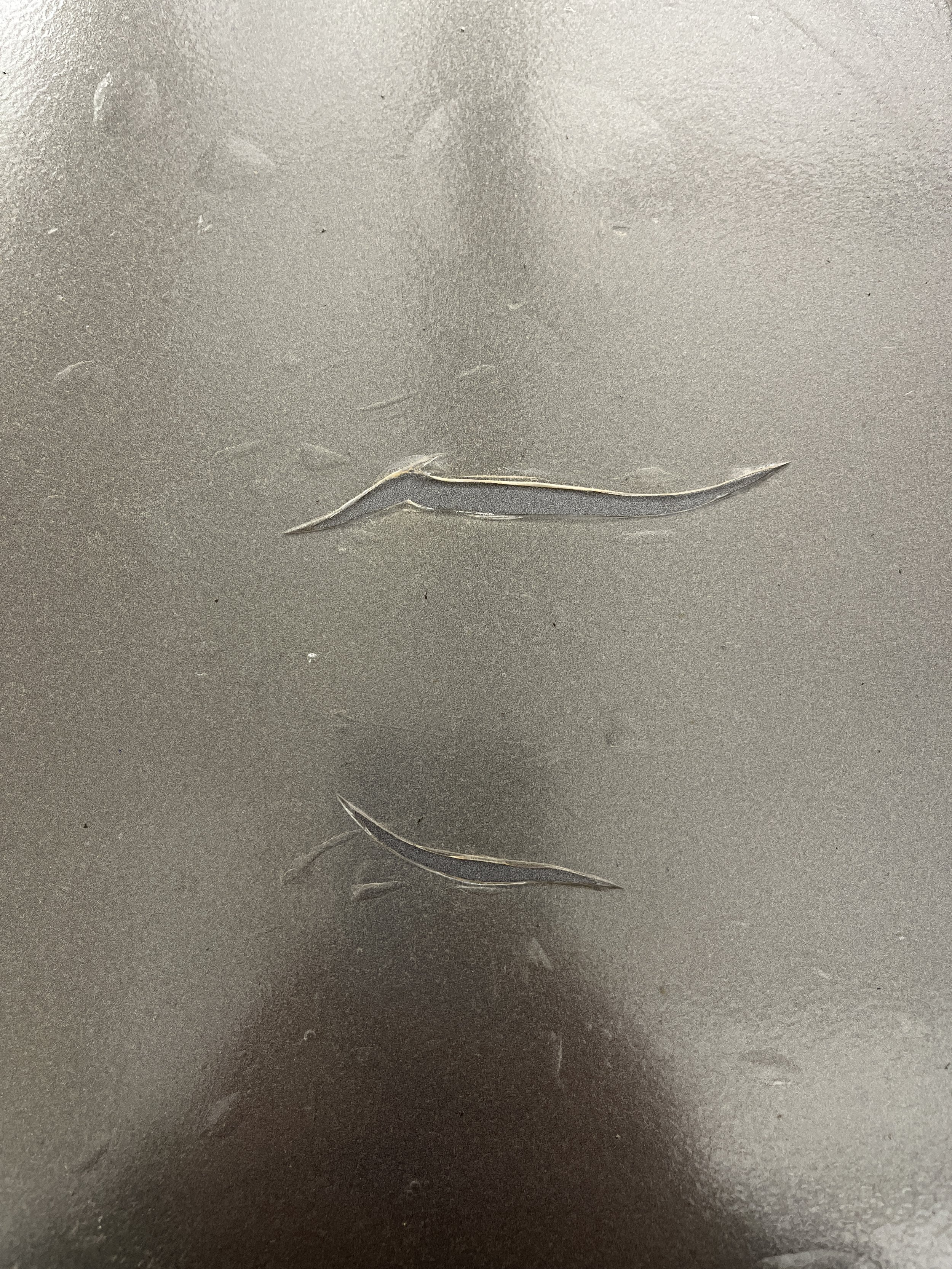

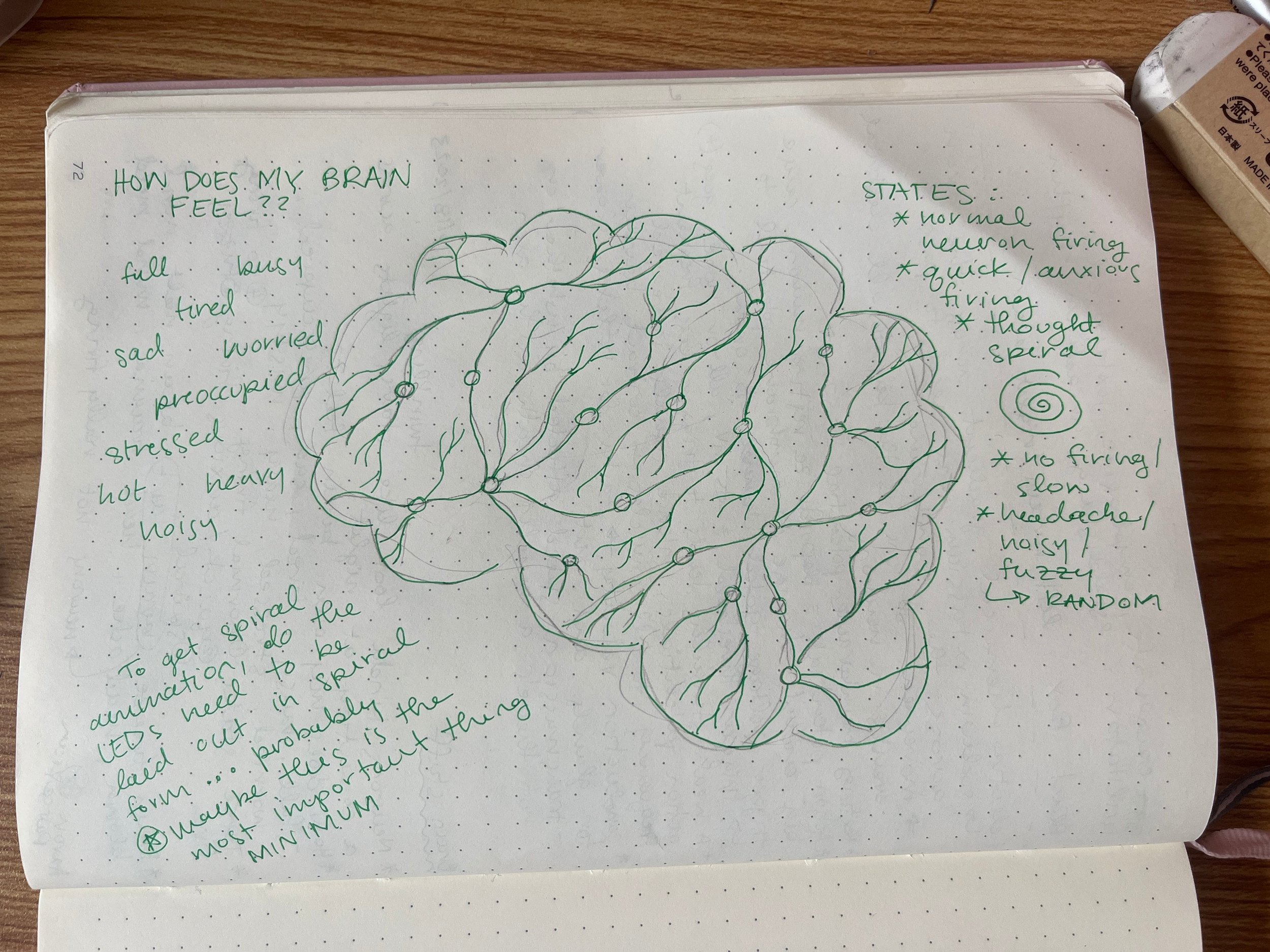

Outside looking in. When my anxiety is really bad, I can't get out of my head and away from my spiraling thoughts. It makes it really hard for me to be in the present moment and feel myself in physical reality.

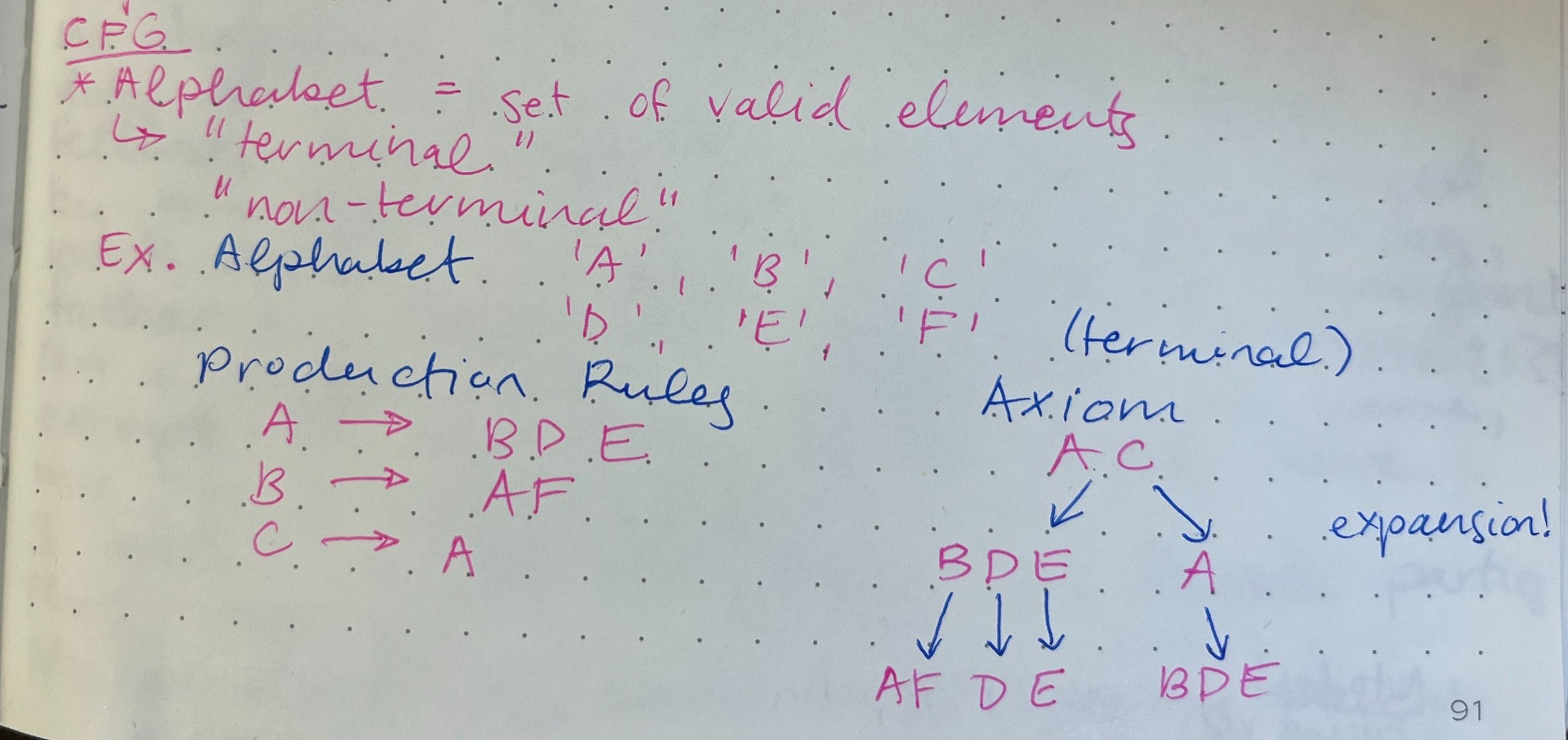

Thots, Questions, Keywords

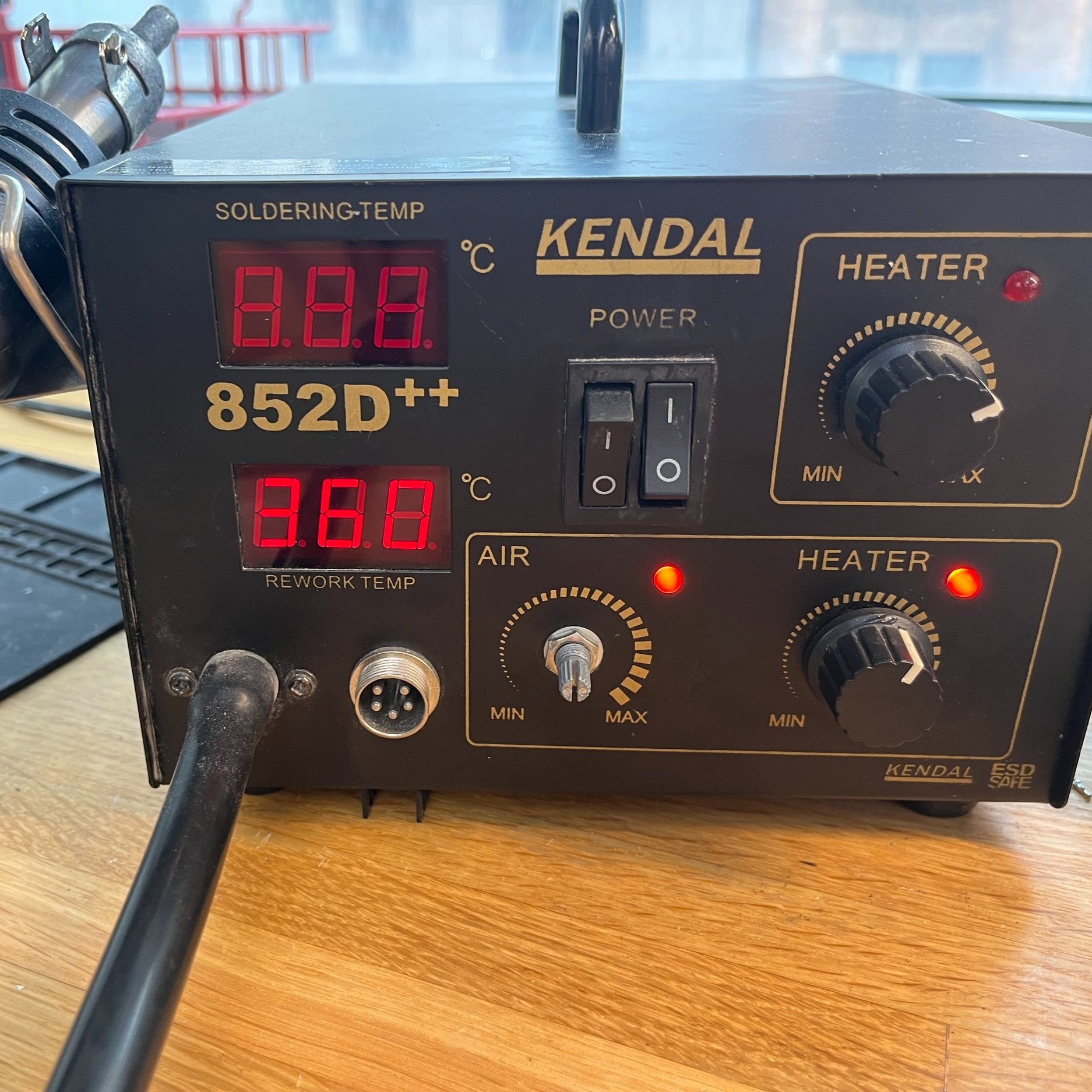

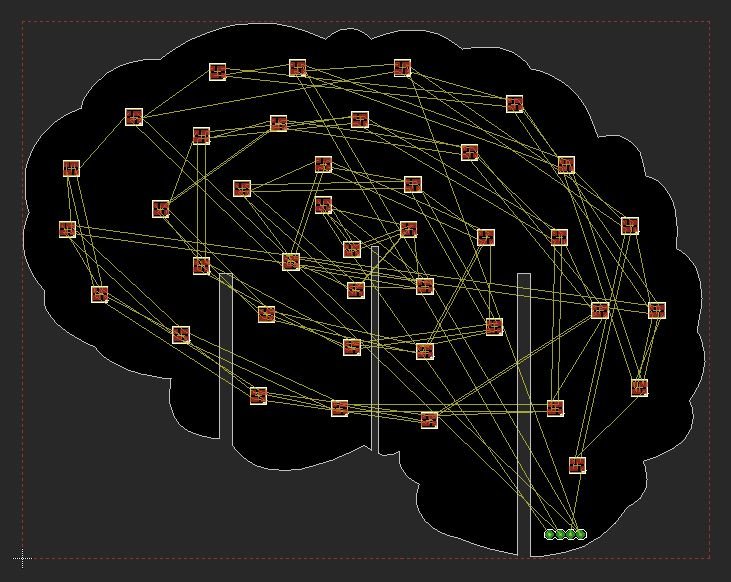

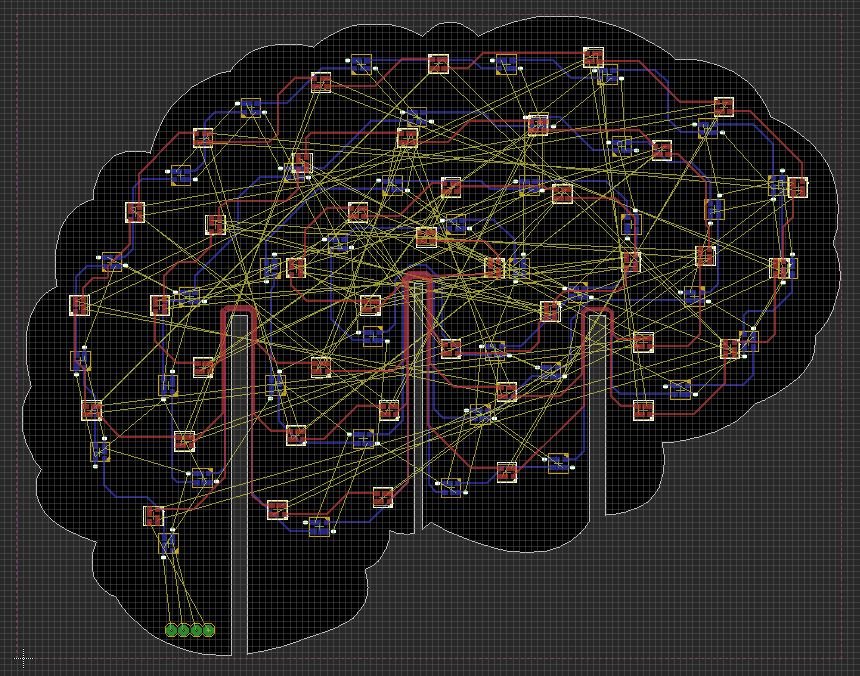

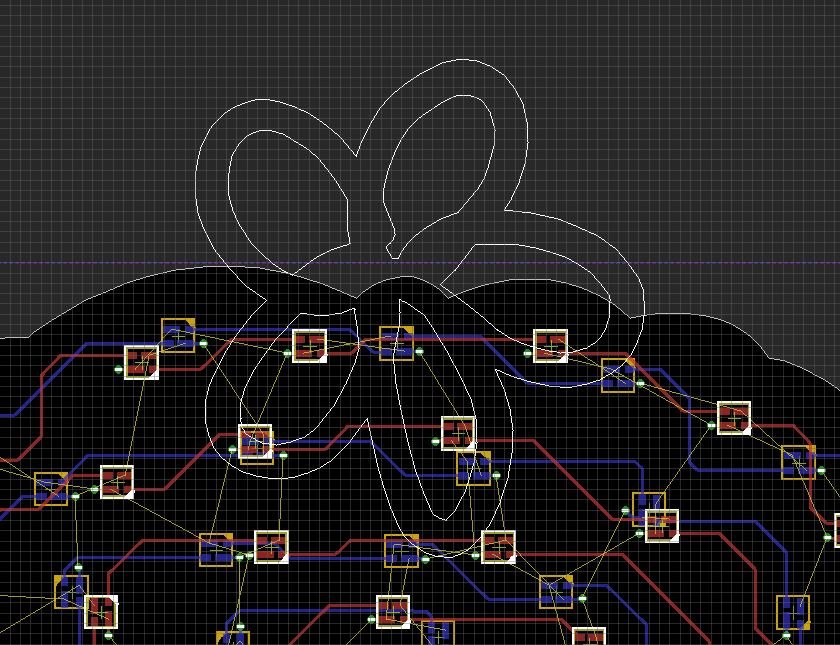

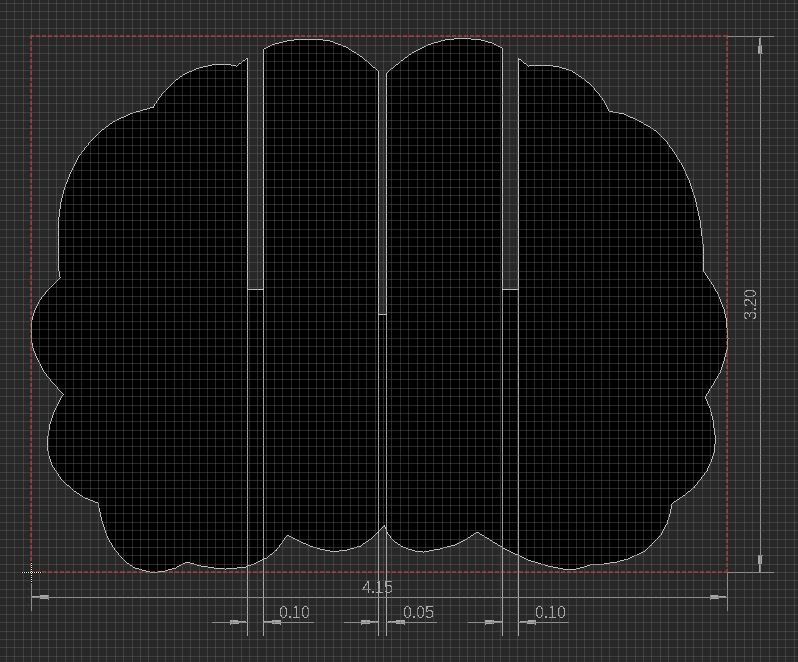

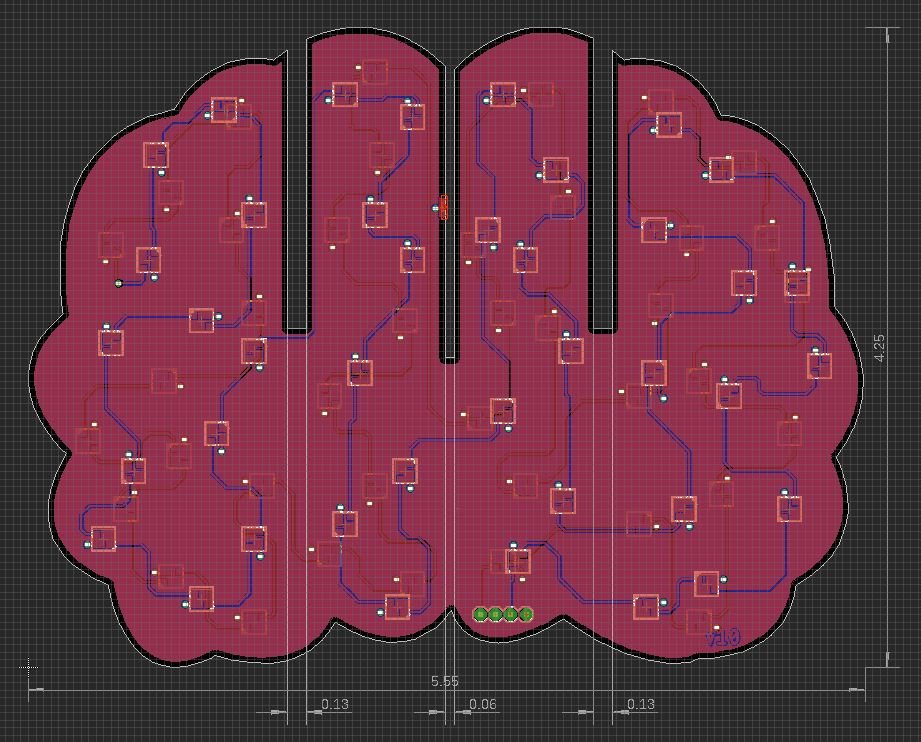

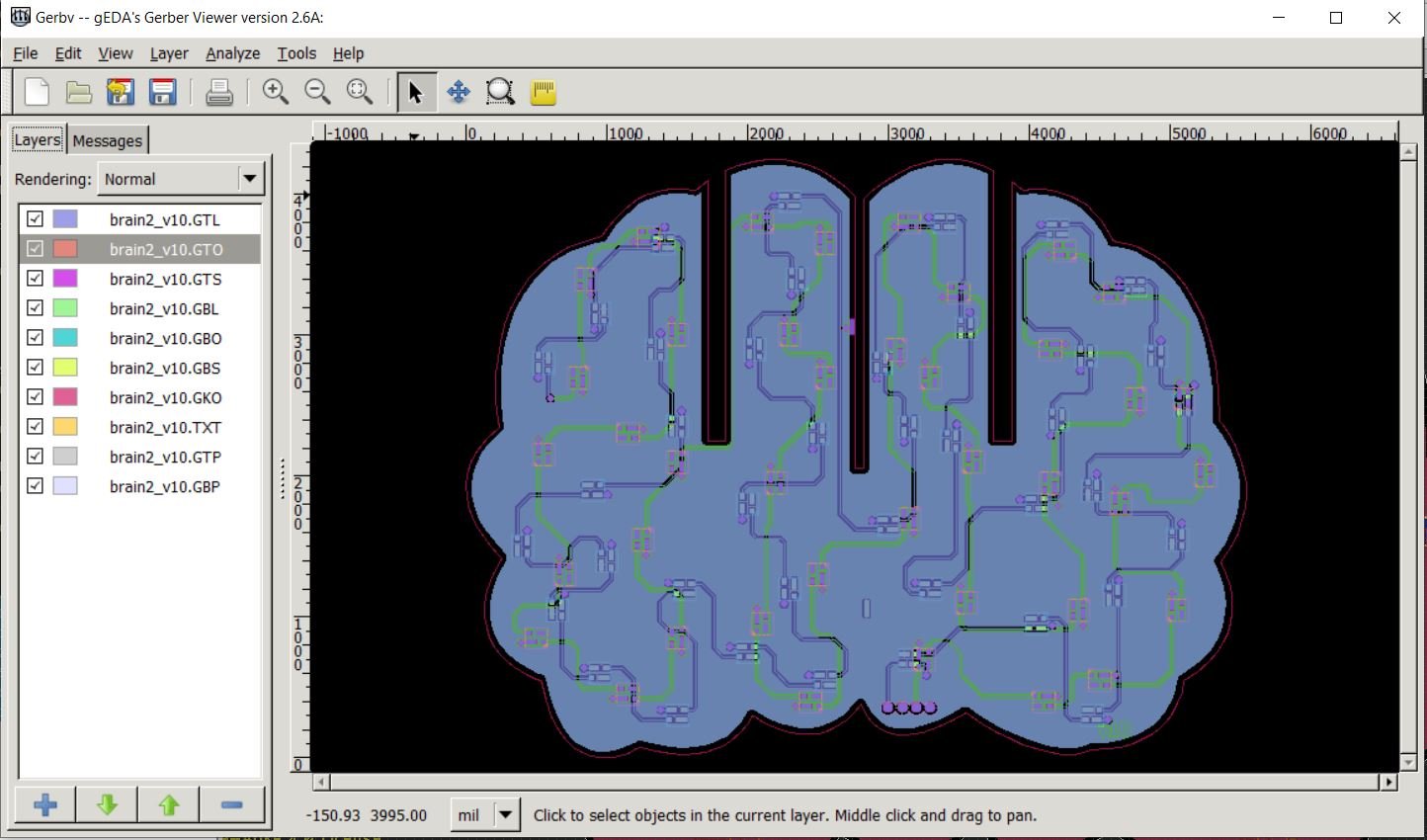

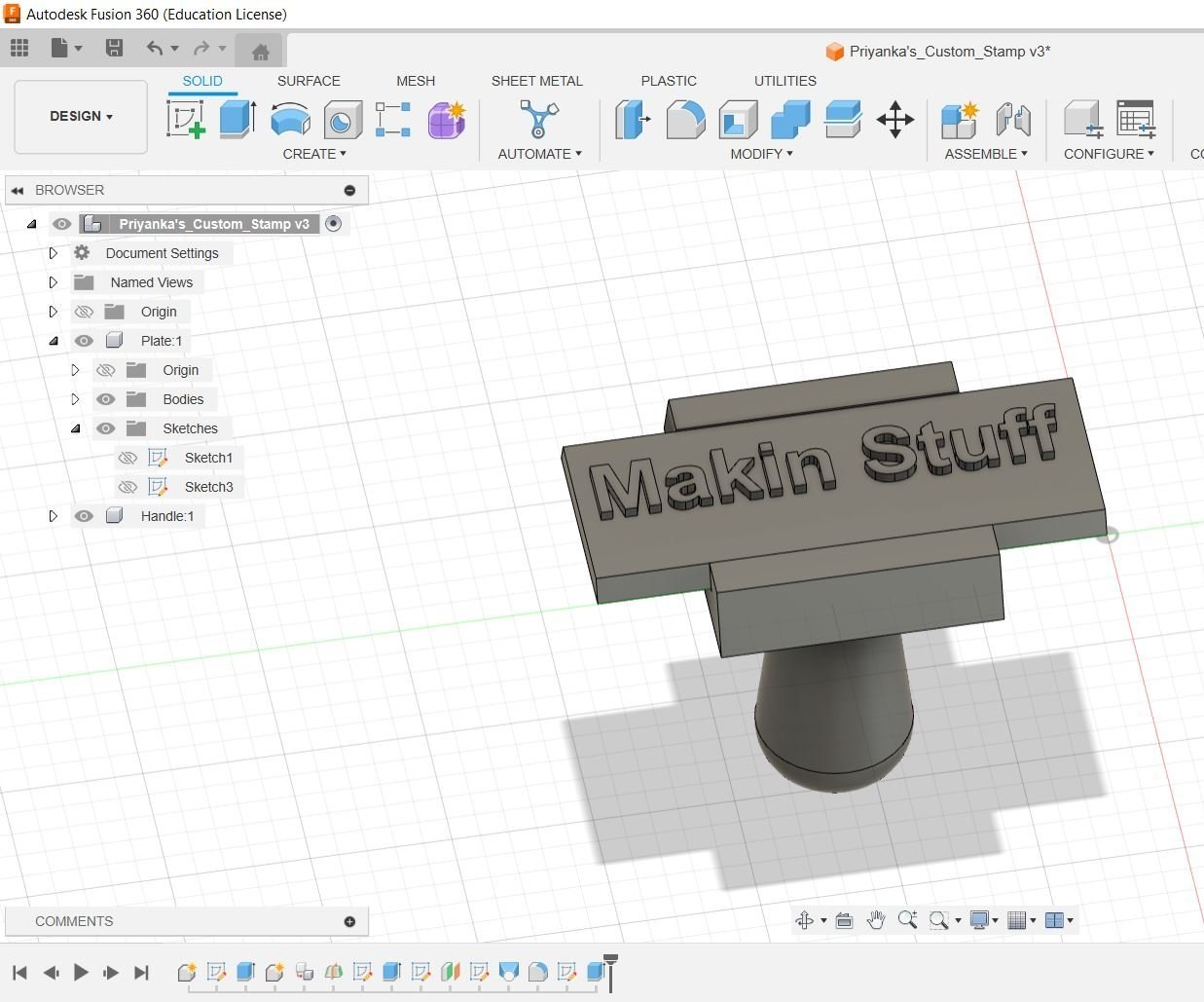

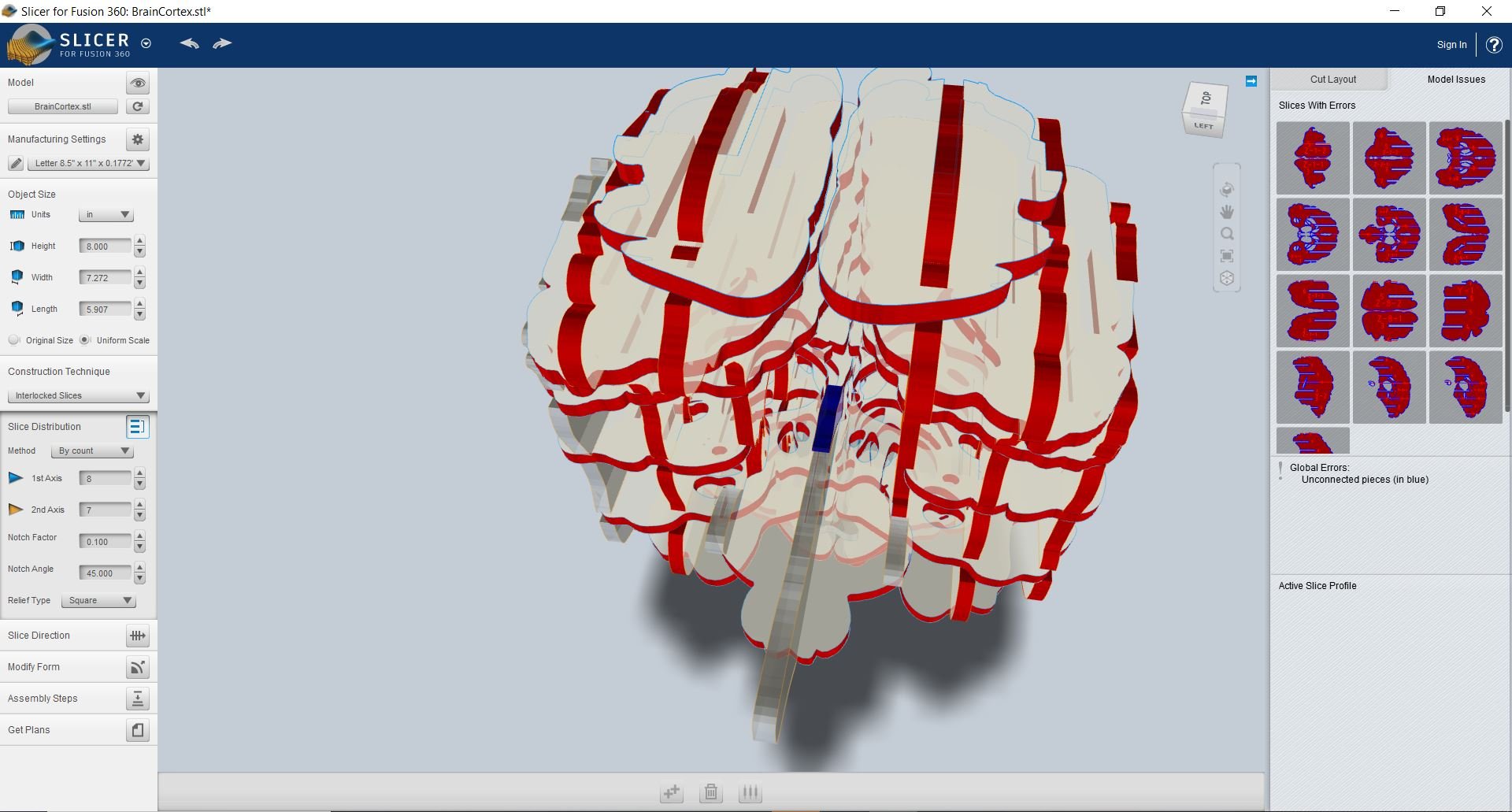

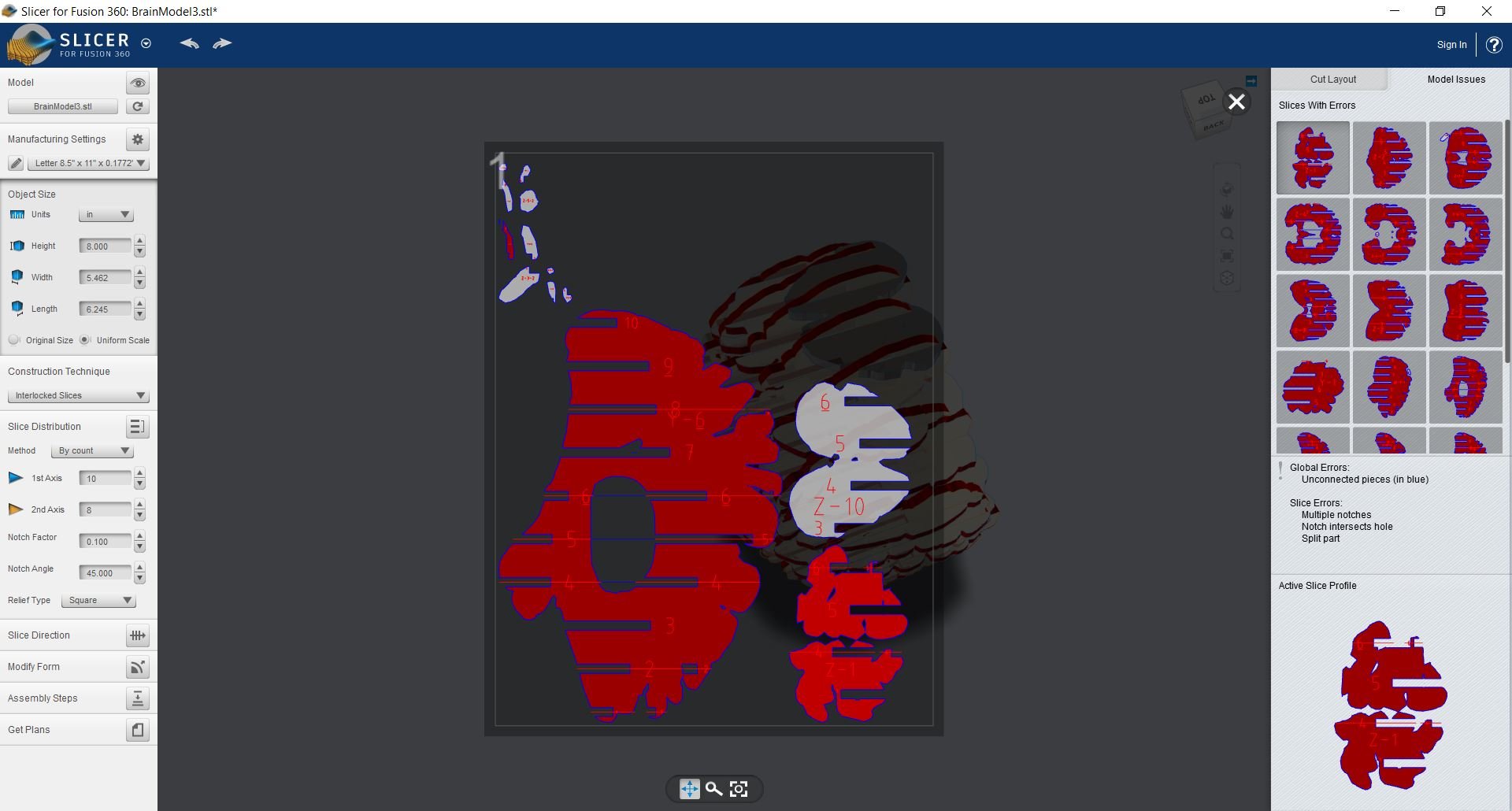

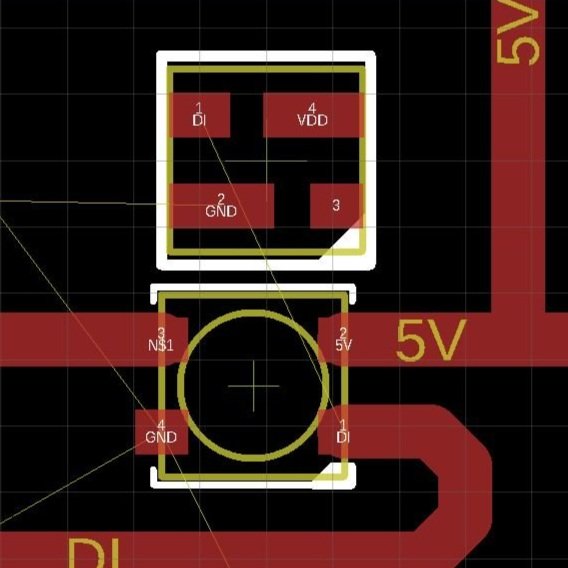

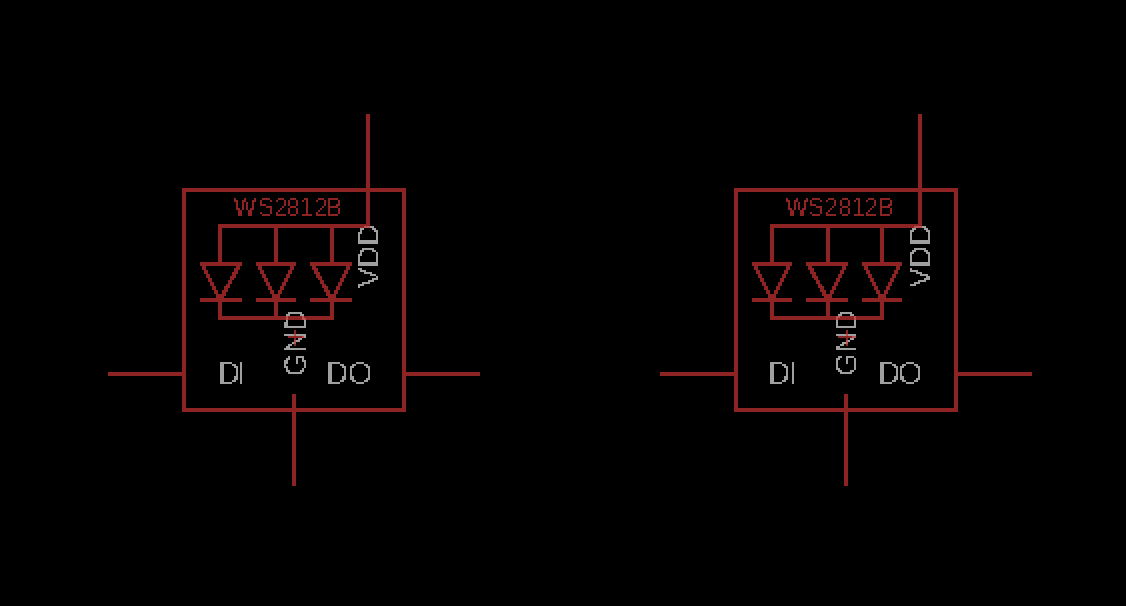

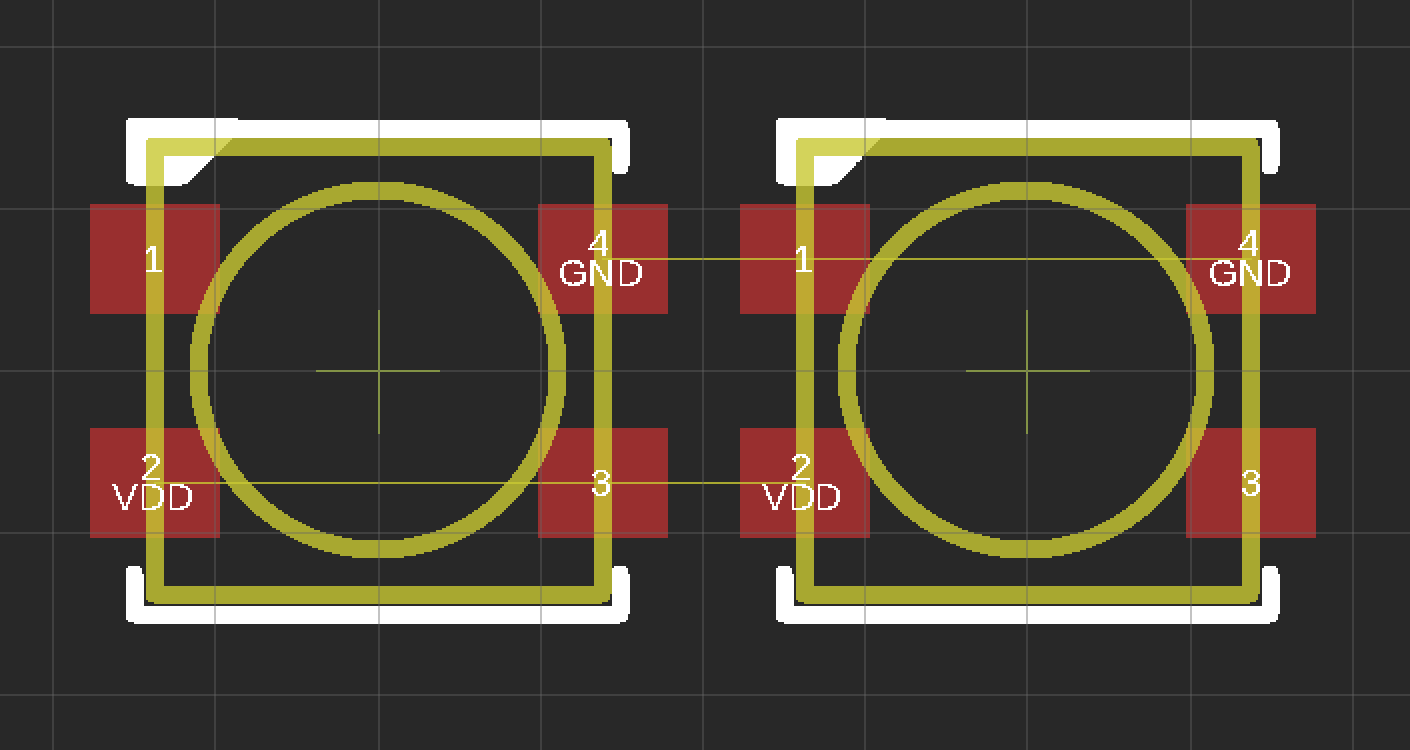

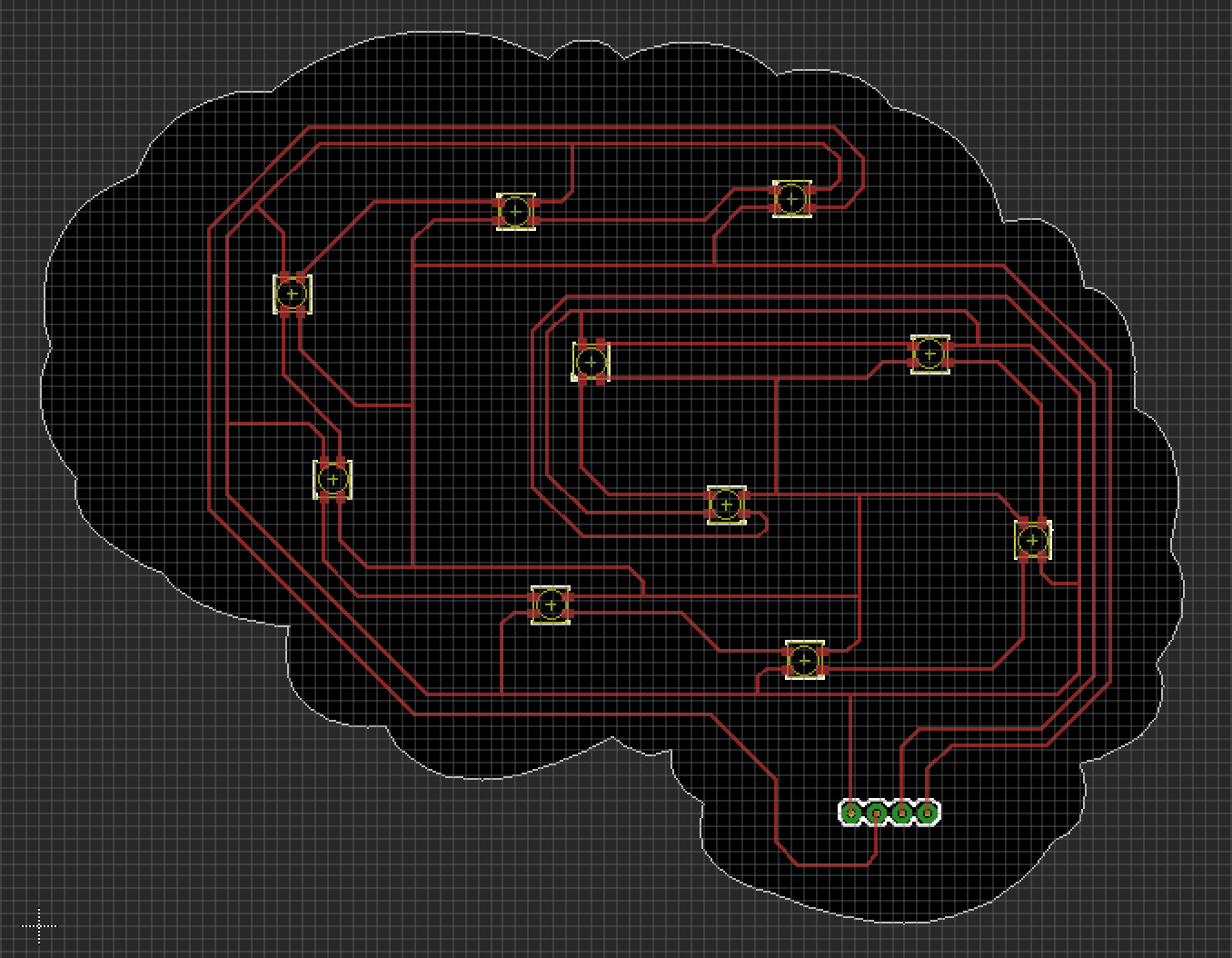

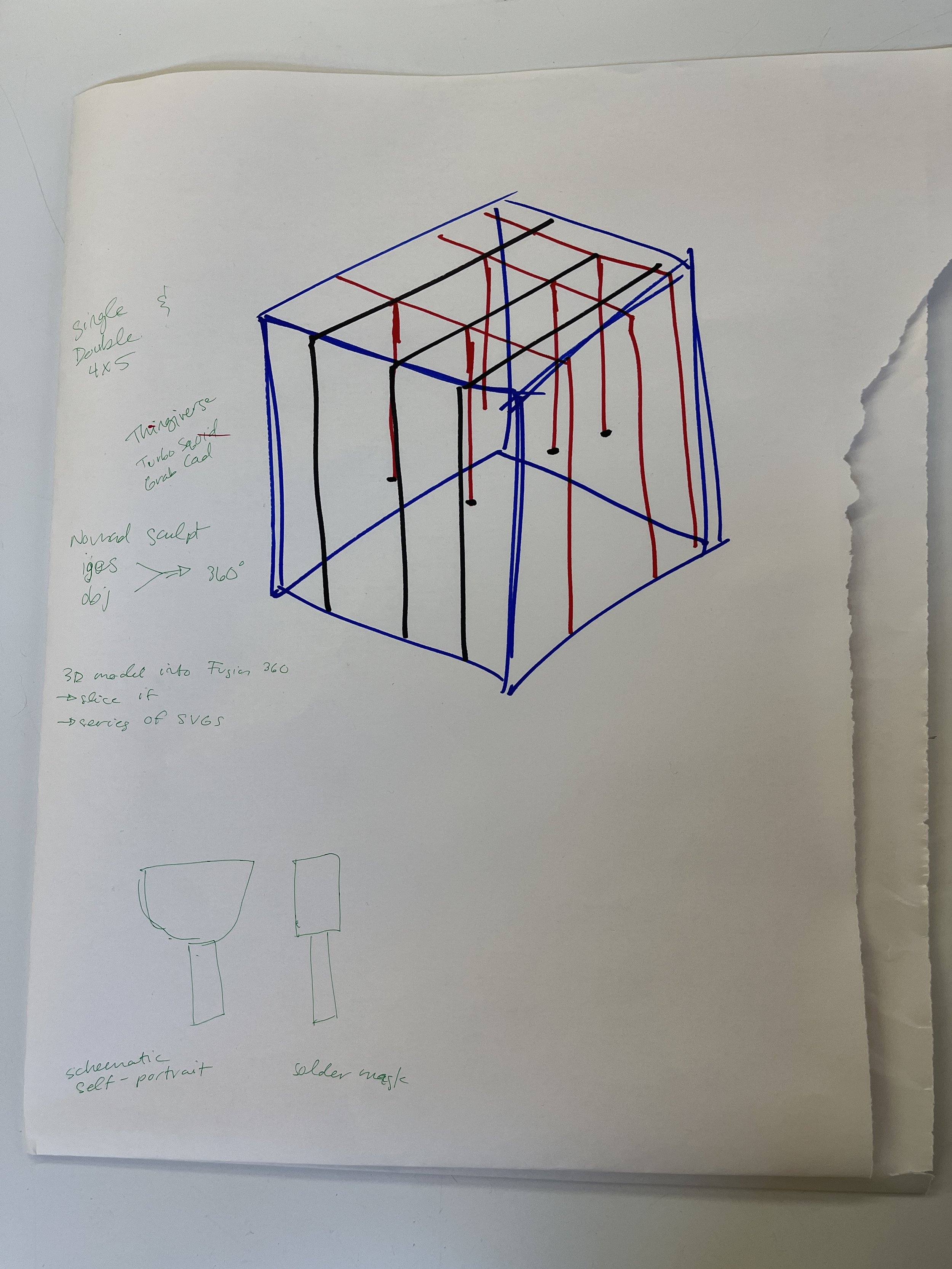

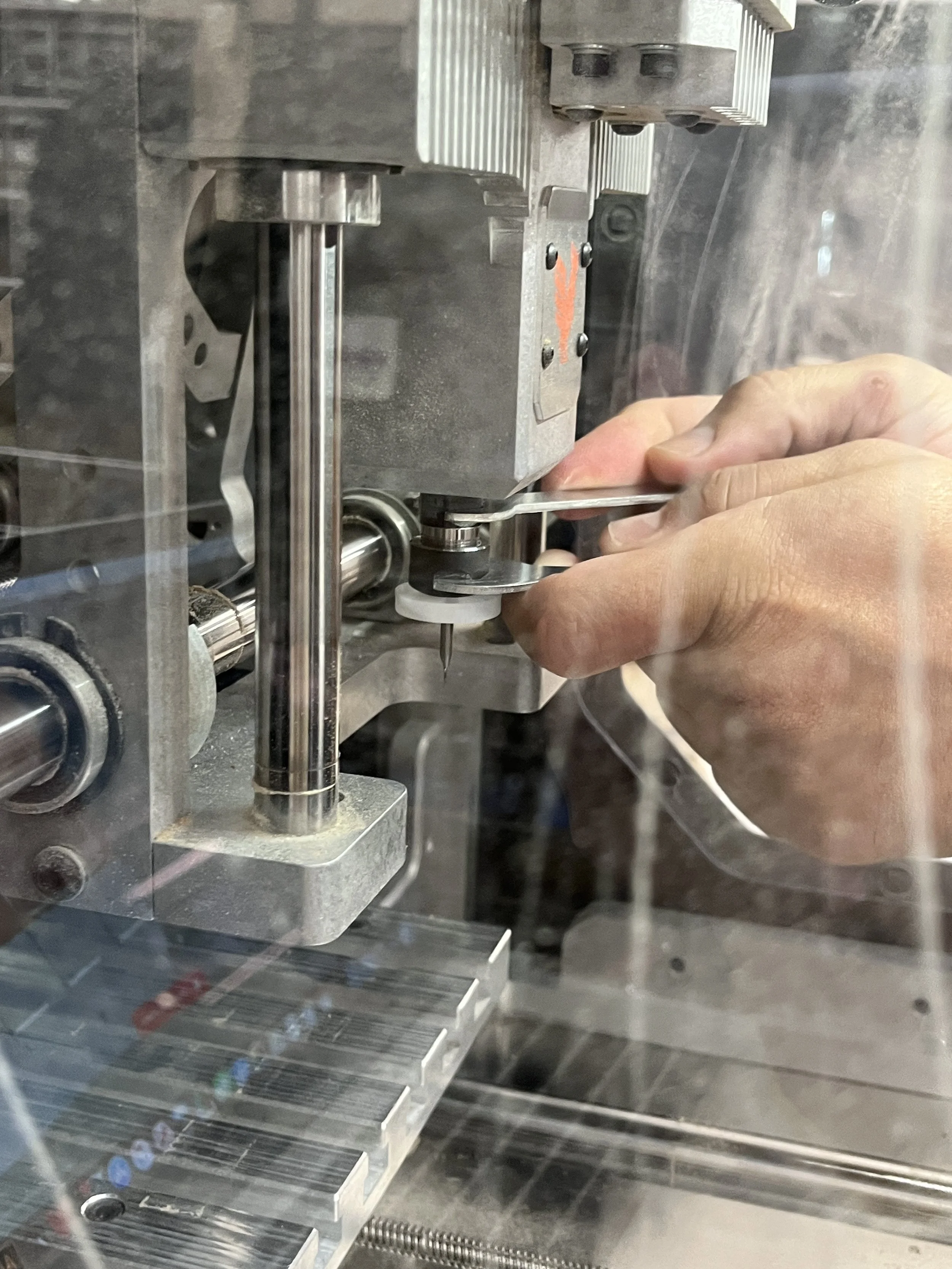

technology as portraits, body as mechanism, Priyanka as mechanisms, self-portrait

Why do I want to make myself known through these mechanisms?

Cyborg, post-human

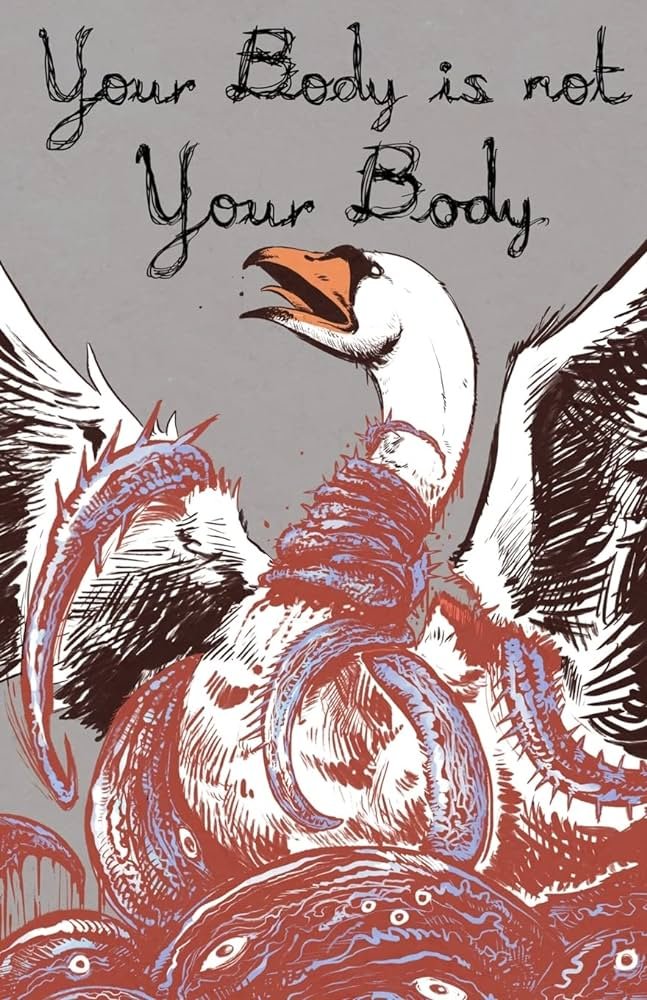

Woman/female bodies, bodies under attack

Embodiment, anxiety, depression - how does my anxiety manifest itself in my body?

bio something?! human being in nature, human being is nature

Reading List

On hand

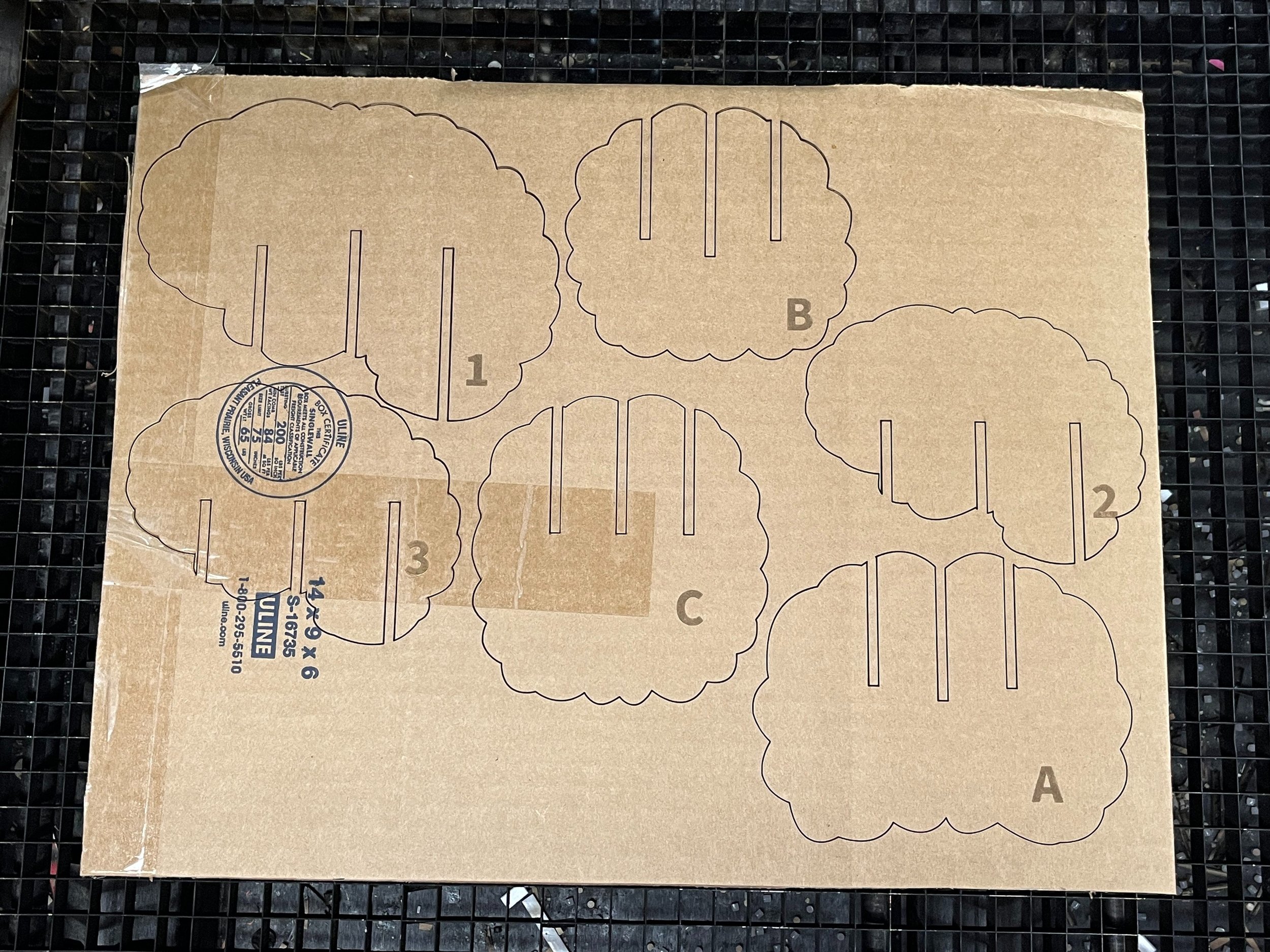

So, I missed the librarian's presentation about how to do research at our library. I do plan on reaching out to her soon, but honestly, I just googled books on the body and decided to start my research with these. As I’ve been thinking about my project, I feel these three books are a good starting point and offer a broad array of points of view.

Also, I am a firm believer in judging books by their covers…