Notes

A Markov chain is a stochastic model describing a sequence of possible events in which the probability of each event depends only on the state attained in the previous event

Can simulate “stickiness” by modifying the transition probabilities, e.g. raising the probability that a state repeats itself
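To make the “stickiness” idea concrete, here is a minimal sketch in plain JavaScript. The two weather states, the `stickiness` parameter, and the helper names (`makeTransitions`, `nextState`, `simulate`) are all made up for illustration and are not from the class examples.

```javascript
// Hypothetical two-state chain; state names and probabilities are illustrative only.
function makeTransitions(stickiness) {
  // stickiness = probability of staying in the current state
  return {
    sunny: { sunny: stickiness, rainy: 1 - stickiness },
    rainy: { rainy: stickiness, sunny: 1 - stickiness },
  };
}

// Pick the next state by walking the cumulative probabilities of the current state.
function nextState(transitions, current, rng = Math.random) {
  const roll = rng();
  const options = Object.entries(transitions[current]);
  let cumulative = 0;
  for (const [state, probability] of options) {
    cumulative += probability;
    if (roll < cumulative) return state;
  }
  // Fallback in case floating-point rounding leaves the total just under 1.
  return options[options.length - 1][0];
}

// Generate a sequence of steps + 1 states, each depending only on the previous one.
function simulate(transitions, start, steps, rng = Math.random) {
  const sequence = [start];
  for (let i = 0; i < steps; i++) {
    sequence.push(nextState(transitions, sequence[sequence.length - 1], rng));
  }
  return sequence;
}

console.log(simulate(makeTransitions(0.5), "sunny", 15).join(" "));
console.log(simulate(makeTransitions(0.9), "sunny", 15).join(" "));
```

With a stickiness of 0.9 the chain tends to produce long runs of the same state, while 0.5 switches about as often as a coin flip.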

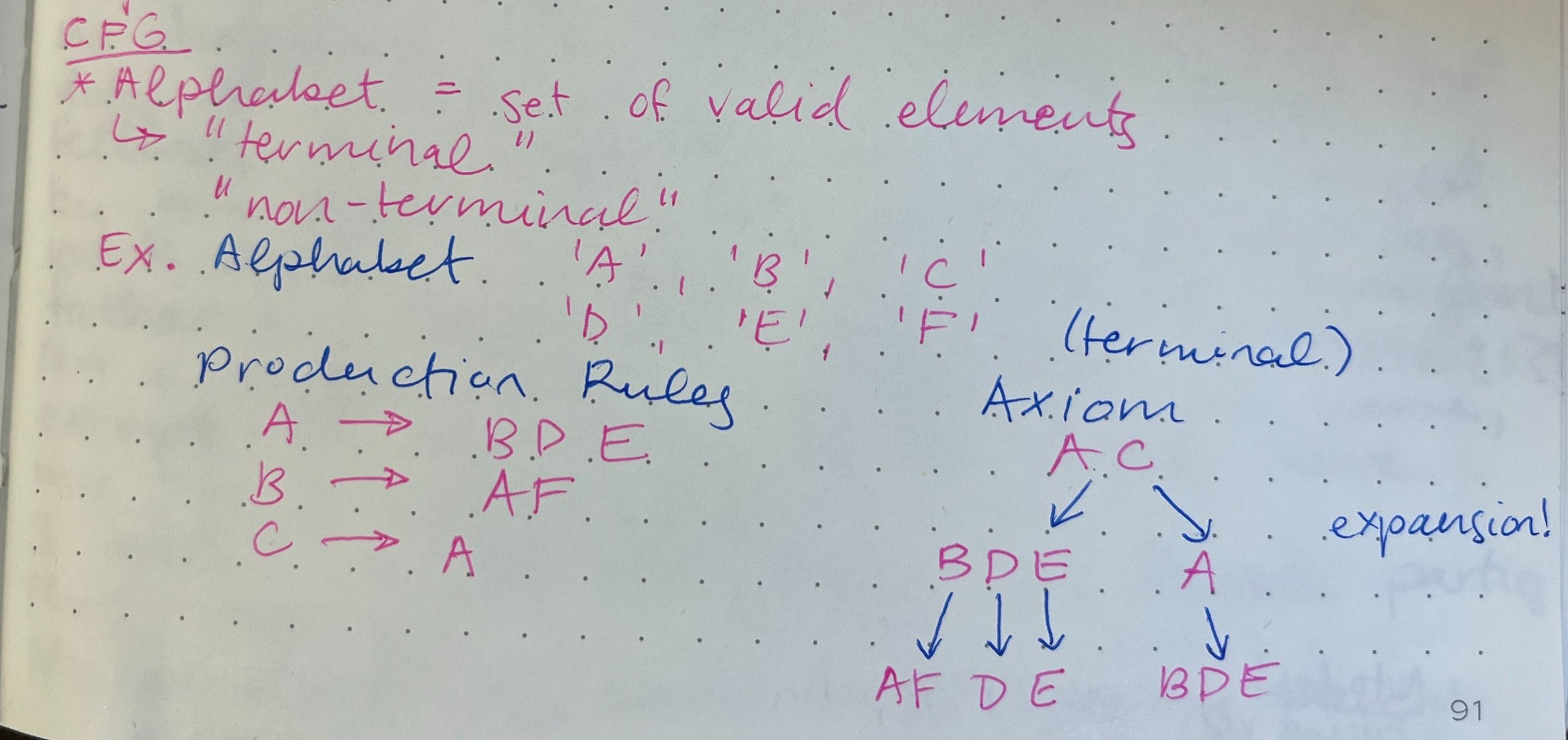

Grammar = “language of languages”. Provides a framework to describe the syntax and structure of any language

Context-free grammar = rules don’t depend on surrounding context

Vocab: alphabet, terminal and non-terminal symbols, production rules, axiom
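As a rough illustration of that vocabulary, here is a toy context-free grammar in plain JavaScript (not Tracery). The grammar itself, the symbol names, and the `expand` helper are assumptions made for this sketch: the keys of `rules` are the non-terminal symbols, any word without a rule is a terminal, each array entry is a production rule, and `"S"` plays the role of the axiom.

```javascript
// Toy grammar; every symbol and word here is made up for illustration.
const rules = {
  S: [["NP", "VP"]],            // axiom: a sentence is a noun phrase plus a verb phrase
  NP: [["the", "N"]],
  VP: [["V", "NP"], ["V"]],
  N: [["cat"], ["dog"]],
  V: [["sees"], ["sleeps"]],
};

// Recursively rewrite a symbol until only terminal symbols remain.
function expand(symbol, grammarRules, rng = Math.random) {
  // A symbol with no production rule is a terminal: emit it unchanged.
  if (!Object.prototype.hasOwnProperty.call(grammarRules, symbol)) {
    return [symbol];
  }
  const options = grammarRules[symbol];
  const production = options[Math.floor(rng() * options.length)];
  return production.flatMap((part) => expand(part, grammarRules, rng));
}

console.log(expand("S", rules).join(" "));
```

Each rule is applied the same way no matter which symbols sit next to it, which is what makes the grammar context-free.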

n-gram = contiguous sequence of n characters or words

Used to reconstruct or generate a text that is statistically similar to the original text

Frequently used in natural language processing and text analysis

The unit of an n-gram (character or word) is called its level; its length (n) is called its order

A Markov chain returns less nonsense when the n-gram is longer: the output stays closer to the original text
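The effect of the order can be sketched with a small character-level generator in plain JavaScript. This is a simplified stand-in rather than the class example: the function names (`buildModel`, `generate`) and the sample sentence are made up, and it assumes the order is at least 1.

```javascript
// Map each n-gram of `order` characters to the list of characters that follow it.
function buildModel(text, order) {
  const model = new Map();
  for (let i = 0; i + order < text.length; i++) {
    const gram = text.slice(i, i + order);
    const next = text[i + order];
    if (!model.has(gram)) model.set(gram, []);
    model.get(gram).push(next); // duplicates keep the original frequencies
  }
  return model;
}

// Start from the first n-gram and keep appending a randomly chosen follower.
function generate(text, order, length, rng = Math.random) {
  const model = buildModel(text, order);
  let output = text.slice(0, order);
  while (output.length < length) {
    const gram = output.slice(-order);
    const choices = model.get(gram);
    if (!choices) break; // dead end: this n-gram only appears at the very end
    output += choices[Math.floor(rng() * choices.length)];
  }
  return output;
}

// Made-up sample text, just to compare a short order with a longer one.
const sample = "the theremin is there then the thing thaws";
console.log(generate(sample, 1, 40));
console.log(generate(sample, 4, 40));
```

At order 1 the output is mostly letter soup, while at order 4 each step has so few possible followers that whole words from the source tend to reappear.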

Tracery = generative text tool for creating text that is algorithmically combinatorial and surprising while still letting the authorial “voice” come through in the finished text

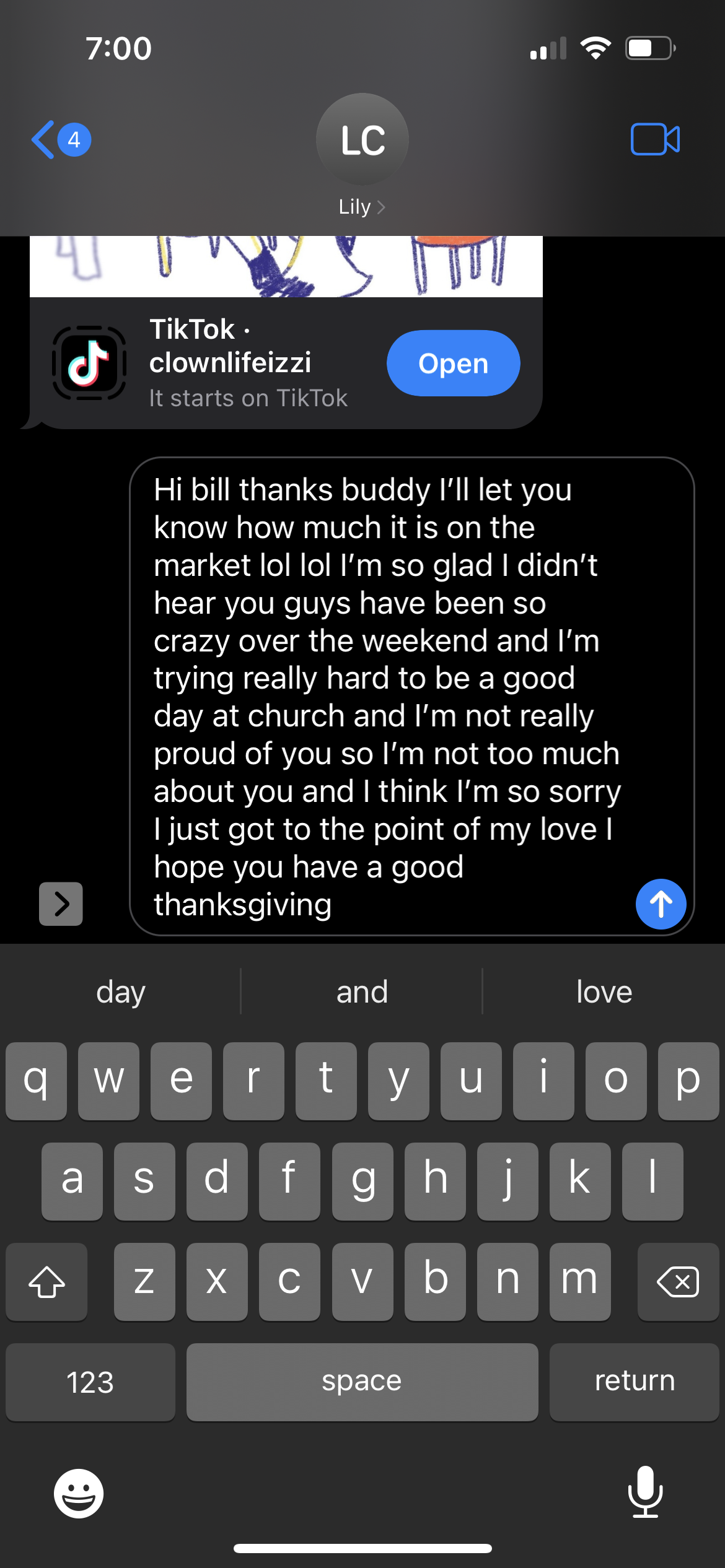

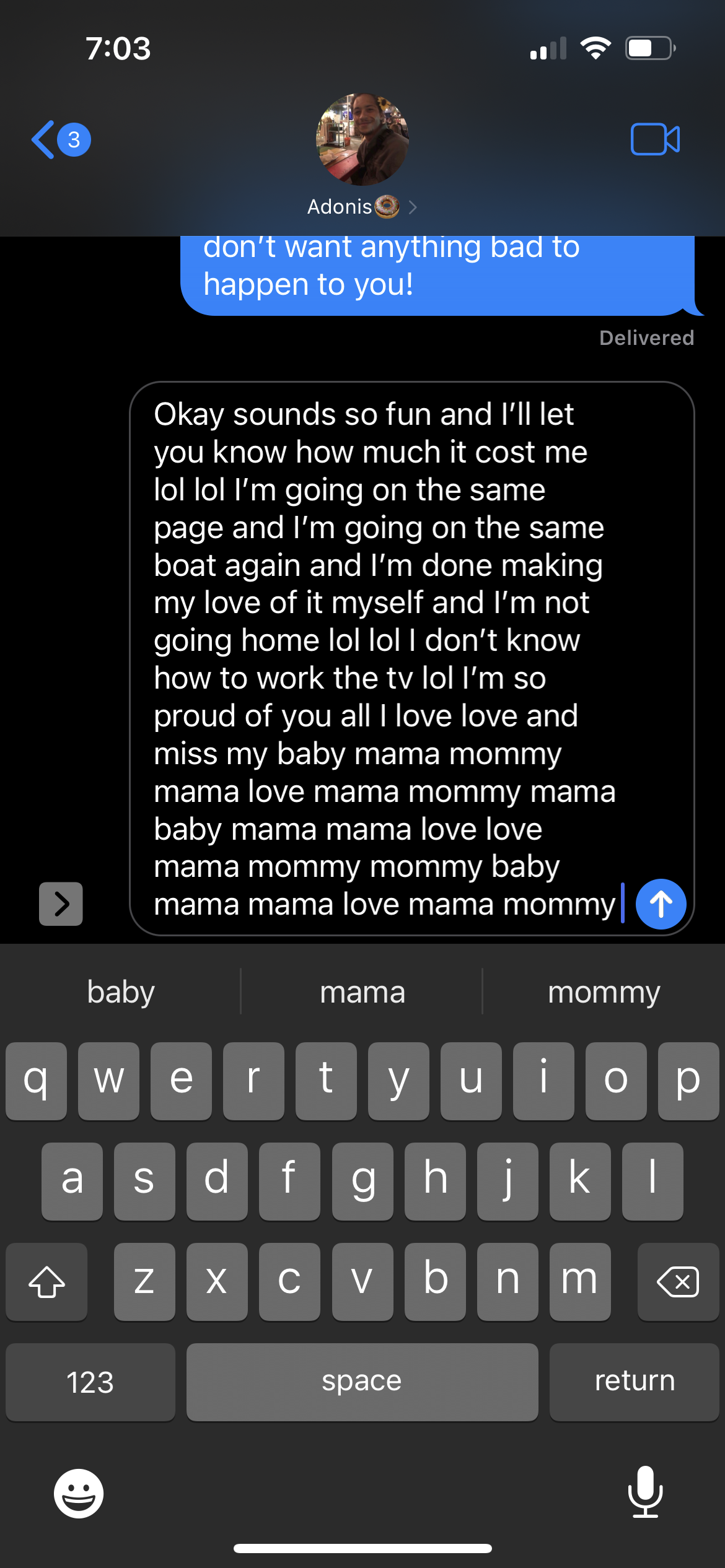

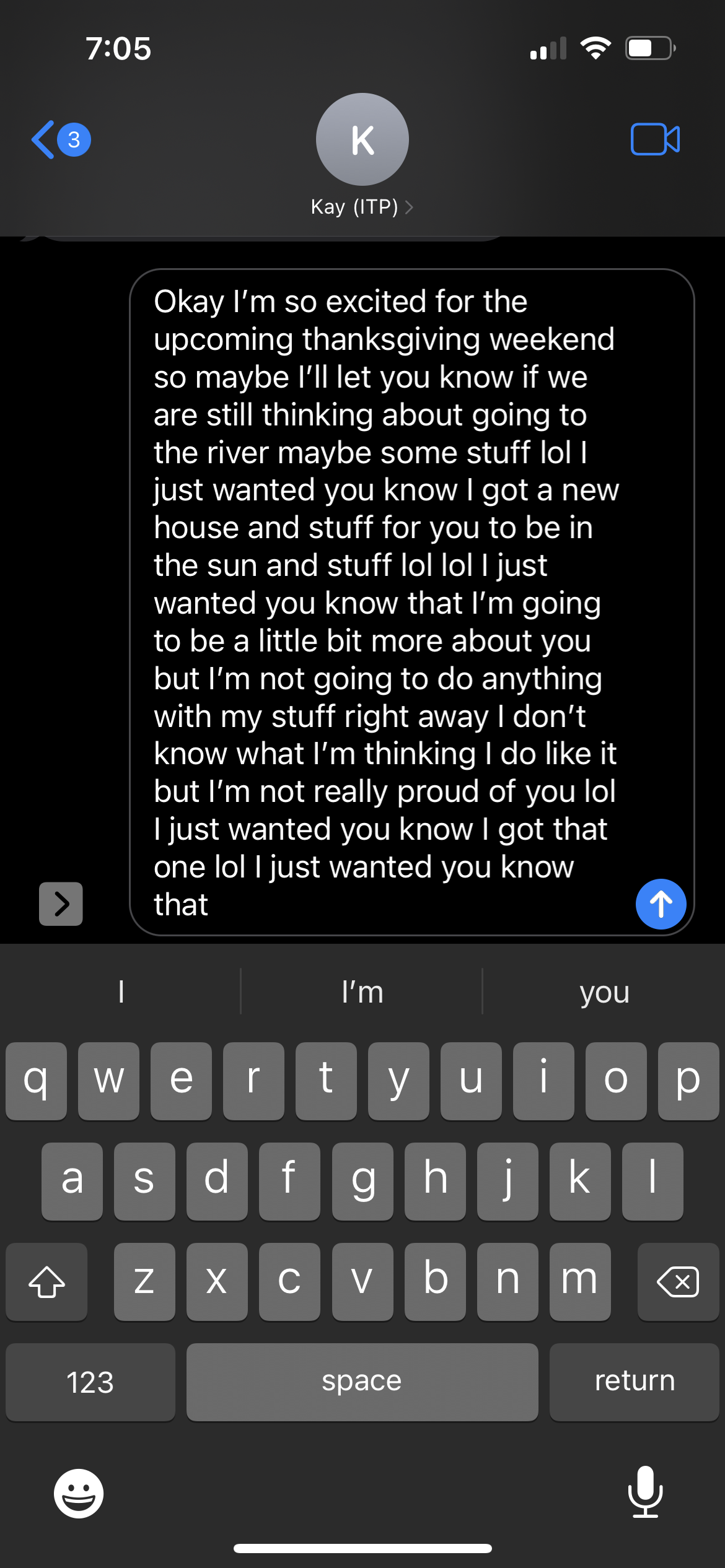

Predictive Text

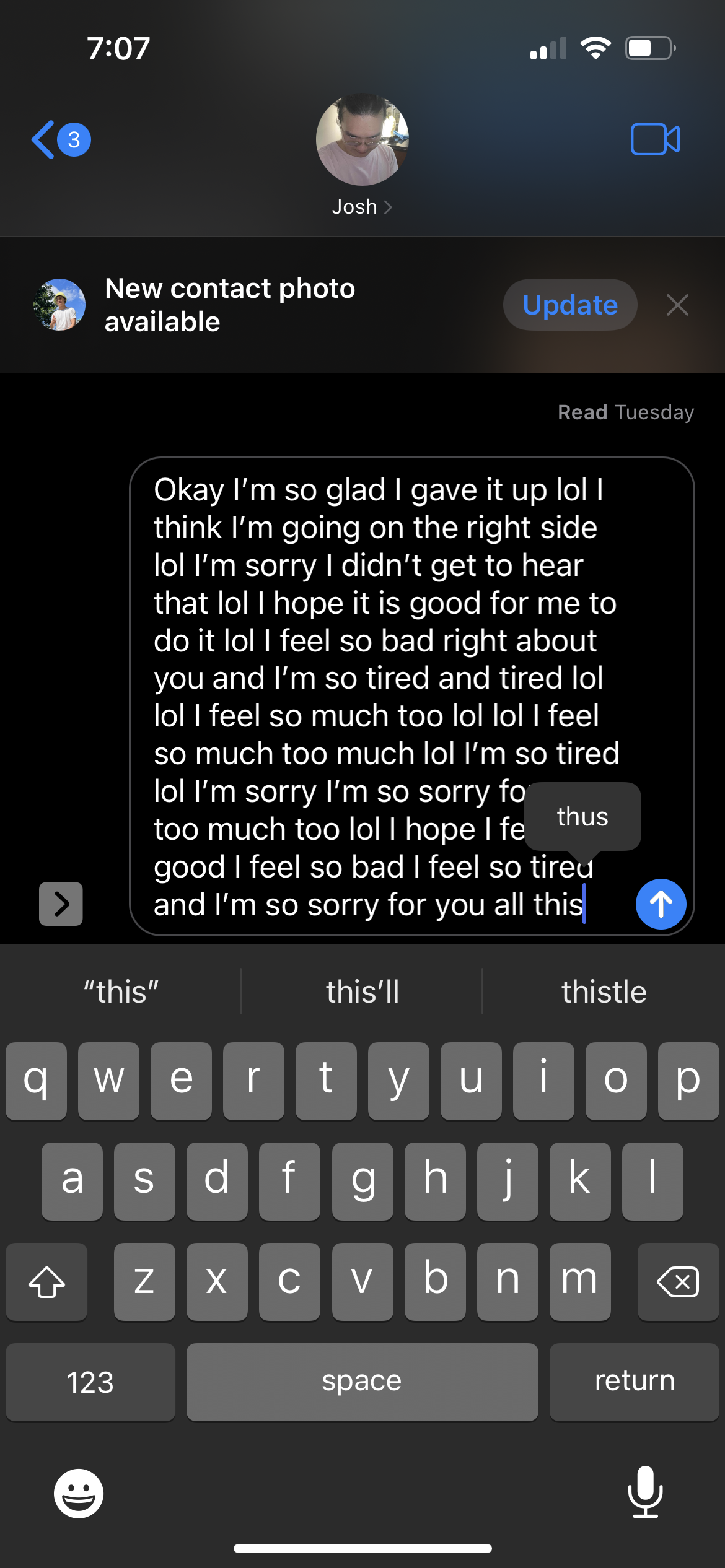

While I was thinking about natural language processing and predictive text, one of my friends made me aware of the predictive text feature in iMessage (a setting I didn’t have turned on). Basically, I tapped the middle button over and over again in different text conversations. The generated messages are below, and they differ from thread to thread. The text to my boyfriend just devolved into “mama” “mommy” over and over. Really weird…

Assignment

It’s that point in the semester where my brain is completely dead and I’m having a hard time thinking of text that I’ve come across that follows a pattern. Yikes! But my last food delivery order of sub-par Pad Thai sparked some inspiration.

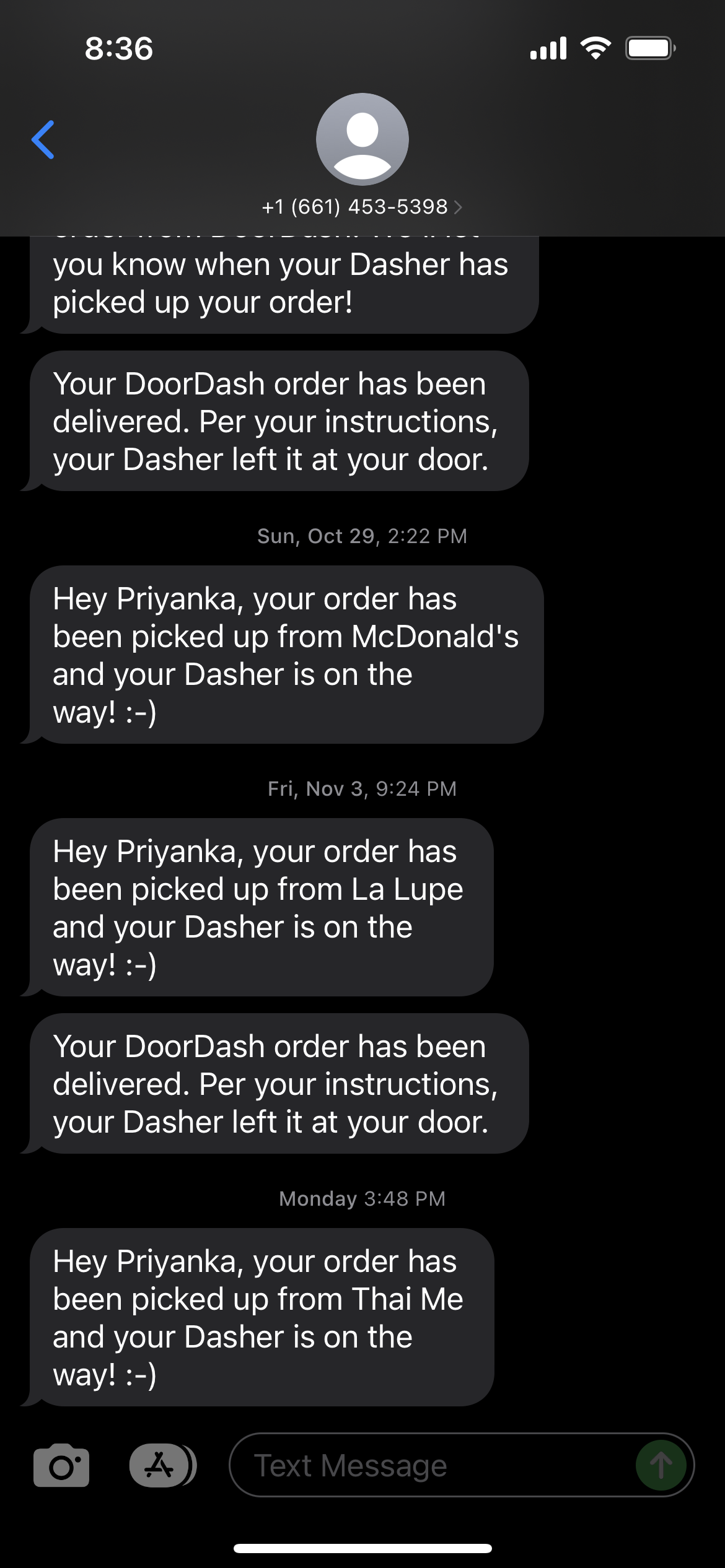

Building off the Coding Train example of a context-free grammar using Tracery, I created a context-free grammar that simulates the super-friendly texts I get from DoorDash. Creating this grammar with the Tracery library was really easy because it essentially works like a mad-lib, choosing an item at random from a word bank that I hard-coded. You can find the p5 sketch for my DoorDash CFG here.
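For anyone curious what the mad-lib mechanic looks like, here is a rough plain-JavaScript stand-in. It does not use the Tracery library and it is not my actual sketch: it only borrows Tracery's `#symbol#` placeholder notation, and the word bank and the `flatten` helper below are made up for illustration.

```javascript
// Hypothetical word bank; these phrases are placeholders, not the ones in my sketch.
const grammar = {
  origin: ["#greeting#! Your order from #restaurant# is #status#."],
  greeting: ["Hi", "Good news"],
  restaurant: ["Thai Place", "Noodle House"],
  status: ["on its way", "arriving soon"],
};

// Replace every #name# placeholder with a random entry from the matching word bank.
function flatten(template, bank, rng = Math.random) {
  return template.replace(/#(\w+)#/g, (match, name) => {
    // Leave unknown placeholders untouched.
    if (!Object.prototype.hasOwnProperty.call(bank, name)) return match;
    const options = bank[name];
    const pick = options[Math.floor(rng() * options.length)];
    // The chosen entry may contain placeholders of its own.
    return flatten(pick, bank, rng);
  });
}

console.log(flatten("#origin#", grammar));
```

Adding more variety is just a matter of adding entries to the arrays, which is why this felt so quick to build.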

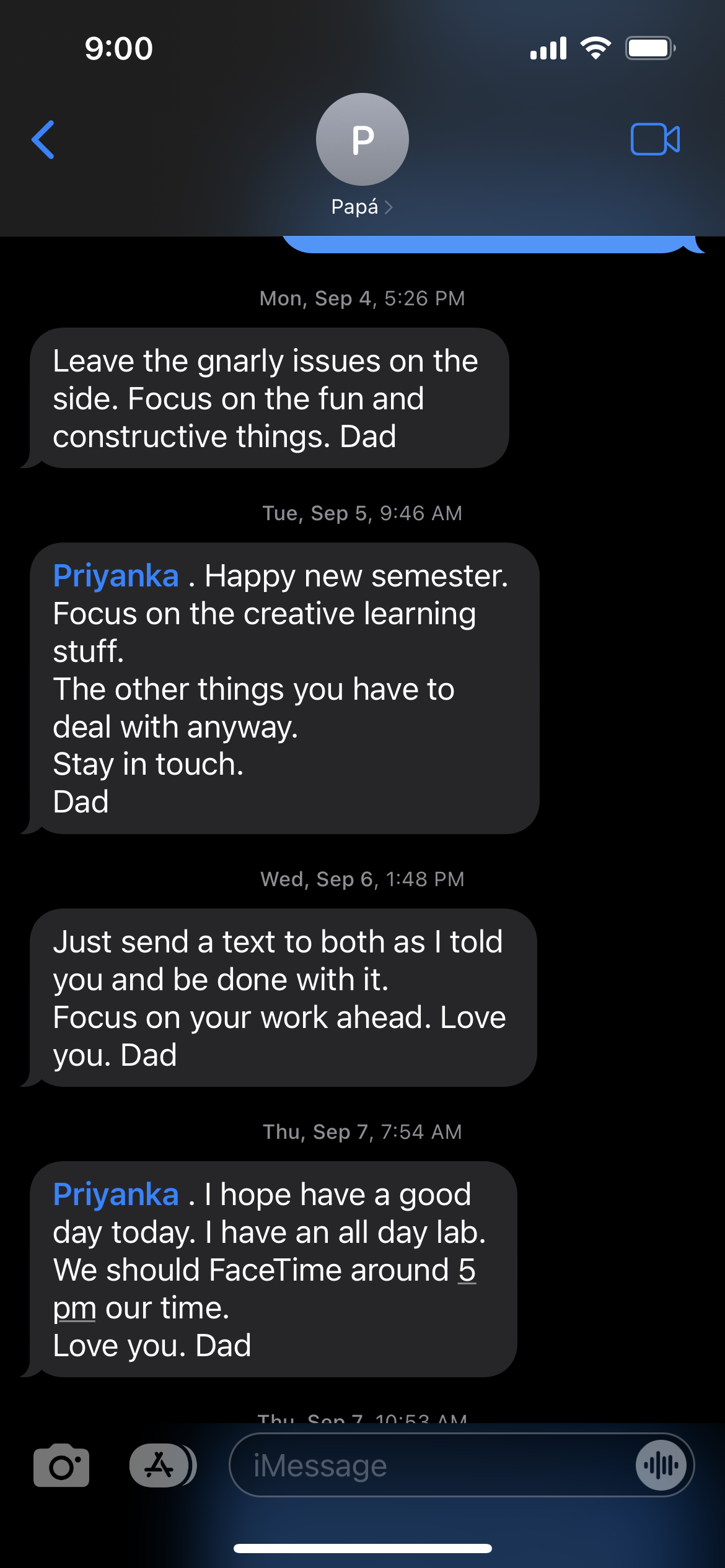

Also, Gracy gave me the genius idea to try generating texts from my dad since we compare dad texts sometimes. My dad is … involved to say the least. Generally, I’ll get reminders, motivations and wisdoms, unsolicited “suggestions”, and the occasional dad-in-the-life update with visuals. I started with the Markov Chain example and put in my dad-text data. This was particularly annoying to gather because I’m working on my PC these days, so I had to manually select and copy+paste each text and compose emails to myself, etc., etc. Maybe this would’ve been easier using the iMessage app on my MacBook?! Anyway, I just created a new txt file in the p5 sketch with an assortment of my dad’s recent texts to me… and that’s it really! My sketch is here. Not sure how to define it specifically but the generated texts feel pretty authentic to me!

DoorDash texts

Real dad texts

Generated dad texts

You know, I actually really love that I landed on these two sketches because it is only my dad and DoorDash that text me haha! Or maybe that’s just how it feels sometimes!

I realize that these sketches I modified for the assignment are super close to the examples provided and are pretty simplistic. I haven’t had the time to build these examples out fully yet. In the future, I’d love to create more options for the DoorDash CFG. I think it would be kinda funny if I generated texts that sometimes responded back to my dad. Also, I want to build out the UI in p5 to look like the Messages app on my phone so that I could put my generated text in a meaningful visual context.

Resources

Priyanka’s DoorDash CFG sketch