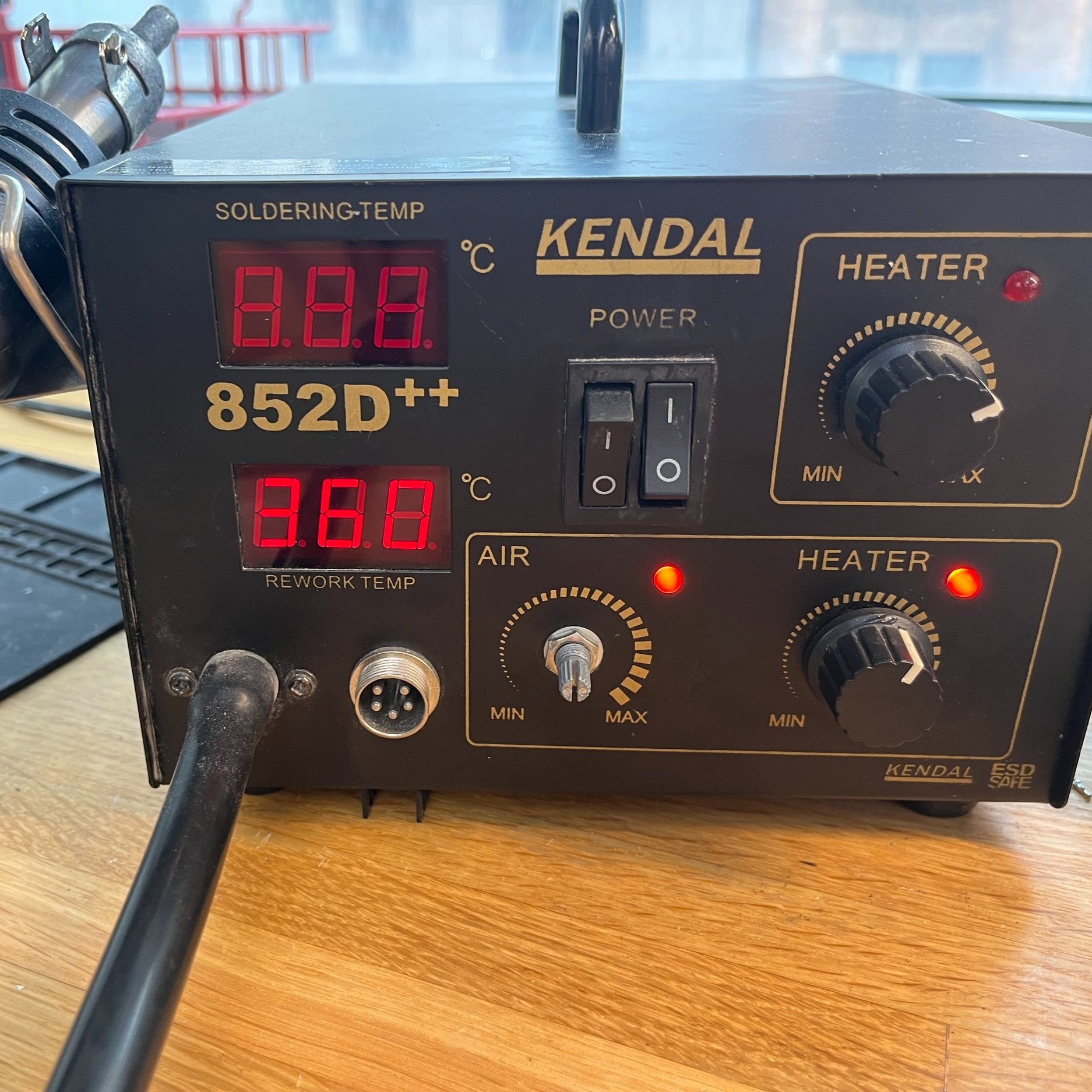

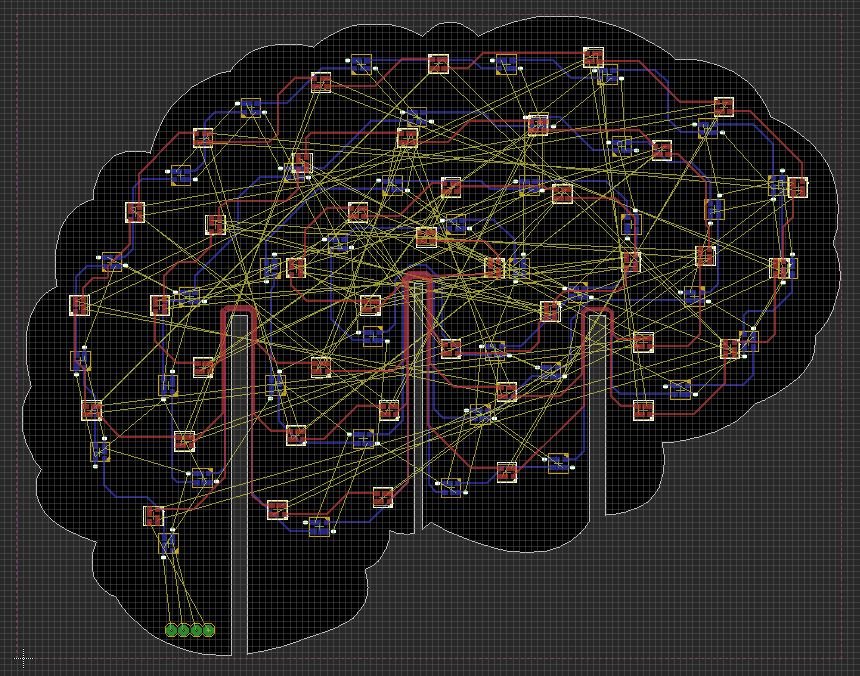

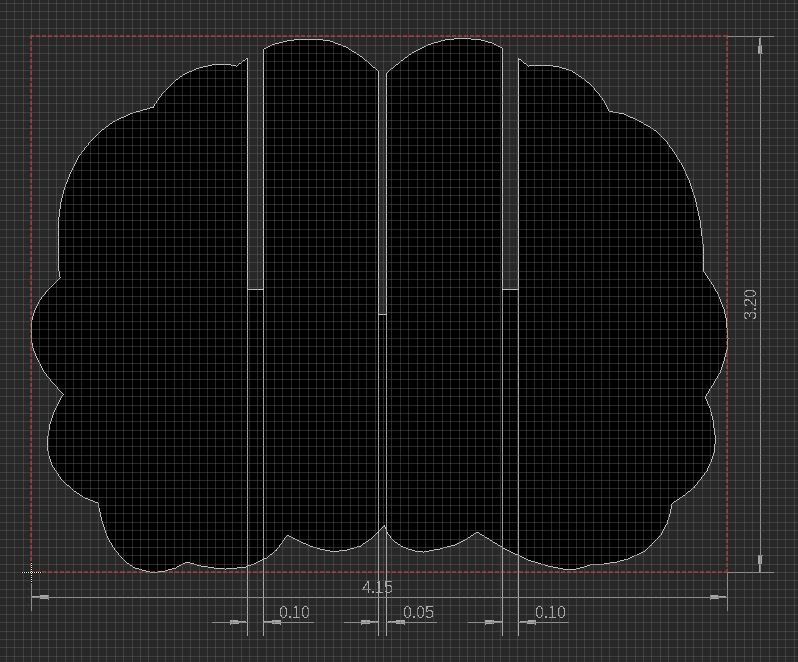

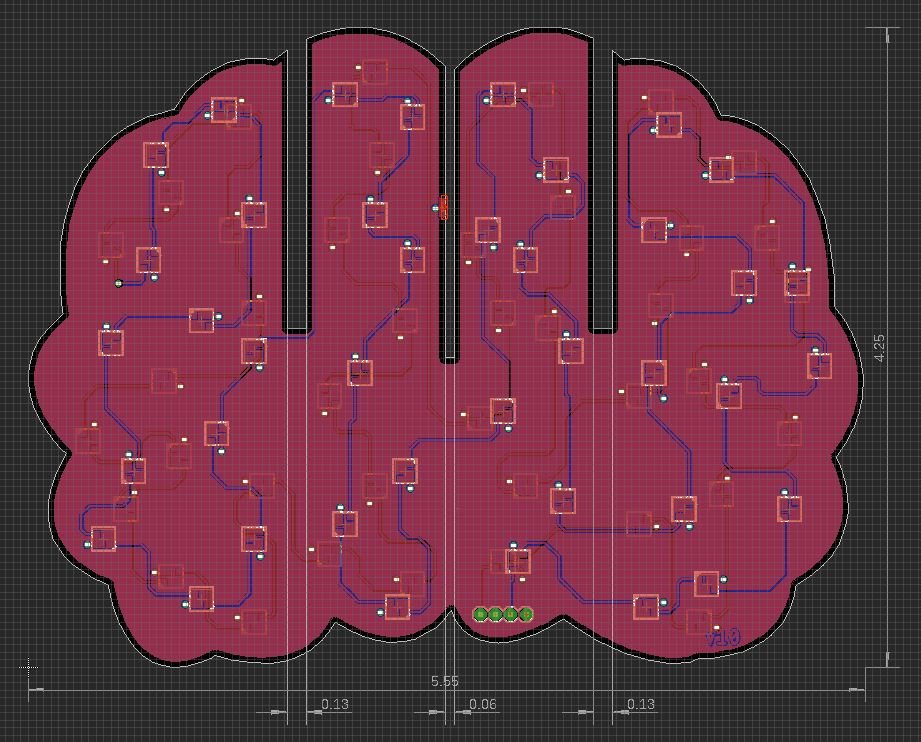

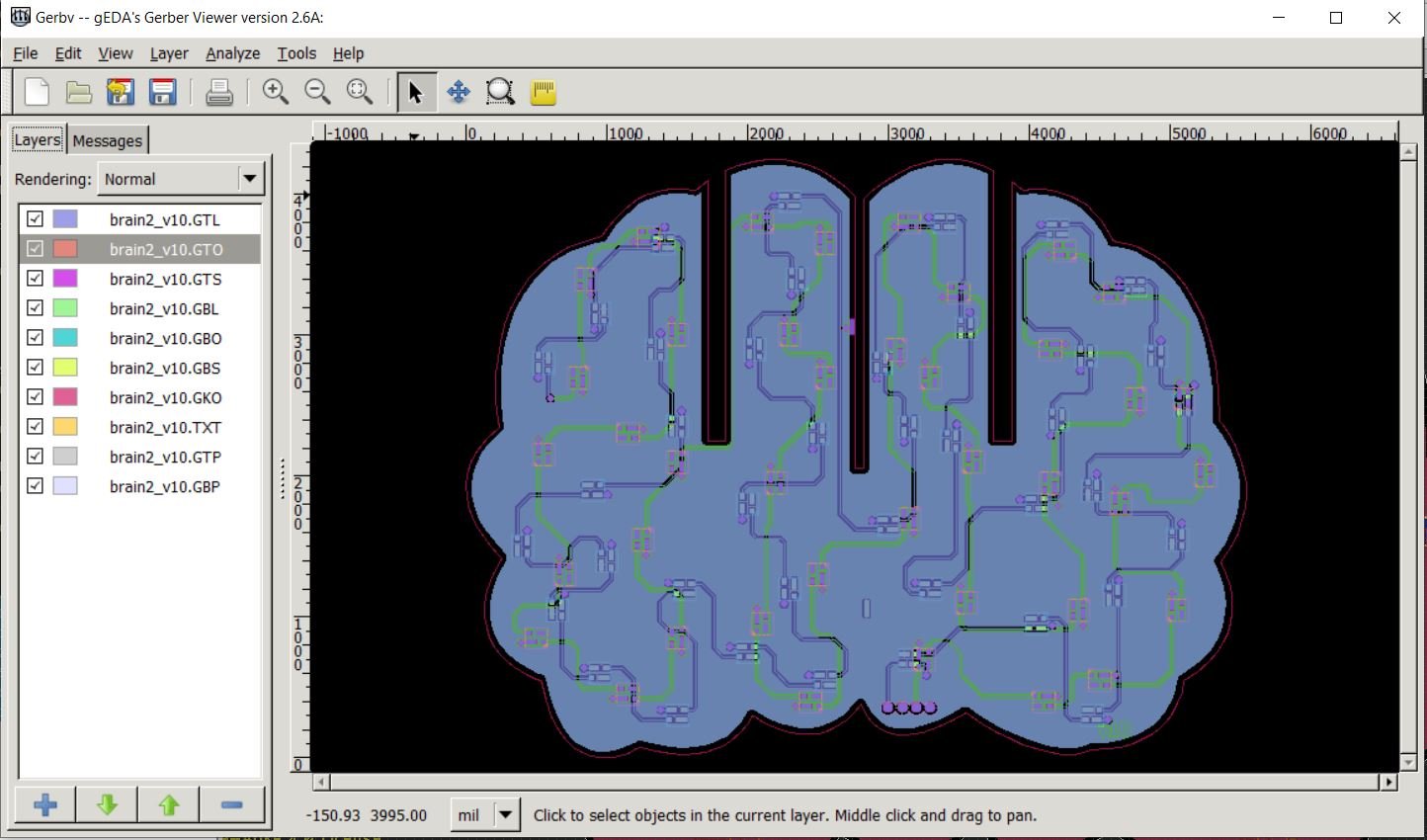

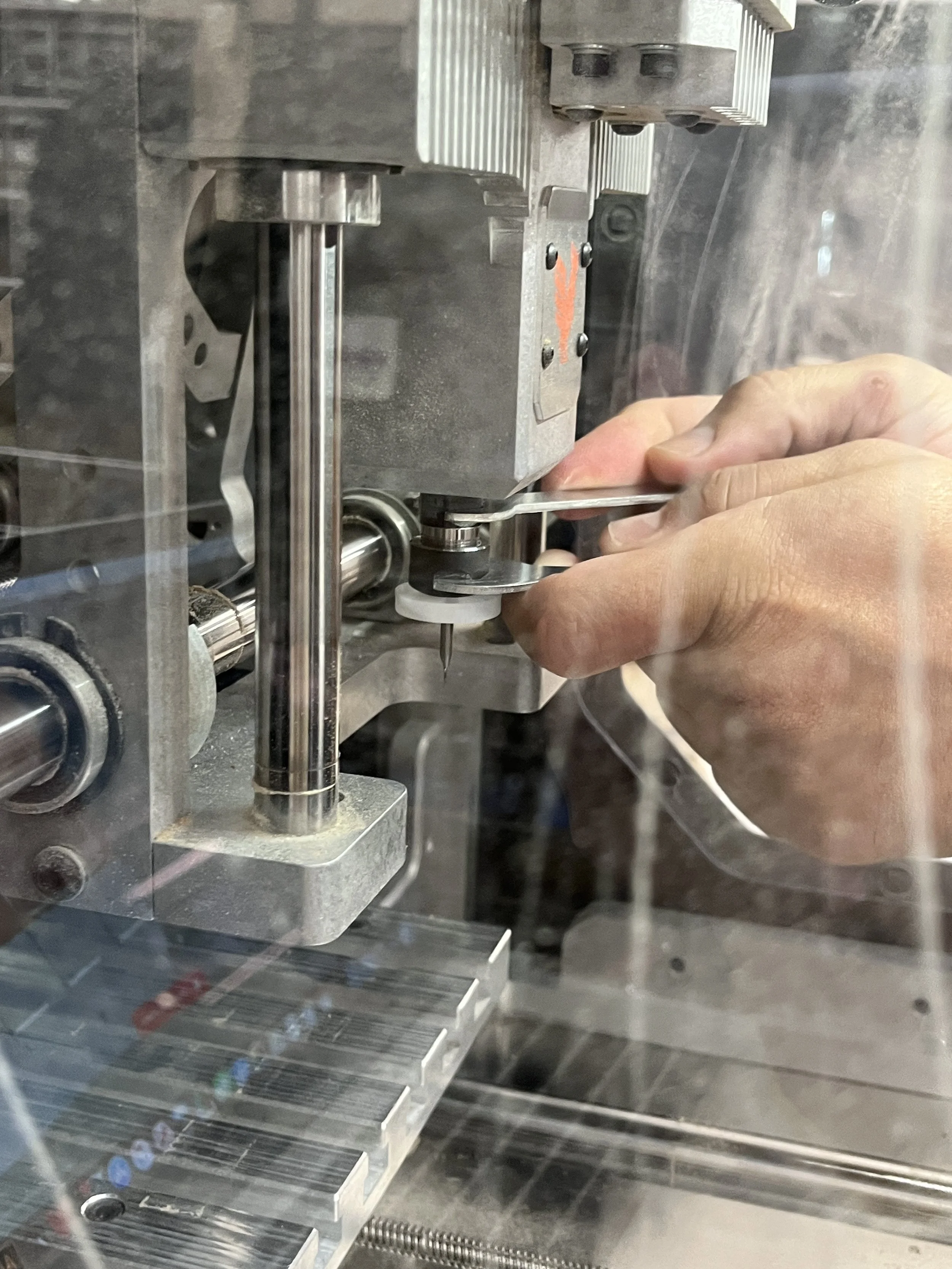

For this week’s homework, I set up my Teensy 4.0 and the Audio Shield using the Audio Workshop tutorial from PJRC as a starting point. Here’s how my hardware is hooked up for now:

Since I’m just getting started with analog synthesis, I decided to work through the examples shown in class.

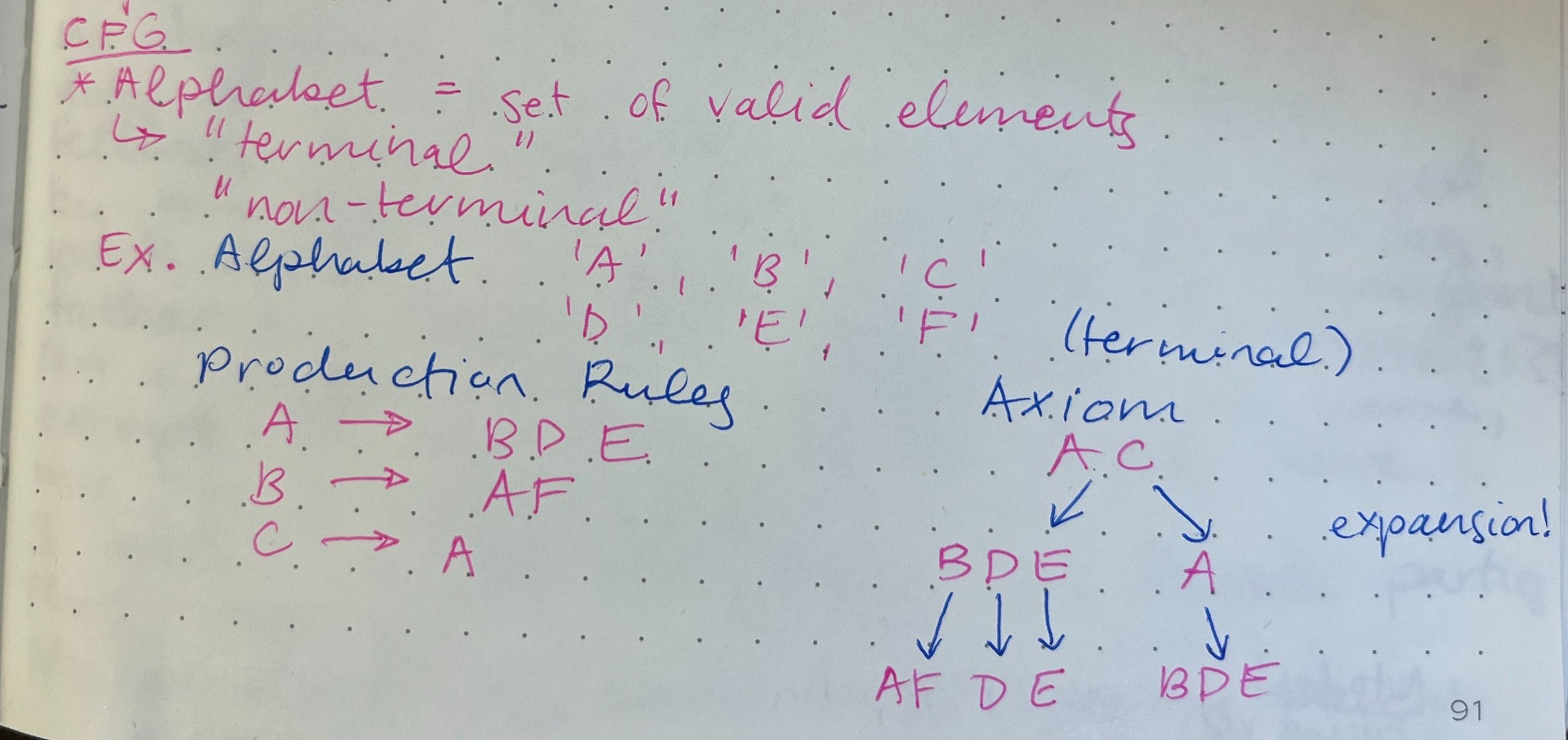

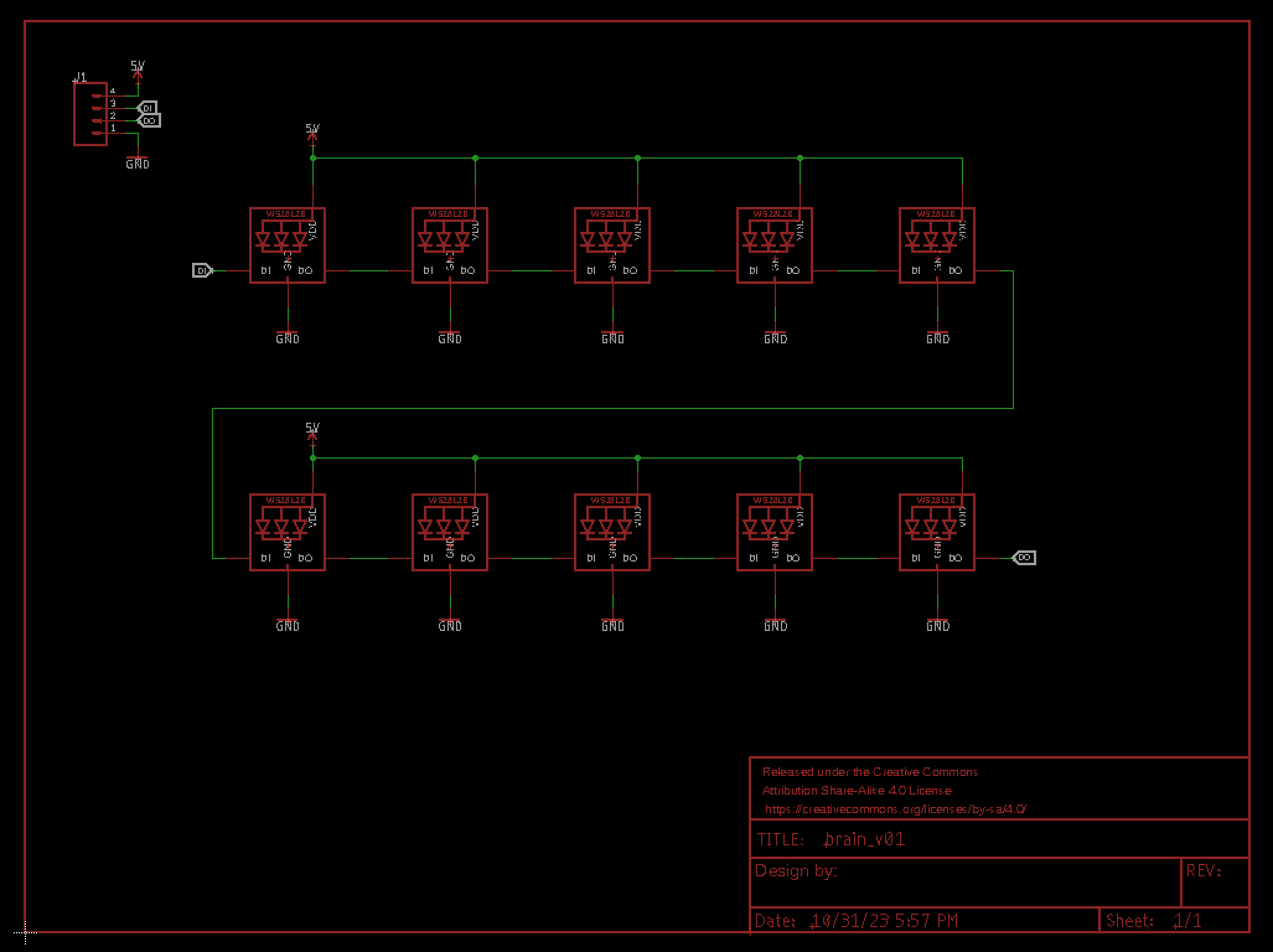

Waveform

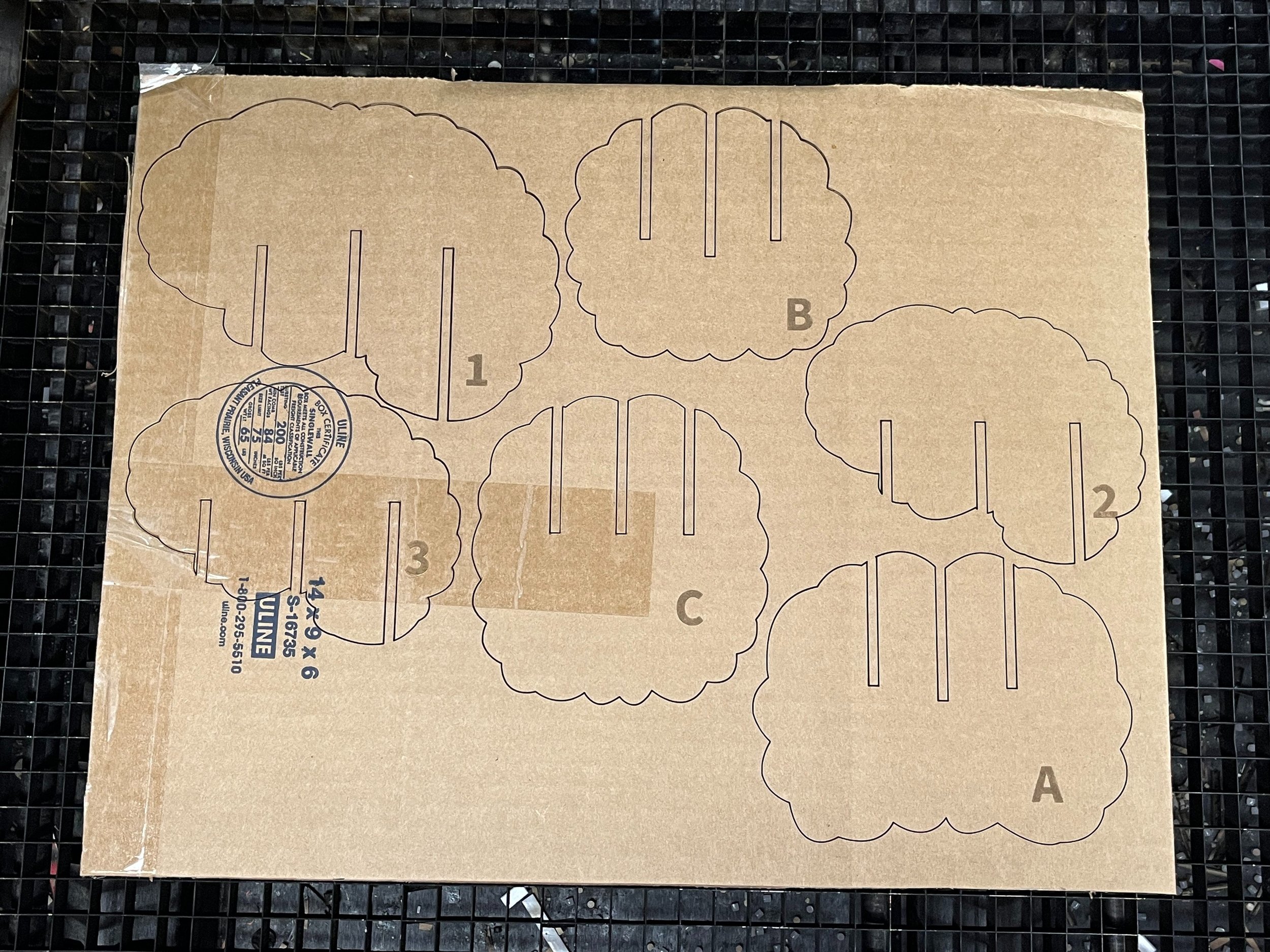

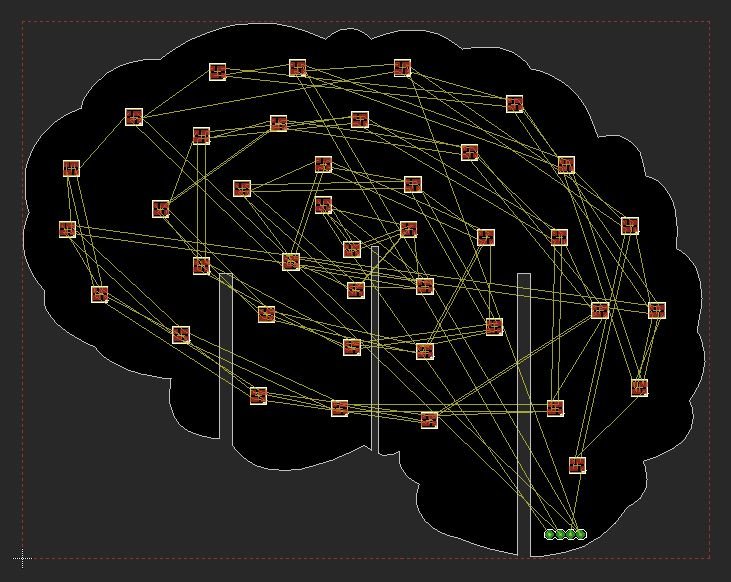

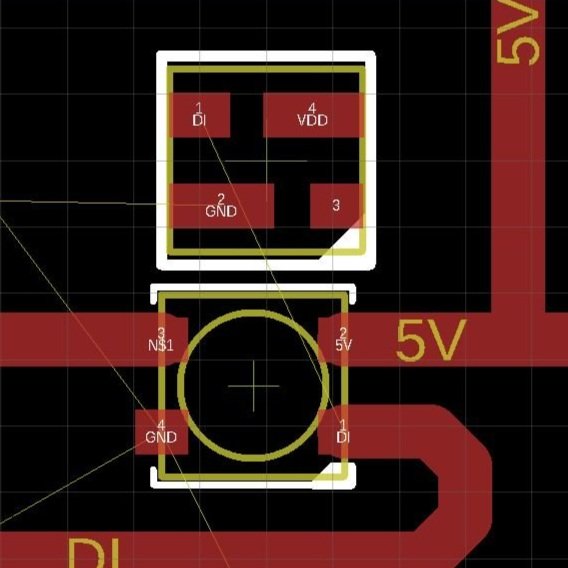

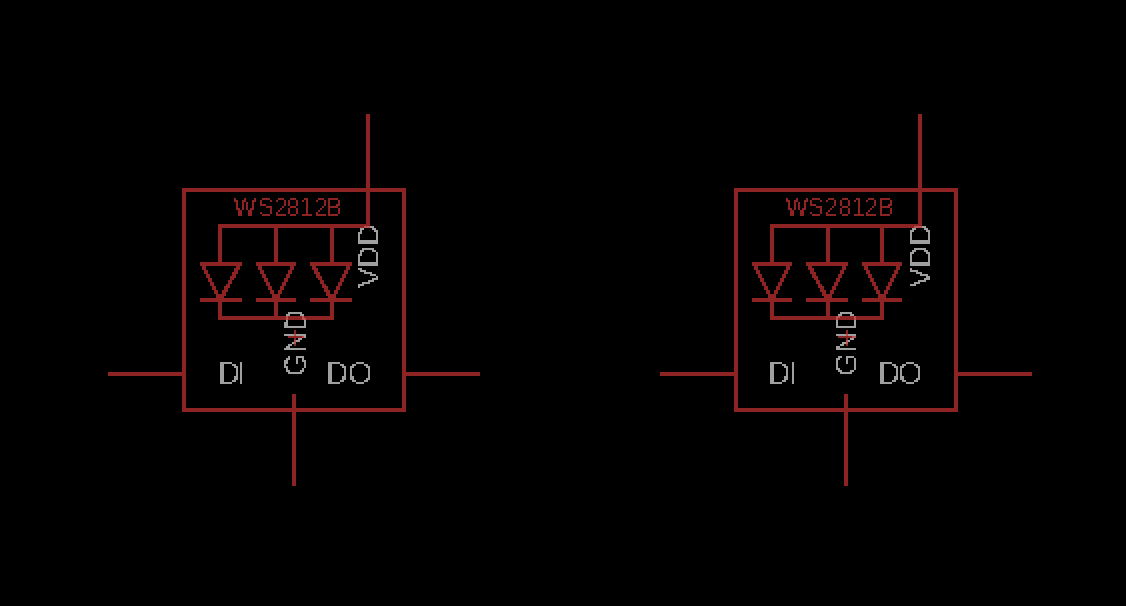

This example lets you adjust the frequency of the waveform output by turning a potentiometer. The i2s block shows up in basically every Audio Library project because it transmits 16-bit stereo audio to the shield.
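To make the knob-to-frequency idea concrete, here is a minimal sketch in plain C++ that can be tried off the board. The helper name `potToFrequency`, the 10-bit reading range (0 to 1023), and the 100 Hz to 1000 Hz span in the usage note are assumptions for illustration, not values taken from the class example.

```cpp
#include <algorithm>

// Map a raw potentiometer reading to a frequency in Hz.
// Assumption: the reading is a 10-bit ADC value (0..1023).
// Out-of-range readings are clamped to that range first.
float potToFrequency(int raw, float minHz, float maxHz) {
    int clamped = std::max(0, std::min(raw, 1023));
    float knob = static_cast<float>(clamped) / 1023.0f;  // 0.0 .. 1.0
    return minHz + knob * (maxHz - minHz);               // linear sweep
}
```

On the Teensy, the result of something like `potToFrequency(analogRead(pin), 100.0f, 1000.0f)` would be handed to the waveform object's `frequency()` call each time through `loop()`.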

Waveform with button cycle

This example is the same as the last one, but you can cycle through different shapes for the sound wave (sine, square, triangle, pulse, sawtooth) with a button press. It uses the same flow diagram as the previous example.
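The cycling logic is just a counter that wraps around. Here is a rough sketch of it in plain C++. The `Shape` enum and `nextShapeIndex` are hypothetical names standing in for the Audio Library's `WAVEFORM_*` constants and whatever the class sketch actually does.

```cpp
#include <array>
#include <cstddef>

// Hypothetical stand-in for the Audio Library's WAVEFORM_* constants.
enum class Shape { Sine, Square, Triangle, Pulse, Sawtooth };

// The shapes in the order the button steps through them.
constexpr std::array<Shape, 5> kShapes = {
    Shape::Sine, Shape::Square, Shape::Triangle, Shape::Pulse, Shape::Sawtooth};

// Advance to the next shape, wrapping back to the first after the last.
std::size_t nextShapeIndex(std::size_t current) {
    return (current + 1) % kShapes.size();
}
```

Each debounced button press would call `nextShapeIndex` once and then restart the waveform object with the newly selected shape.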

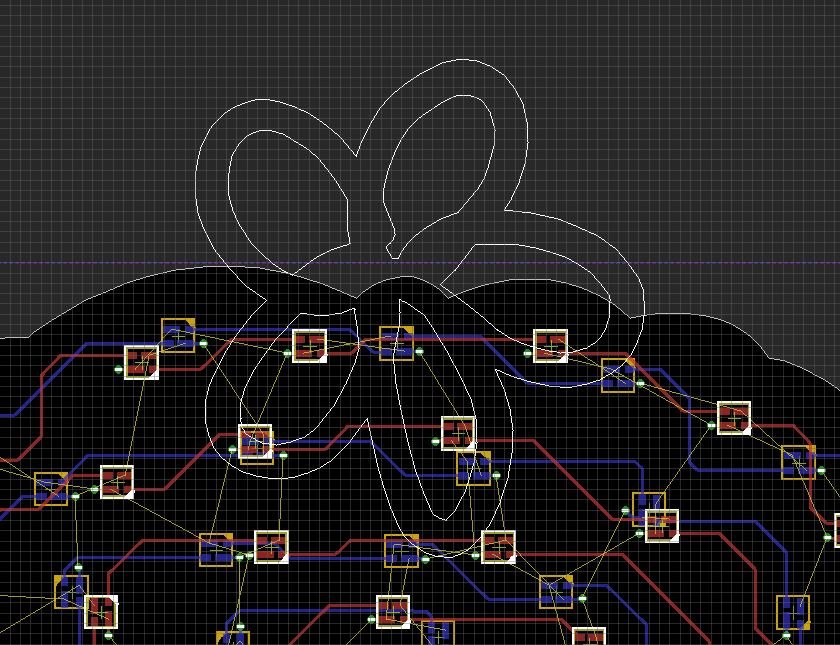

Harmonics

Again, a button controls the shape of the signal. The sketch plays a waveform along with two more signals, one and two octaves below it. Potentiometers control the gain of each of the three waves. The mixer block combines up to four audio signals into one.
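Two bits of arithmetic are hiding in that description: each octave down halves the frequency, and the mixer output is a gain-weighted sum of its inputs. A minimal sketch in plain C++, with `octaveBelow` and `mix3` as made-up helper names (on the board, the mixer block does the summing for you):

```cpp
#include <cmath>

// Frequency a given number of octaves below the base.
// Each octave down halves the frequency, so this is baseHz / 2^octaves.
float octaveBelow(float baseHz, int octaves) {
    return std::ldexp(baseHz, -octaves);  // baseHz * 2^(-octaves)
}

// One output sample of a three-input mix: each input scaled by its own gain.
float mix3(const float in[3], const float gain[3]) {
    float out = 0.0f;
    for (int i = 0; i < 3; ++i) {
        out += in[i] * gain[i];
    }
    return out;
}
```

With a 440 Hz base, the other two oscillators would run at 220 Hz and 110 Hz. In the Audio Library, the per-channel gains are set with the mixer's `gain(channel, level)` call.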

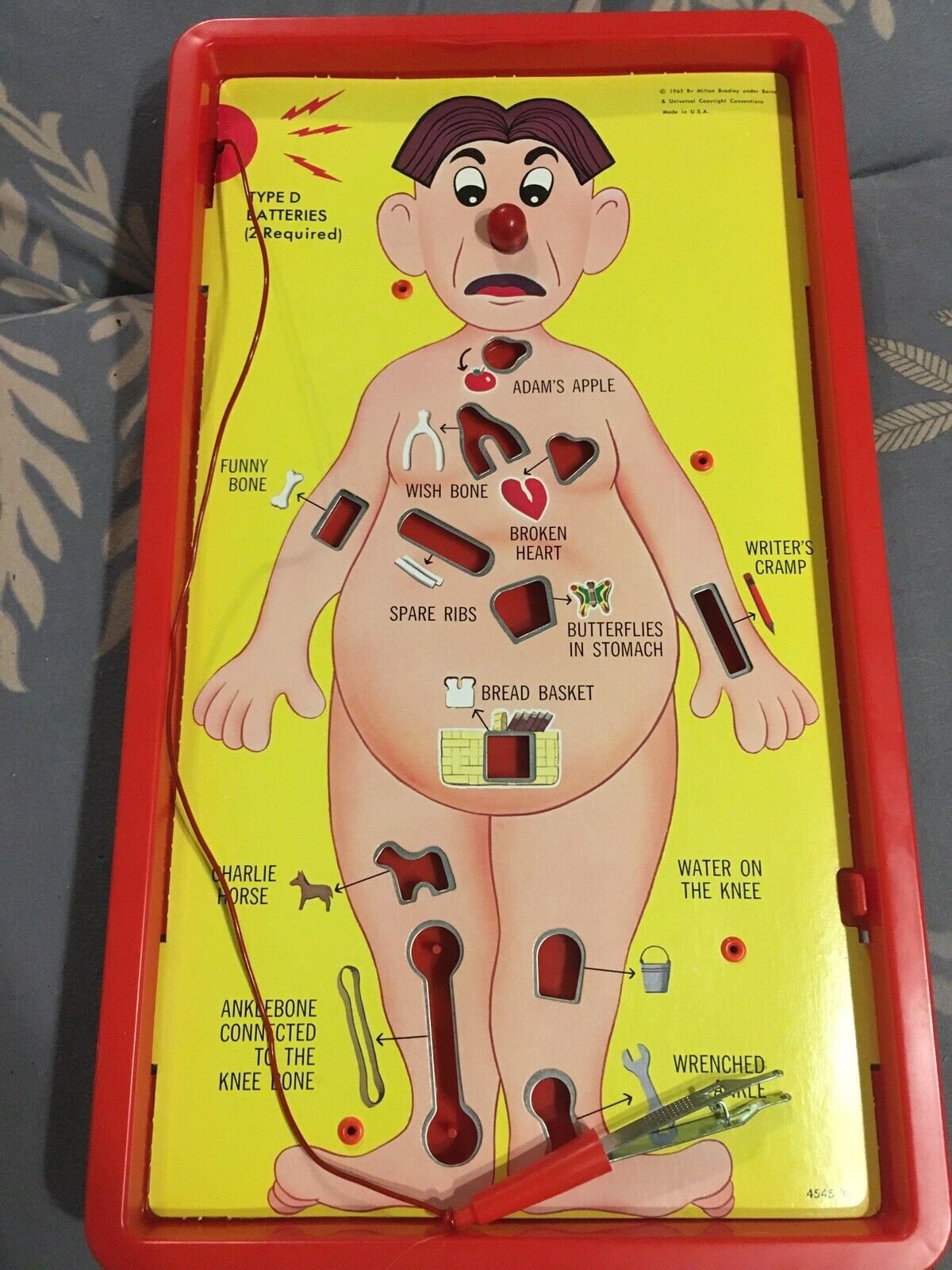

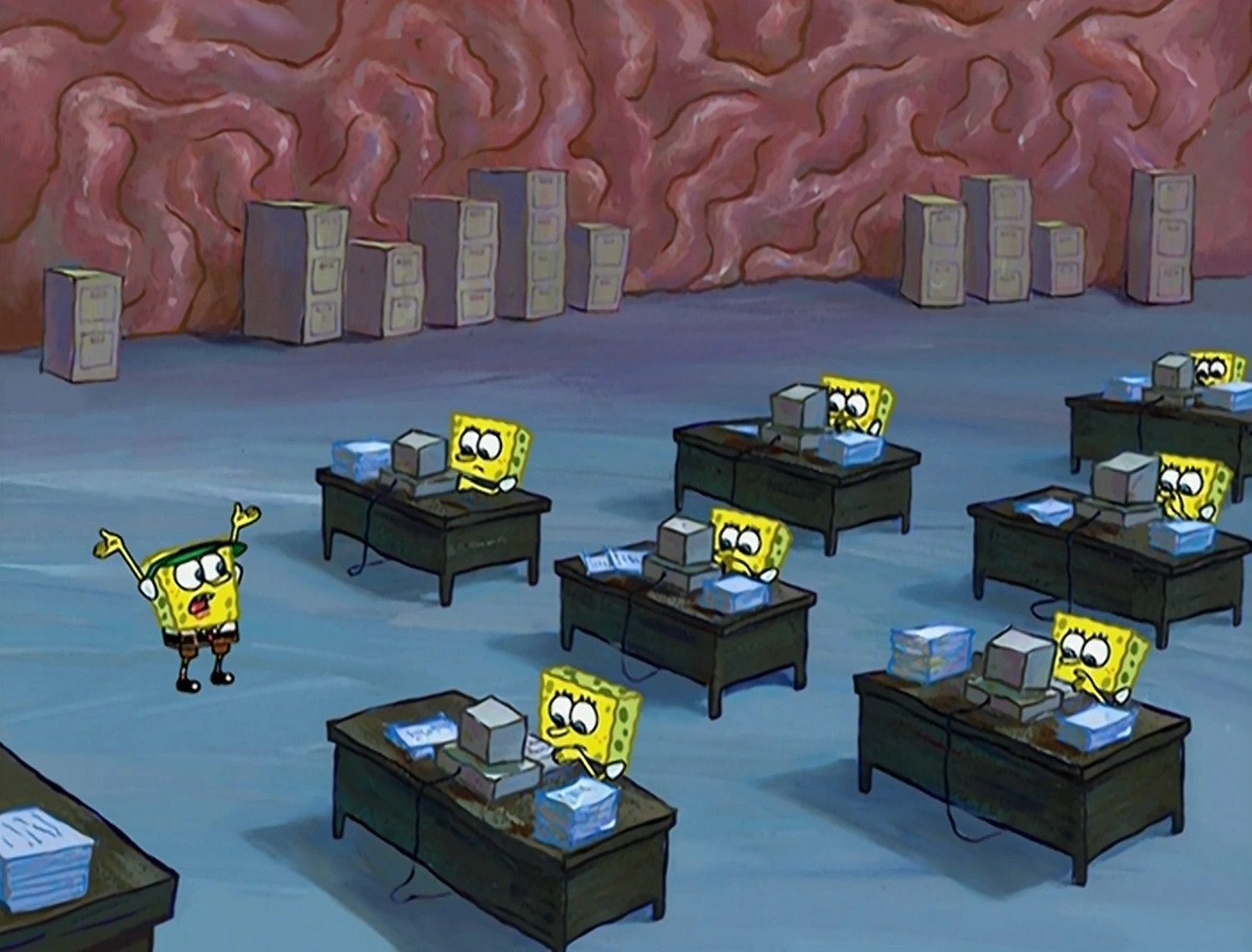

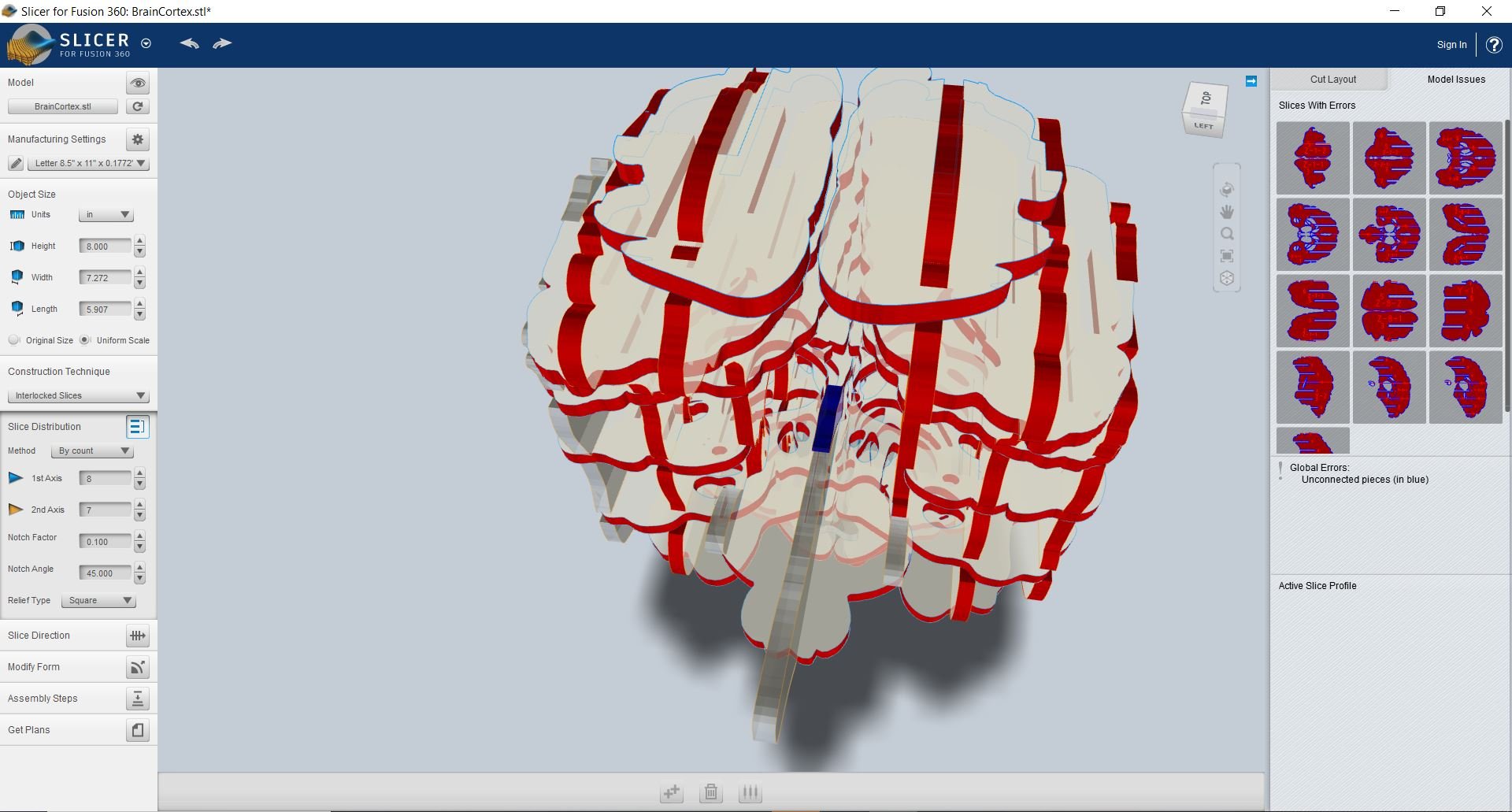

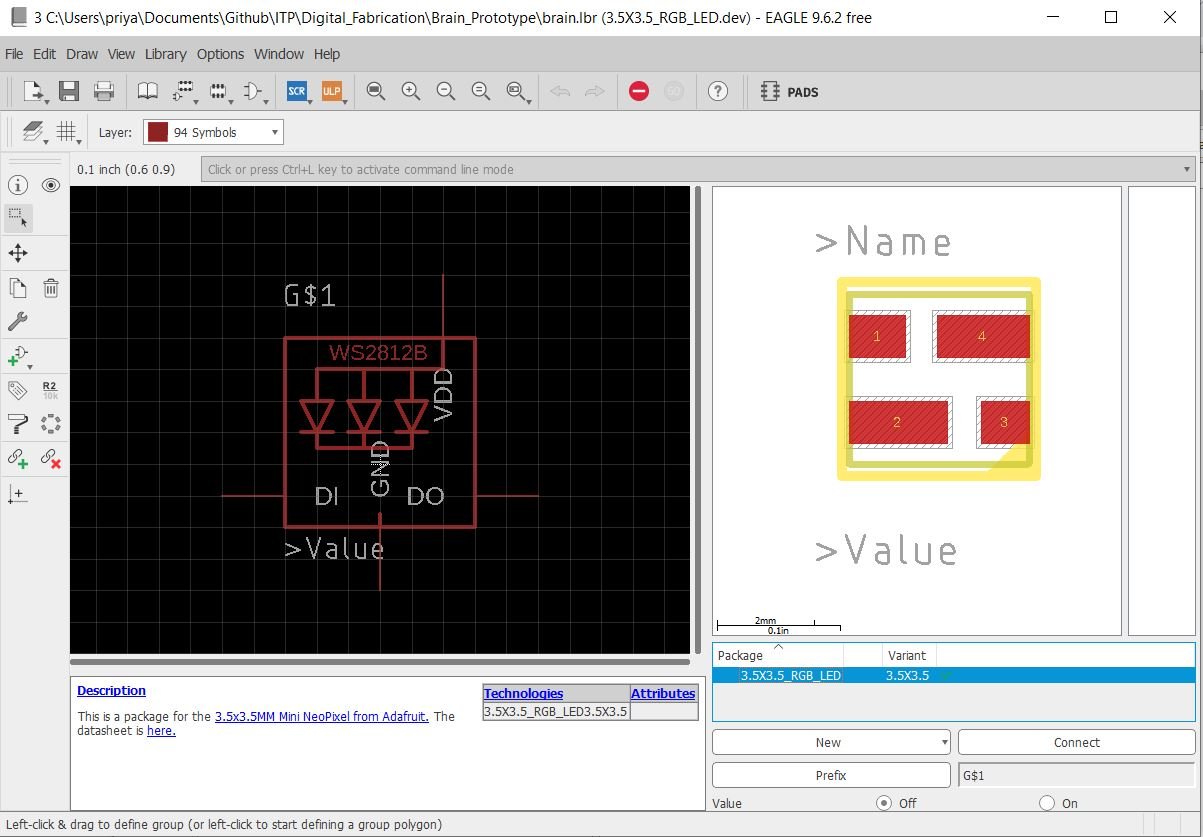

Mixer software block

Harmonics with filter

In this example, the button again cycles the waveform shape. The first two potentiometers control the gain of a base frequency and its subharmonic one octave below (base frequency / 2). The third potentiometer changes the cutoff frequency of a low-pass filter.
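To get a feel for what a cutoff frequency does, here is a very simple one-pole low-pass filter in plain C++. This is not the filter block from the Audio Library, just a textbook stand-in I'm using for illustration. The function name `lowPass` is made up, and the 44100 Hz sample rate in the usage note is an assumed value.

```cpp
#include <cmath>
#include <vector>

// One-pole low-pass filter (illustrative stand-in, not the library's filter):
//   y[n] = y[n-1] + a * (x[n] - y[n-1]),  a = 1 - exp(-2*pi*fc/fs)
// Signals well below the cutoff pass through; signals well above are attenuated.
std::vector<float> lowPass(const std::vector<float>& x,
                           float cutoffHz, float sampleRateHz) {
    const float pi = 3.14159265f;
    const float a = 1.0f - std::exp(-2.0f * pi * cutoffHz / sampleRateHz);
    std::vector<float> y;
    y.reserve(x.size());
    float state = 0.0f;  // previous output sample
    for (float sample : x) {
        state += a * (sample - state);
        y.push_back(state);
    }
    return y;
}
```

Calling `lowPass(signal, 1000.0f, 44100.0f)` on a steady (0 Hz) input settles at the input level, while an input that flips sign every sample (the highest frequency the sample rate can represent) comes out much quieter. Turning the third potentiometer corresponds to changing `cutoffHz`.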

Detuning

In this example, the first potentiometer controls the base frequency. A second potentiometer controls the detuned frequency. I’m pretty sure that playing these two waves at the same time creates beats.
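The beats do check out mathematically: adding two sines gives a fast "carrier" at the average frequency multiplied by a slow envelope, sin(2πf₁t) + sin(2πf₂t) = 2·cos(π(f₁ − f₂)t)·sin(π(f₁ + f₂)t), and the loudness pulses |f₁ − f₂| times per second. A small sketch in plain C++ (all function names here are my own, for illustration only):

```cpp
#include <cmath>

const double kPi = 3.14159265358979323846;

// Number of loudness pulses per second when two close tones play together.
double beatFrequency(double f1Hz, double f2Hz) {
    return std::fabs(f1Hz - f2Hz);
}

// Sum of two unit-amplitude sine waves at time t (in seconds).
double twoTones(double f1Hz, double f2Hz, double t) {
    return std::sin(2.0 * kPi * f1Hz * t) + std::sin(2.0 * kPi * f2Hz * t);
}

// Slow envelope from the sum-to-product identity: 2*cos(pi*(f1 - f2)*t).
double beatEnvelope(double f1Hz, double f2Hz, double t) {
    return 2.0 * std::cos(kPi * (f1Hz - f2Hz) * t);
}
```

For example, a 440 Hz tone against one detuned to 444 Hz gives `beatFrequency(440.0, 444.0)` of 4, so the volume swells four times a second.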

Detuning with filter

Same detuning strategy as above, but the third potentiometer controls the cutoff frequency of a high-pass filter.

I ended up skipping the last example from the class GitHub because it requires SD card playback, and I don’t have an SD card yet. One weird thing I did encounter is that the headphone jack on the Audio Shield is a little bit iffy: my headphones need to be plugged in at just the right depth to hear any audio, and bumping the plug can affect the output…