Evil bit - a fictional IPv4 packet header field proposed in a Request for Comments (RFC 3514) published as an April Fools' Day joke in 2003. The RFC recommended that the last unused bit in the IPv4 packet header, the “reserved bit” (the high-order bit of the 16-bit word that holds the flags and the fragment offset), be used to indicate whether a packet had been sent with malicious intent, thus simplifying internet security.
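The reserved bit lives in the 16-bit word at byte offset 6 of the IPv4 header, which holds the three flag bits and the 13-bit fragment offset. The evil bit is the high-order bit of that word. The Python sketch below reads and toggles it. The function names `is_evil` and `set_evil` and the constant names are hypothetical. The sketch also does not recompute the header checksum, which a real sender would have to do after changing any header bit.

```python
import struct

# Hypothetical constant names: the evil bit is the high-order bit of the
# 16-bit flags/fragment-offset word, which starts at byte offset 6.
FLAGS_FRAG_OFFSET = 6
EVIL_BIT_MASK = 0x8000


def is_evil(header: bytes) -> bool:
    """Return True if the reserved ("evil") bit is set in an IPv4 header."""
    (word,) = struct.unpack_from("!H", header, FLAGS_FRAG_OFFSET)
    return bool(word & EVIL_BIT_MASK)


def set_evil(header: bytes, evil: bool = True) -> bytes:
    """Return a copy of the header with the evil bit set (or cleared).

    Note: the IPv4 header checksum is NOT recomputed here.
    """
    buf = bytearray(header)
    (word,) = struct.unpack_from("!H", buf, FLAGS_FRAG_OFFSET)
    word = (word | EVIL_BIT_MASK) if evil else (word & ~EVIL_BIT_MASK)
    struct.pack_into("!H", buf, FLAGS_FRAG_OFFSET, word)
    return bytes(buf)


if __name__ == "__main__":
    # Minimal 20-byte header: version 4, IHL 5, DF flag set, TTL 64,
    # protocol 6 (TCP), checksum left as 0, documentation-range addresses.
    sample = struct.pack(
        "!BBHHHBBH4s4s",
        0x45, 0, 20, 0x1234, 0x4000, 64, 6, 0,
        bytes([192, 0, 2, 1]), bytes([198, 51, 100, 7]),
    )
    print(is_evil(sample))            # False
    print(is_evil(set_evil(sample)))  # True
```

Clearing the bit with `set_evil(header, False)` leaves the neighbouring DF and MF flags and the fragment offset untouched, because only bit 15 of the word is masked.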

The RFC states that benign packets have this bit set to 0, while those used for an attack have it set to 1. Firewalls must drop all inbound packets that have the evil bit set, and packets with the evil bit off must not be dropped. The RFC also describes how the evil bit should be set in different scenarios:

- Attack applications may use a suitable API to request that the bit be set.
- Packet fragments that are dangerous by themselves must have the evil bit set.
- Applications that hand-craft their own packets as part of an attack must set the evil bit.
- Hosts inside the firewall must not set the evil bit on any packets. (RFC 3514)
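The firewall rule and the rule for inside hosts can be sketched as two small filters over raw IPv4 headers. This is an illustrative Python sketch, not code from the RFC. `filter_inbound` and `outbound_violations` are hypothetical names, and treating outbound packets with the evil bit set as audit findings is an assumption, since the text only says that inside hosts must not set the bit.

```python
from typing import Iterable, List

# Assumption for this sketch: the evil bit is the top bit of byte 6 of the
# IPv4 header (the reserved flag bit that RFC 3514 repurposes).
EVIL_BIT = 0x80
MIN_IPV4_HEADER_LEN = 20


def is_evil(header: bytes) -> bool:
    """Return True if the evil bit is set in an IPv4 header."""
    if len(header) < MIN_IPV4_HEADER_LEN:
        raise ValueError("an IPv4 header is at least 20 bytes long")
    return bool(header[6] & EVIL_BIT)


def filter_inbound(headers: Iterable[bytes]) -> List[bytes]:
    """Firewall rule: drop every inbound packet with the evil bit set,
    and drop no packet that has it off."""
    return [h for h in headers if not is_evil(h)]


def outbound_violations(headers: Iterable[bytes]) -> List[bytes]:
    """Hypothetical audit helper: return packets from inside hosts that set
    the evil bit, which those hosts must never do."""
    return [h for h in headers if is_evil(h)]


if __name__ == "__main__":
    benign = bytes(6) + b"\x40" + bytes(13)  # DF flag only
    evil = bytes(6) + b"\xc0" + bytes(13)    # DF flag plus evil bit
    print(len(filter_inbound([benign, evil, benign])))  # 2
    print(len(outbound_violations([benign, evil])))     # 1
```

Note that the inbound filter is a pure function of one bit: the joke of the RFC is precisely that no other inspection is needed.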

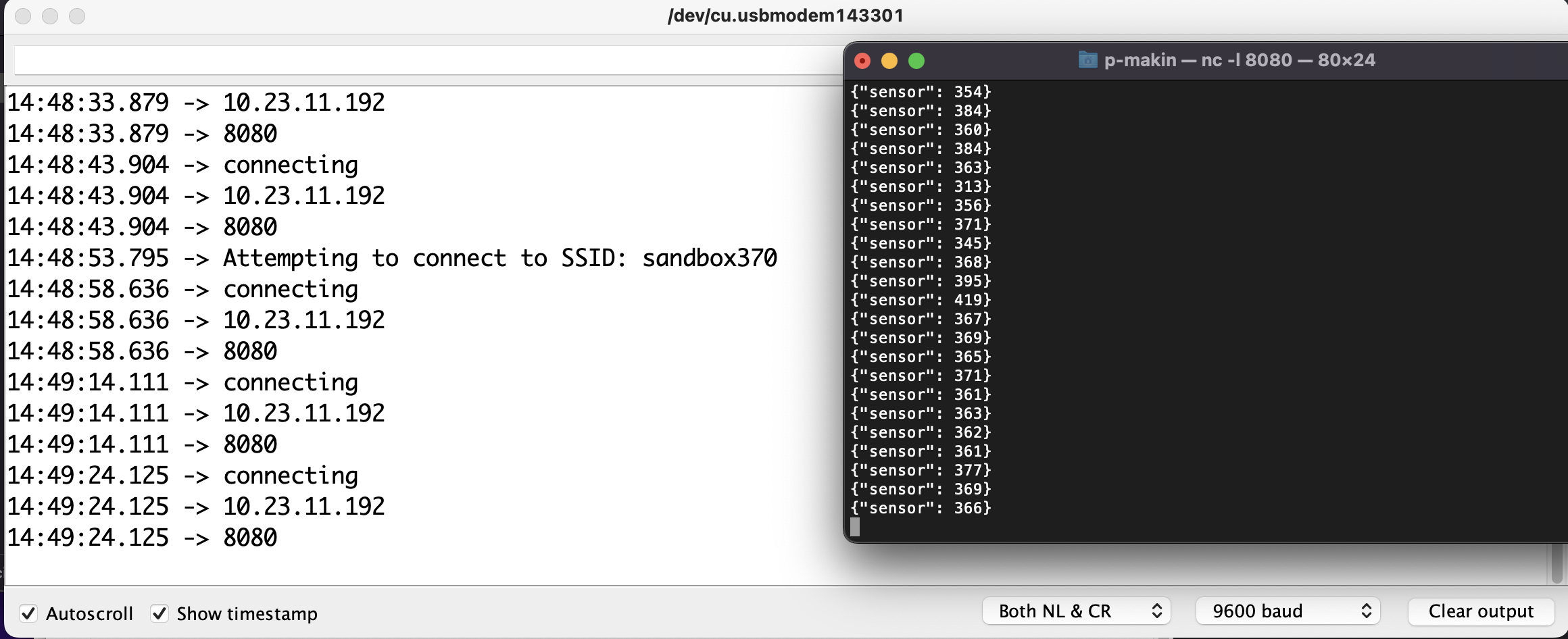

Packet header - Data sent over computer networks, such as the internet, is divided into packets. A packet header is a “label” which provides information about the packet’s contents, origin, and destination. Network packets include a header so that the device that receives them knows where the packets come from, what they are for, and how to process them.

Packets actually have more than one header, and each header is used in a different part of the networking process. Each header is added by a particular networking protocol. At a minimum, most packets that traverse the internet include an Internet Protocol (IP) header, usually followed by a transport-layer header such as a Transmission Control Protocol (TCP) header.
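Because the headers are nested, a receiver locates each one by reading a length field in the header before it. The following Python sketch assumes an IPv4 packet whose Protocol field is 6 (TCP) and splits it into its IP header, TCP header, and payload using the IPv4 IHL (header length) field and the TCP data offset field. The name `split_ipv4_tcp` is hypothetical, and checksums are not validated.

```python
import struct
from typing import Tuple

TCP_PROTOCOL = 6  # protocol number carried in the IPv4 "Protocol" field for TCP


def split_ipv4_tcp(packet: bytes) -> Tuple[bytes, bytes, bytes]:
    """Split a raw IPv4 packet carrying TCP into (ip_header, tcp_header, payload).

    Checksums are not validated; this only shows how each header is located.
    """
    if packet[0] >> 4 != 4:
        raise ValueError("not an IPv4 packet")
    if packet[9] != TCP_PROTOCOL:
        raise ValueError("IP payload is not TCP")
    ip_len = (packet[0] & 0x0F) * 4                     # IHL counts 32-bit words
    (total_len,) = struct.unpack_from("!H", packet, 2)  # IP header + everything it carries
    segment = packet[ip_len:total_len]                   # the TCP segment inside the IP packet
    tcp_len = (segment[12] >> 4) * 4                     # TCP data offset, also in 32-bit words
    return packet[:ip_len], segment[:tcp_len], segment[tcp_len:]


if __name__ == "__main__":
    payload = b"hello"
    # 20-byte TCP header: ports, seq, ack, data offset 5, PSH+ACK, window, checksum, urgent.
    tcp = struct.pack("!HHIIBBHHH", 12345, 80, 0, 0, 0x50, 0x18, 65535, 0, 0)
    ip = struct.pack(
        "!BBHHHBBH4s4s",
        0x45, 0, 20 + len(tcp) + len(payload), 0, 0x4000, 64, TCP_PROTOCOL, 0,
        bytes([192, 0, 2, 1]), bytes([198, 51, 100, 7]),
    )
    ip_hdr, tcp_hdr, data = split_ipv4_tcp(ip + tcp + payload)
    print(len(ip_hdr), len(tcp_hdr), data)  # 20 20 b'hello'
```

Slicing up to the IP Total Length field rather than to `len(packet)` lets the sketch ignore any link-layer padding that trails a short packet.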

For example, the IPv4 packet header consists of at least 20 bytes of data (exactly 20 when no options are present), divided into the following fields: