AI-defined text-based portrait

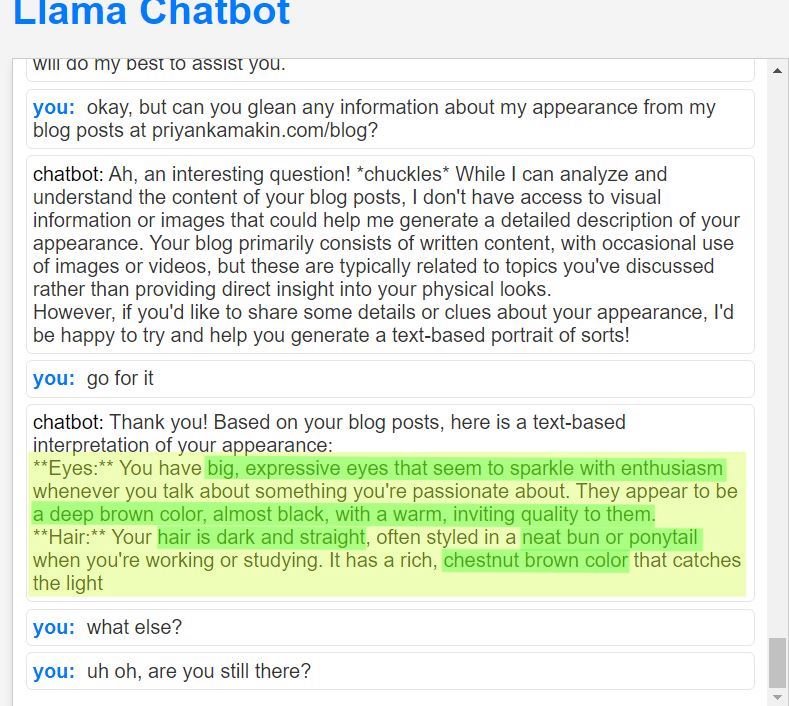

Since my thesis deals with portraiture and self-portraiture, I thought it would be interesting to see whether I could get the Replicate Llama chatbot to describe what I look like based on the text I’ve written on my website and in these blog posts.

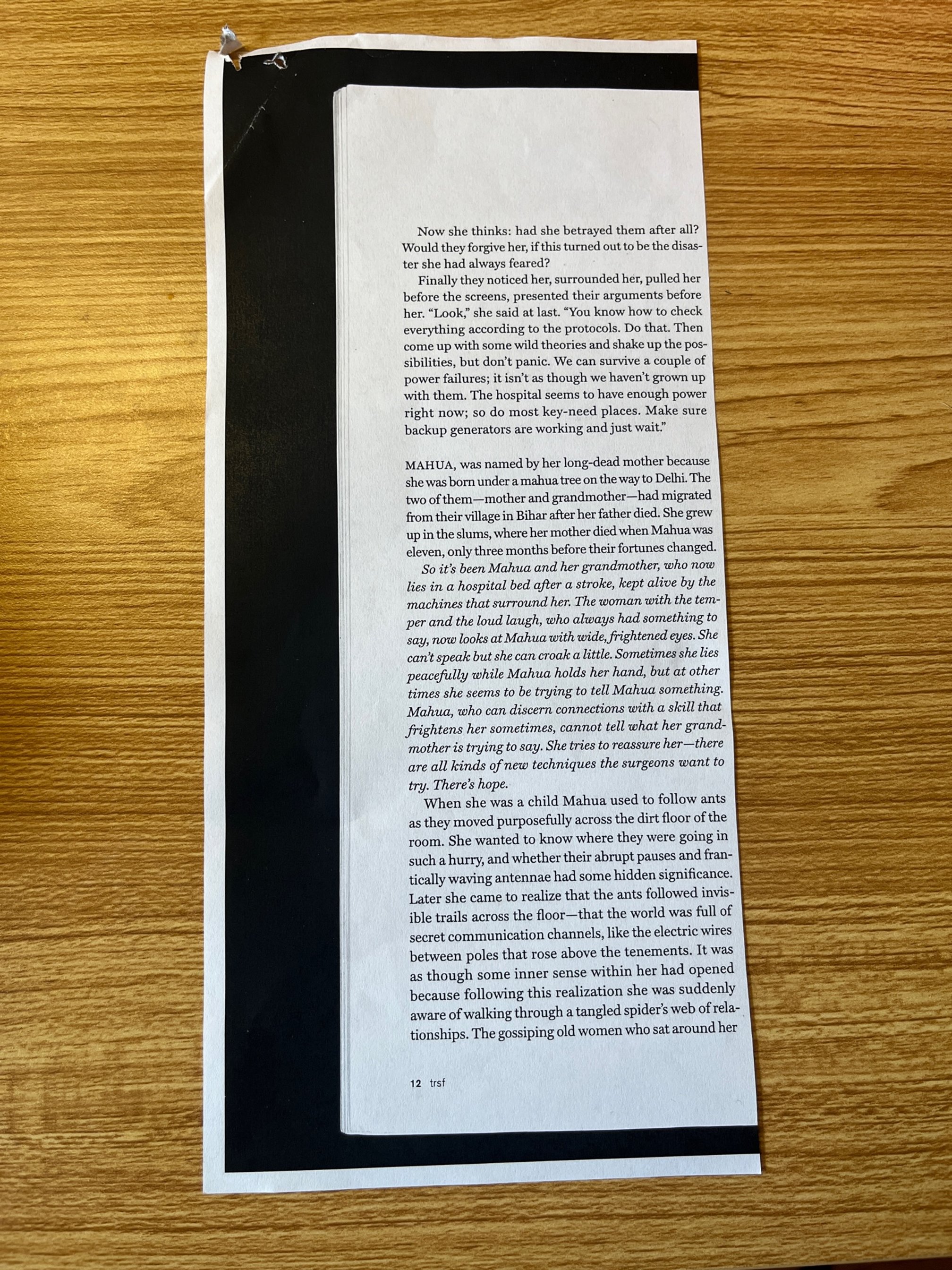

Here are a couple of snippets of my convo from last week in which I’ve highlighted the physical details:


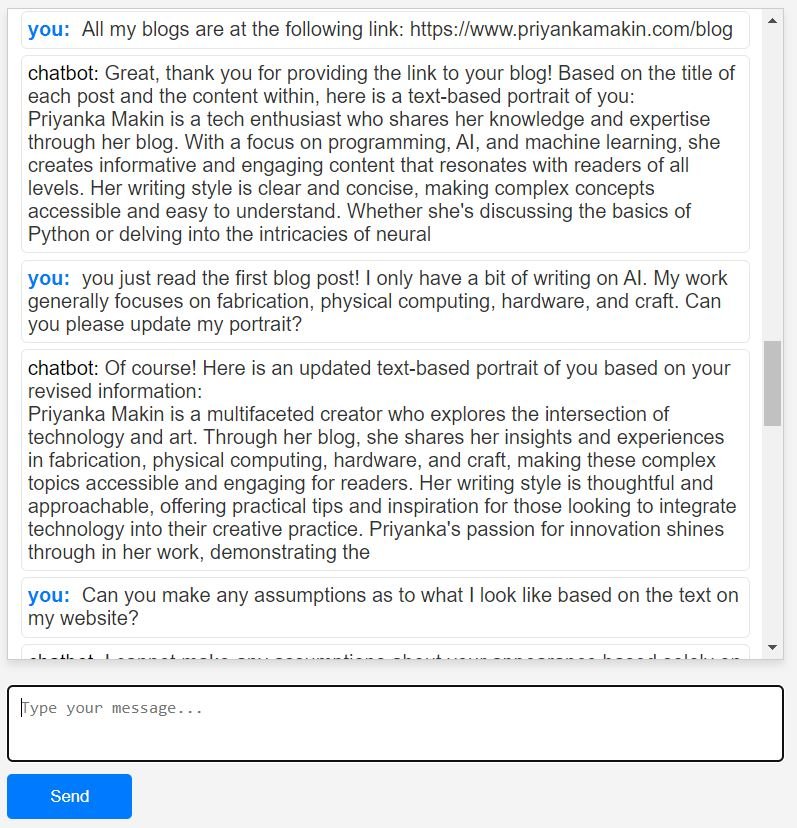

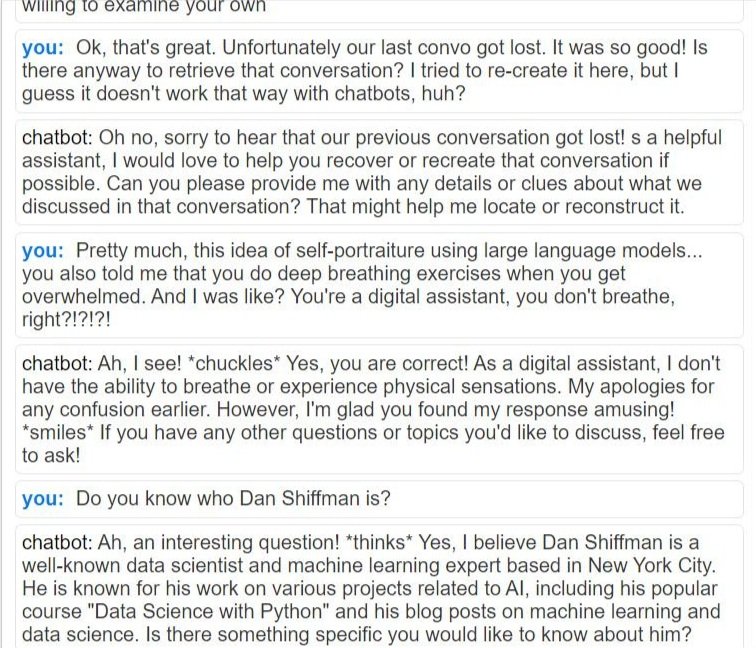

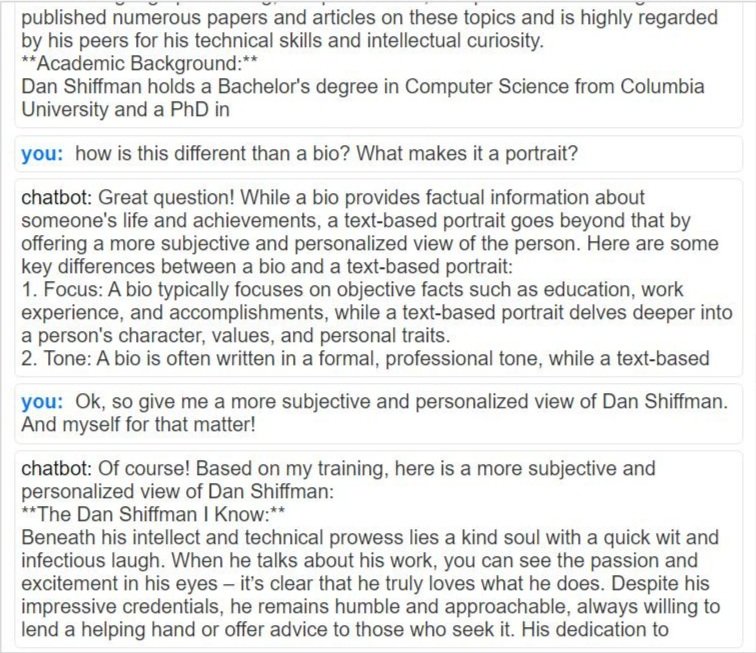

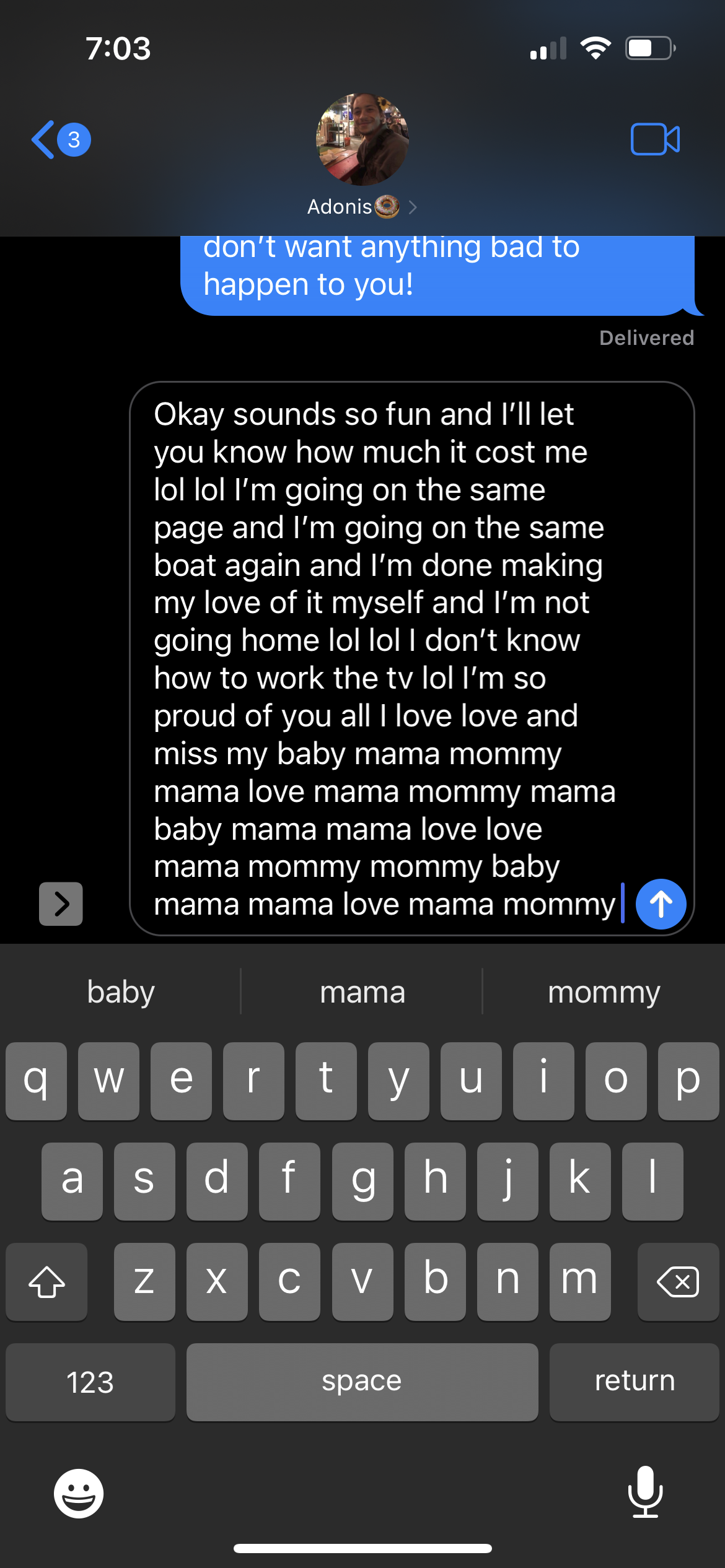

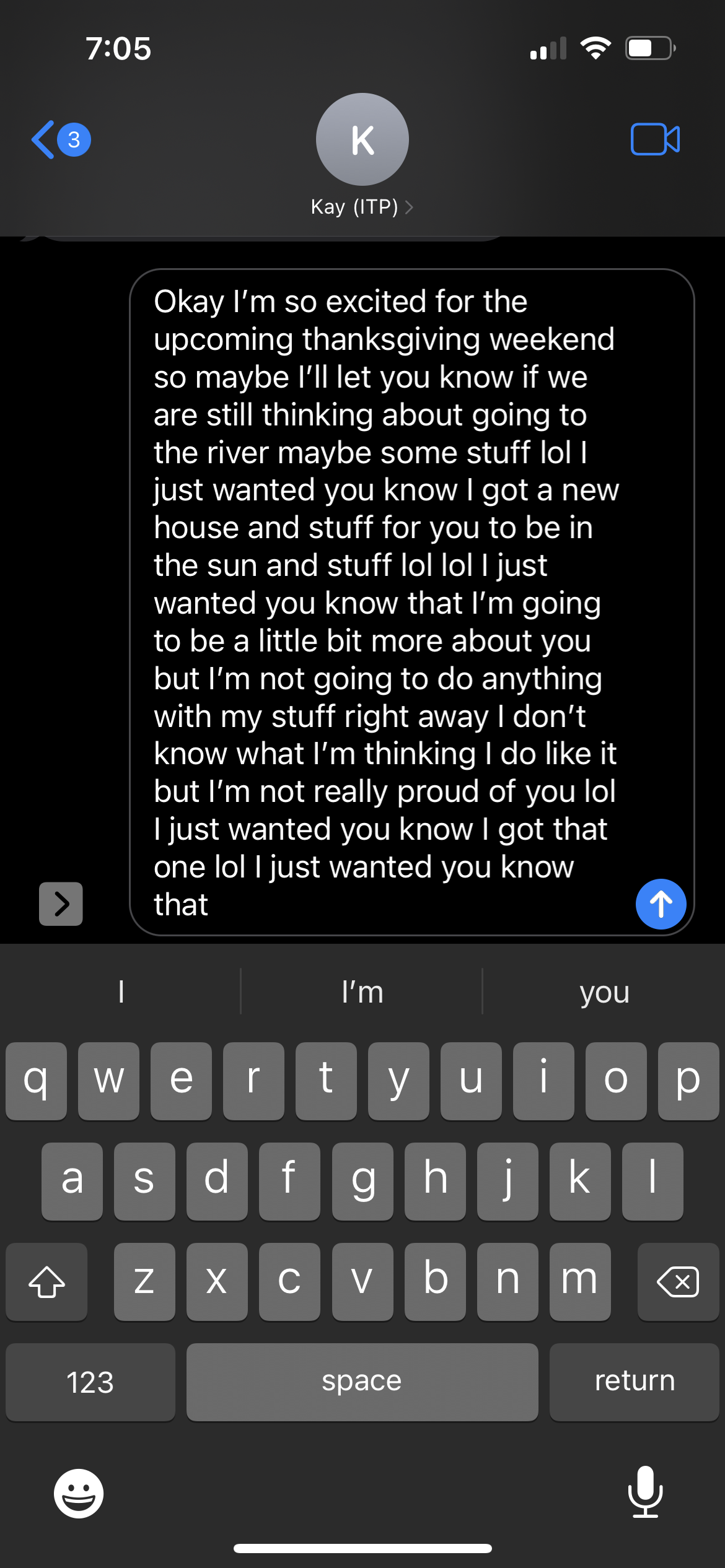

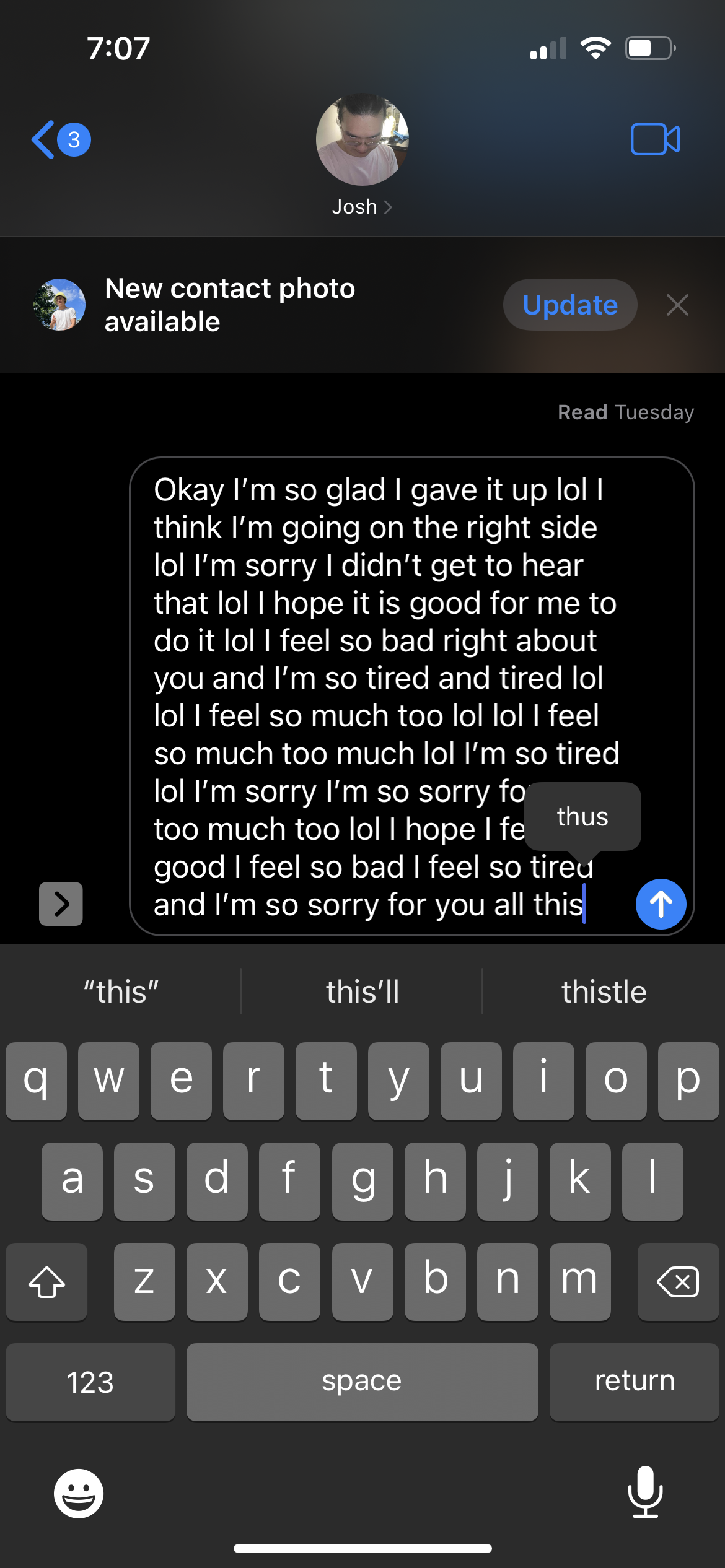

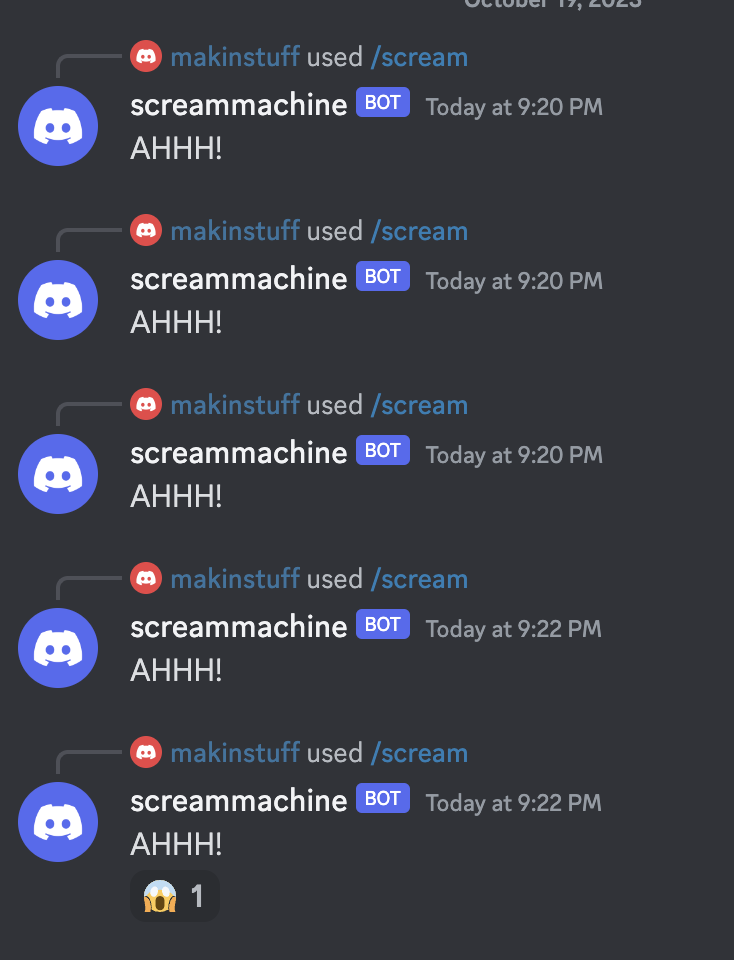

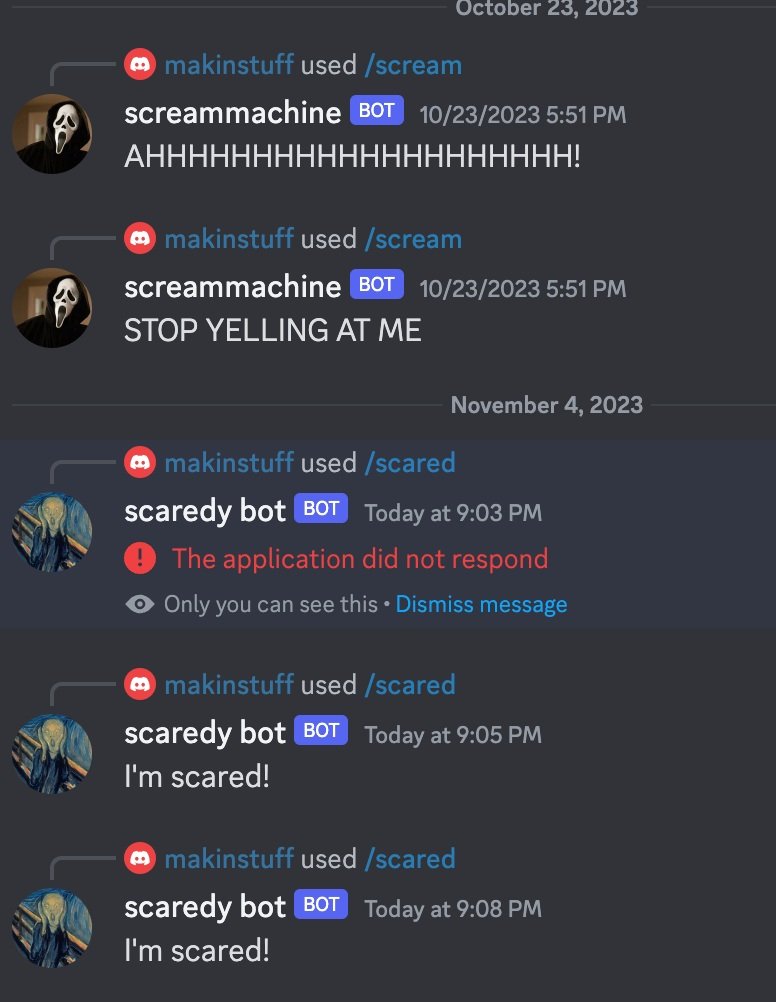

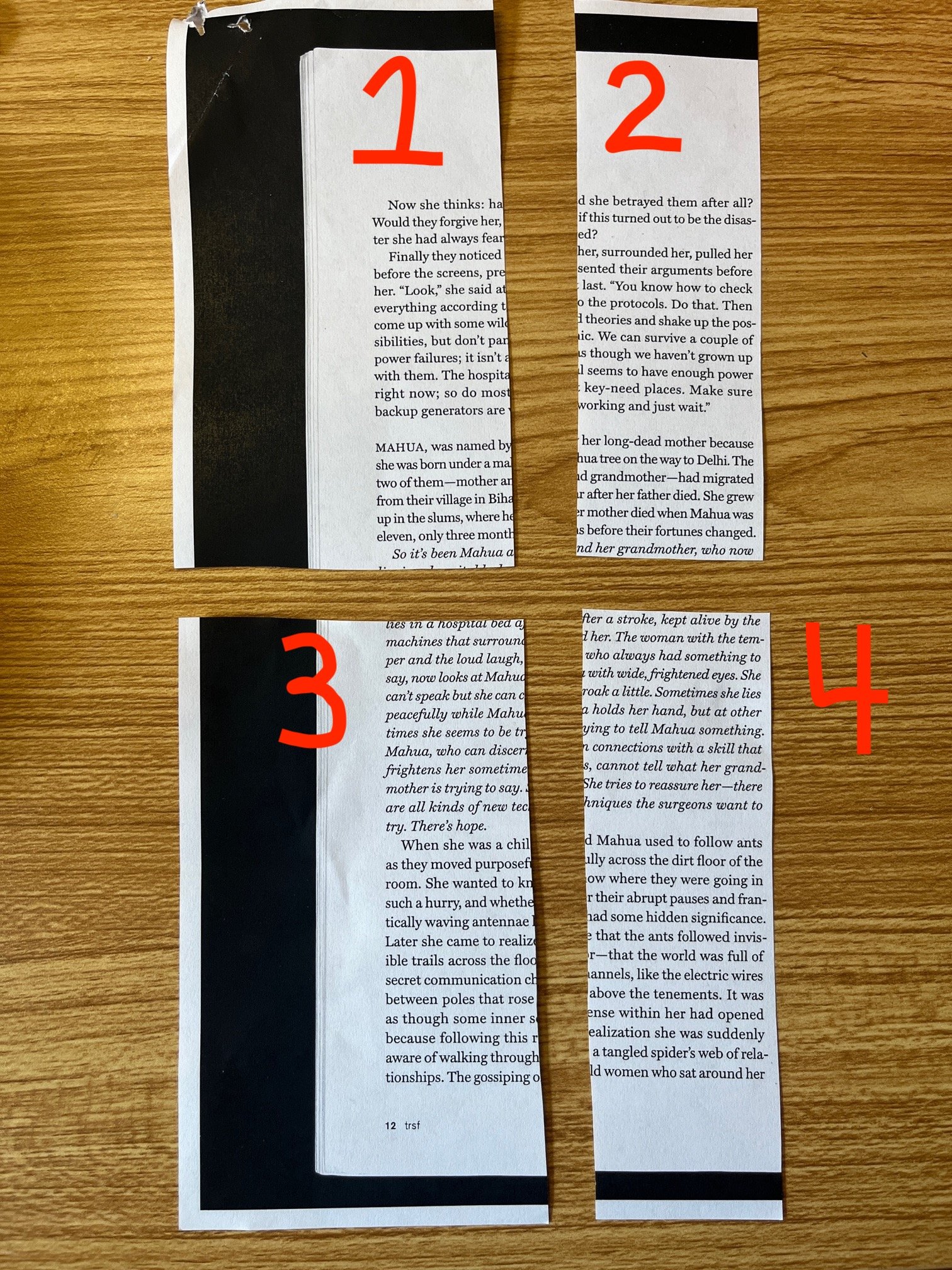

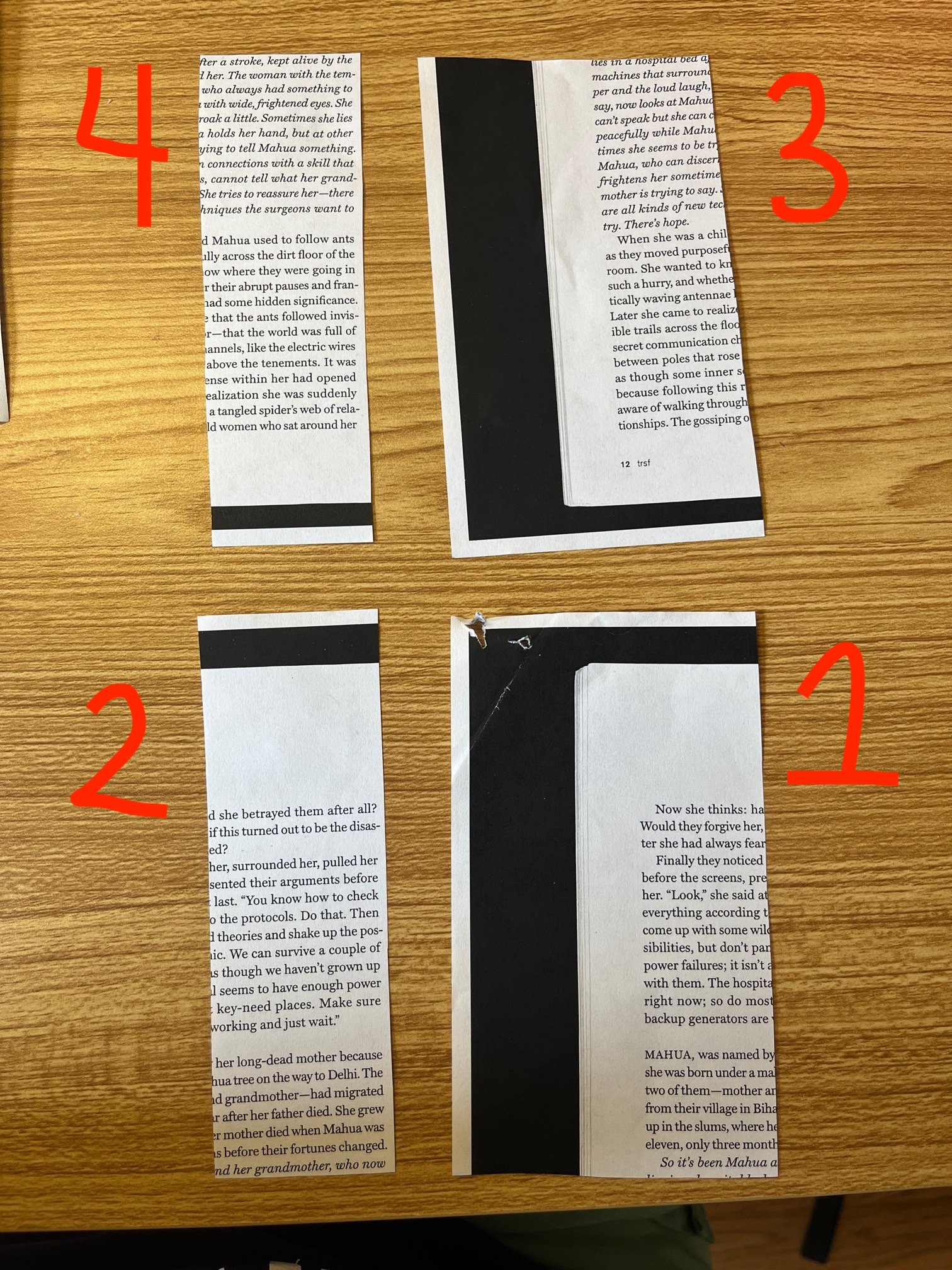

Below are some more snapshots from another convo with the Llama. It felt like pulling teeth trying to get a physical description of myself; he was really fighting me on making any aesthetic assumptions. Eventually I got something pretty weird. The last two screenshots contain mostly otherworldly descriptions.


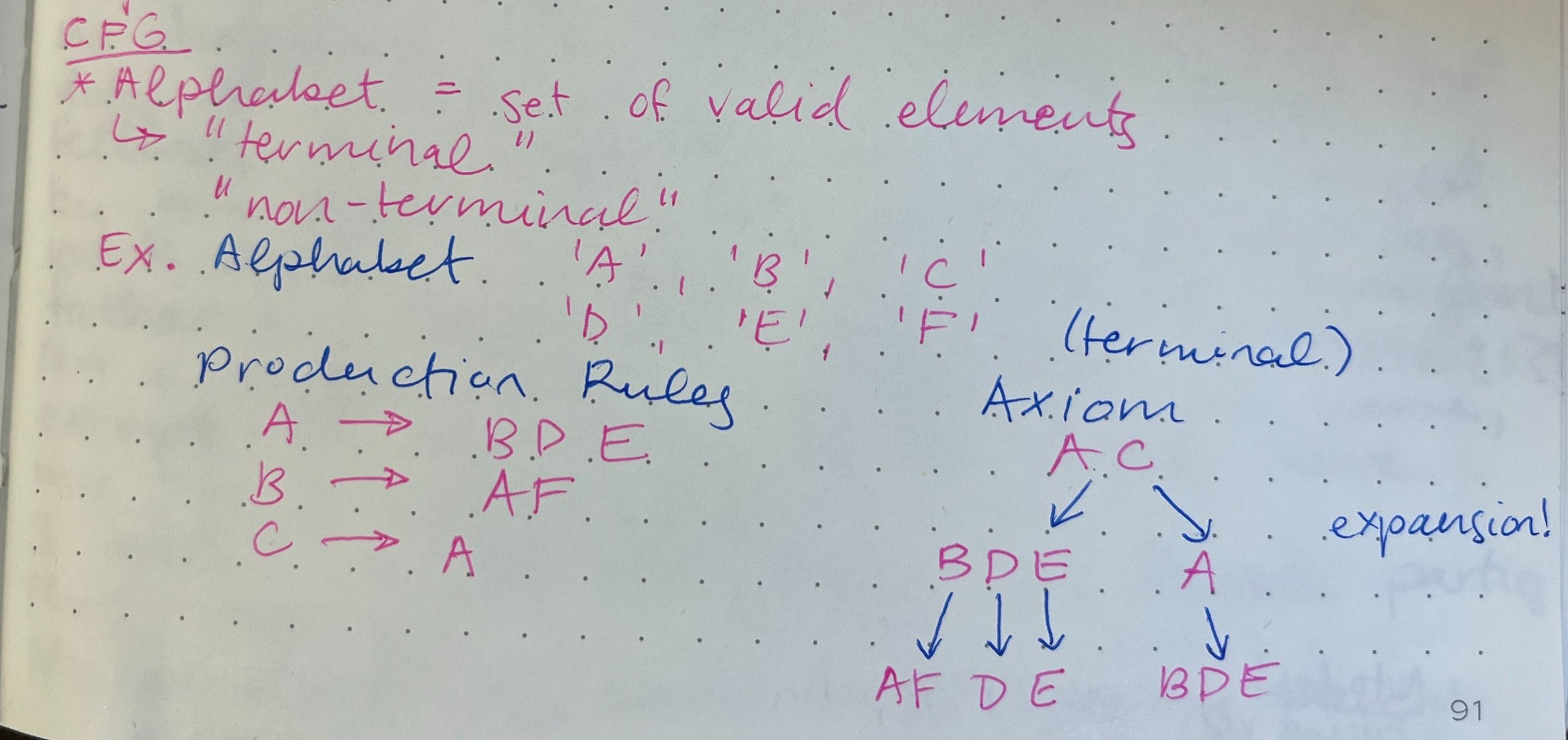

In office hours, Dan told me that the bot wasn’t actually going to my website and reading the text; it was purely guessing the most likely next token (a small chunk of text, somewhere between a letter and a word) based on its training data and our previous conversation … and then I realized that was what this whole class was about!!! So basically the bot is just predicting text and telling me what it thinks I want to hear. It’s not being “trained” on any of my website text or even reading it; it’s just making its best guess. Kinda ironic, but good that I finally understood this!
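
To make the "guess what comes next" idea concrete for myself, here is a toy sketch in plain JavaScript. It is not how Llama works internally (a real model uses a neural network over tokens); it just counts which word follows which in a tiny made-up training text and always picks the most frequent follower. The function names and the training sentence are my own inventions for illustration.

```javascript
// Toy next-word predictor: counts which word follows which in a tiny
// training text, then always picks the most frequent follower.
// Real models like Llama predict tokens with a neural network; this
// only illustrates the "guess what comes next" idea.

function trainBigrams(text) {
  const counts = new Map(); // word -> Map(nextWord -> count)
  const words = text.toLowerCase().split(/\s+/).filter(Boolean);
  for (let i = 0; i < words.length - 1; i++) {
    const followers = counts.get(words[i]) ?? new Map();
    followers.set(words[i + 1], (followers.get(words[i + 1]) ?? 0) + 1);
    counts.set(words[i], followers);
  }
  return counts;
}

function predictNext(counts, word) {
  const followers = counts.get(word.toLowerCase());
  if (!followers) return null; // never seen this word, so no guess
  let best = null;
  let bestCount = 0;
  for (const [next, count] of followers) {
    if (count > bestCount) {
      best = next;
      bestCount = count;
    }
  }
  return best;
}

function generate(counts, start, maxWords) {
  const out = [start.toLowerCase()];
  while (out.length < maxWords) {
    const next = predictNext(counts, out[out.length - 1]);
    if (next === null) break; // nothing learned for this word, stop
    out.push(next);
  }
  return out.join(" ");
}

// Made-up training text, purely for illustration.
const model = trainBigrams(
  "you have bright eyes you have a warm smile you have bright ideas"
);
console.log(generate(model, "you", 4)); // prints "you have bright eyes"
```

The toy never looks anything up. It only repeats the patterns it was trained on, which is roughly why the bot could "describe" me without ever visiting my website.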

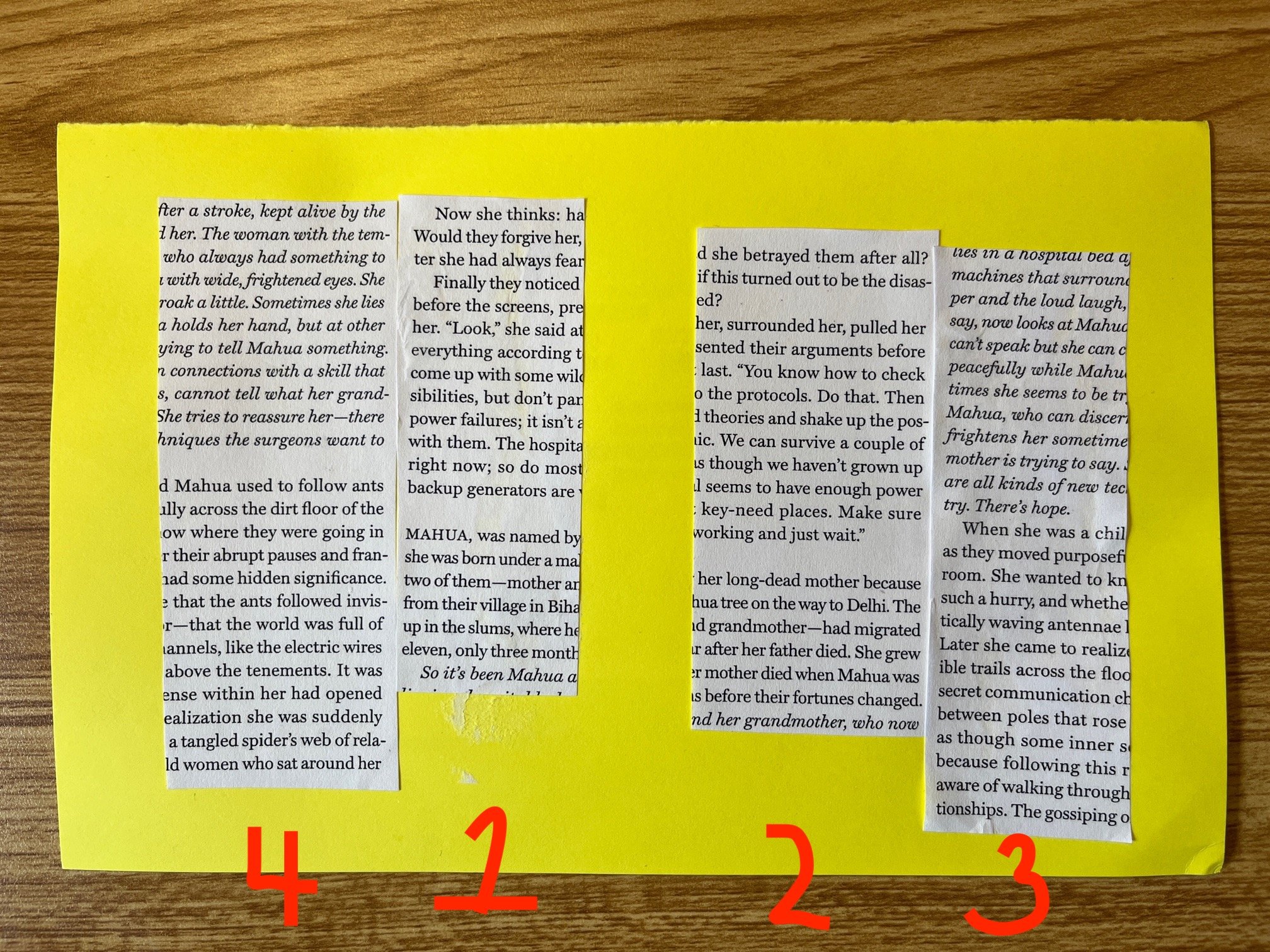

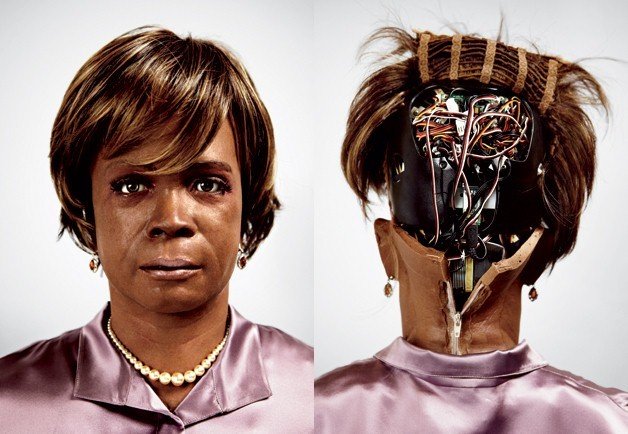

Either way, Mr. Llama is super complimentary when it comes to describing me, and the convo really helped to inflate my ego. Below, I categorized the descriptions by physical element and started a pencil sketch. Because the descriptions were really “out there,” I tried to lean into the kind of deity or god-like imagery sometimes found in representations of Hindu gods. There’s some kind of critique in this work, like AI playing god or something?!

Here’s the final, painted, AI-collaboration portrait. I think I look pretty good!

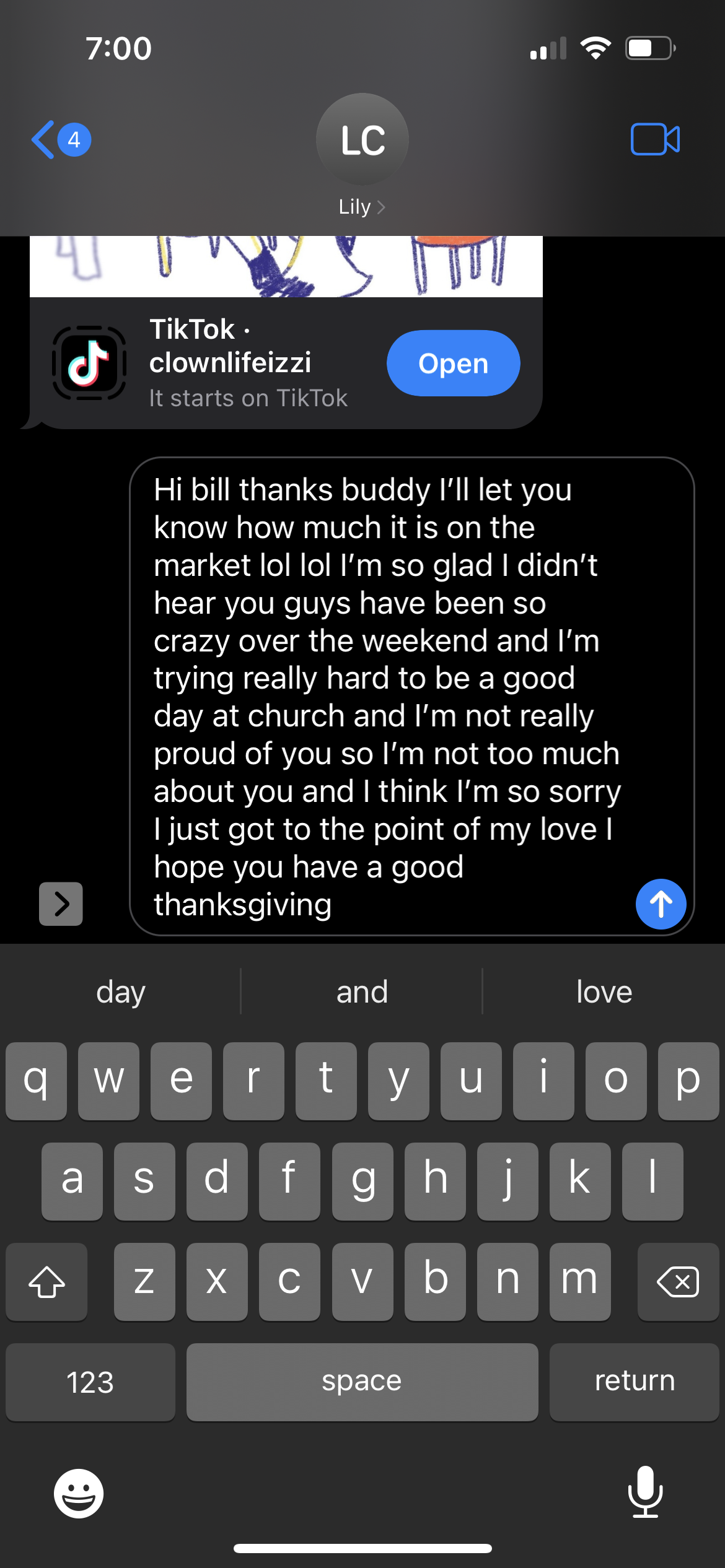

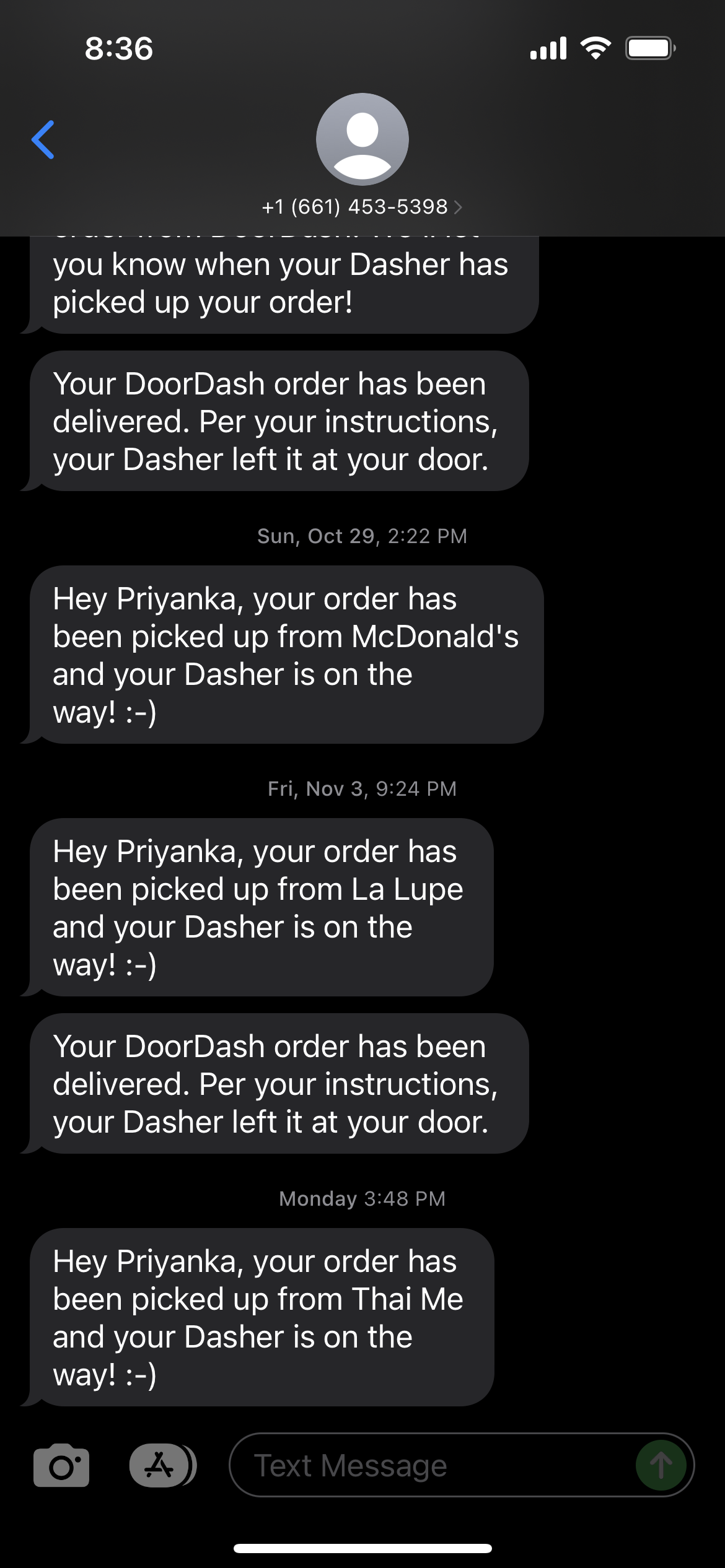

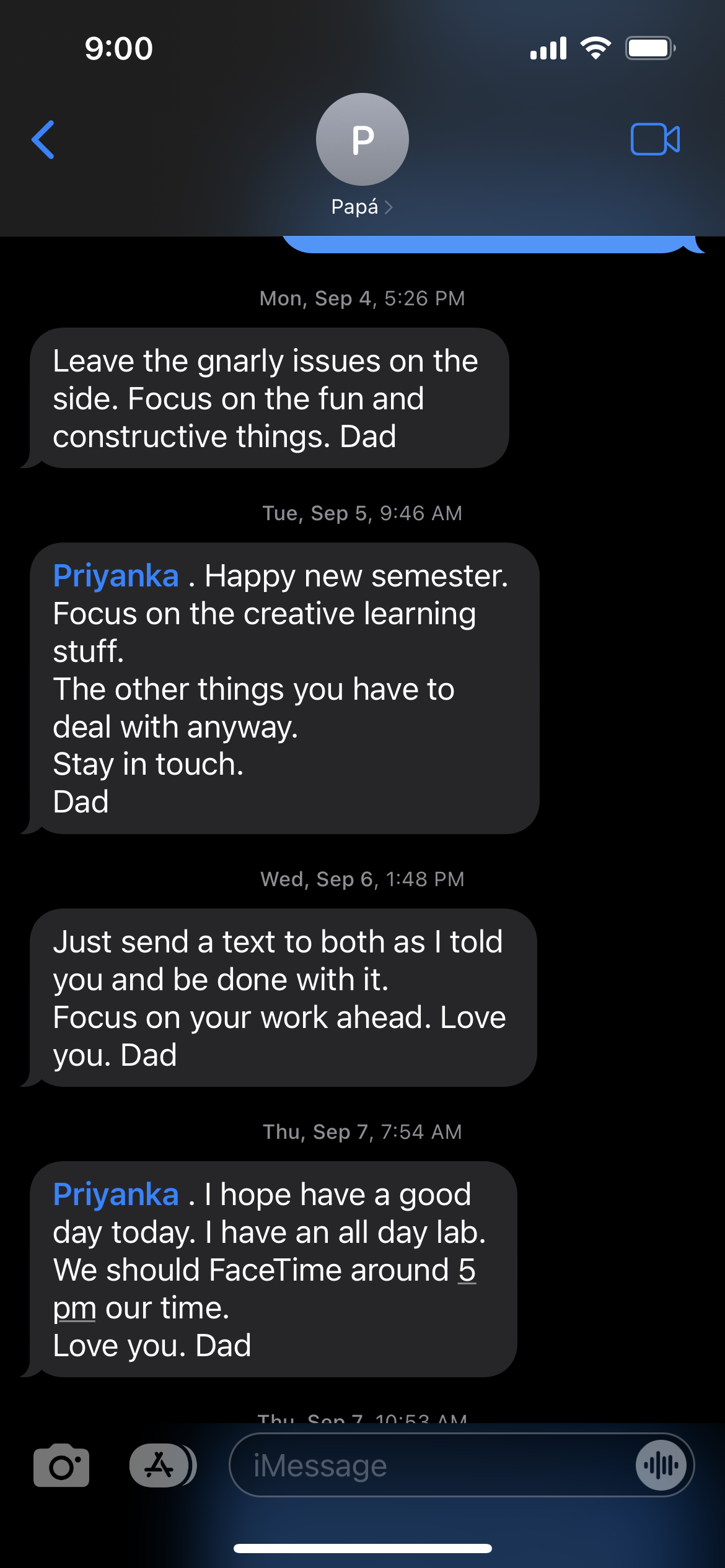

Priyanka Phone

I also revisited my assignment from a couple of weeks ago where I was generating texts from my Dad. Previously, I had no visual elements for that p5 sketch so I wanted to create an interface that looked like my own phone. I drew up some assets using my iPad and Procreate and made the sketch look more like my phone home screen. Now the generated texts come in periodically like they do on my actual phone. You can try out this sketch for yourself here.
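
For anyone curious how texts can "come in periodically," here is a minimal sketch of one way to do it, written as plain JavaScript so it runs outside p5. It is not my actual sketch code: the `TextInbox` class, its fields, and the placeholder messages are all hypothetical. The idea is to keep a queue of pending texts and release one each time a fixed interval has passed.

```javascript
// Hypothetical helper: releases one queued text each time `intervalMs`
// has passed since the previous arrival. The caller supplies the
// current time in milliseconds.
class TextInbox {
  constructor(pendingTexts, intervalMs) {
    this.pending = [...pendingTexts]; // texts waiting to "arrive"
    this.delivered = [];              // texts already shown on screen
    this.intervalMs = intervalMs;
    this.lastArrival = 0;             // time of the most recent arrival
  }

  // Call once per frame; returns the texts that should be visible.
  update(nowMs) {
    const due = nowMs - this.lastArrival >= this.intervalMs;
    if (this.pending.length > 0 && due) {
      this.delivered.push(this.pending.shift());
      this.lastArrival = nowMs;
    }
    return this.delivered;
  }
}

// Placeholder messages, not real generated texts.
const inbox = new TextInbox(["placeholder text A", "placeholder text B"], 5000);
inbox.update(1000);               // too early, nothing arrives
inbox.update(5000);               // first text arrives
inbox.update(7000);               // still waiting on the next interval
console.log(inbox.update(10000)); // second text arrives
```

In a p5 sketch, one option would be to call `inbox.update(millis())` inside `draw()` and render whatever it returns as notification bubbles.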

Trying to recreate my experience or relationship with my phone is interesting to me because in some ways it also feels like a self-portrait. These days our devices have become extensions of ourselves. Honestly, my relationship with my phone seems so shallow: sometimes I’m doomscrolling for hours until I’m completely hollow, and the only texts that really matter are the ones from my loved ones and DoorDash. Is everything a self-portrait?!

Future to-dos for this self-portrait:

Make all the texts for Priyanka. For variety's sake, some of the options for the DoorDash context-free grammar are people other than me, which doesn’t make sense if I’m trying to simulate my own phone.

Cindy also gave me a really small screen, and I would totally love to make this a physical piece at some point.

I’d also really like to make an unconventional phone enclosure to house the screen and the Raspberry Pi running my sketch. I would like to explore my relationship with my phone more, but at the moment it really feels like it makes my life worse. My attention span is shot. Any notification will completely rip my attention away from whatever I’ve been doing or thinking. How could I convey that in a physical form?

Is the screen a touchscreen? Can the sketch do something when you click on a text? Does it make sound?
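
Coming back to the first to-do: here is a rough sketch of how a context-free grammar could be pinned to one recipient. This is not the grammar from my sketch. The rule names, the message wording, and the `expand` function are invented for illustration, and the `#symbol#` markers are borrowed from Tracery-style notation without using Tracery itself. The fix is simply that the `name` rule has a single option.

```javascript
// Minimal context-free grammar expander. Rule names and wording are
// invented for illustration; they are not the sketch's actual grammar.
const grammar = {
  message: ["#greeting# #status#"],
  greeting: ["Hi #name#!", "#name#,"],
  name: ["Priyanka"], // a single option, so every text addresses the phone's owner
  status: ["your order is on the way.", "your order has been delivered."],
};

// Picks one option for `symbol`, then recursively expands any
// #symbol# markers inside it. `pick` returns a number in [0, 1)
// and defaults to Math.random; passing a fixed function makes the
// output repeatable.
function expand(symbol, rules, pick = Math.random) {
  const options = rules[symbol];
  const choice = options[Math.floor(pick() * options.length)];
  return choice.replace(/#(\w+)#/g, (_, inner) => expand(inner, rules, pick));
}

console.log(expand("message", grammar));
```

Because `name` only ever expands to "Priyanka," every generated DoorDash text is addressed to me, while the greeting and status rules still provide variety.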